This is a full list of statistics on the number of parameters in ChatGPT-4 and GPT-4o.

Although OpenAI has not publicly disclosed the architecture of its recent models, including GPT-4 and GPT-4o, various experts have made estimates.

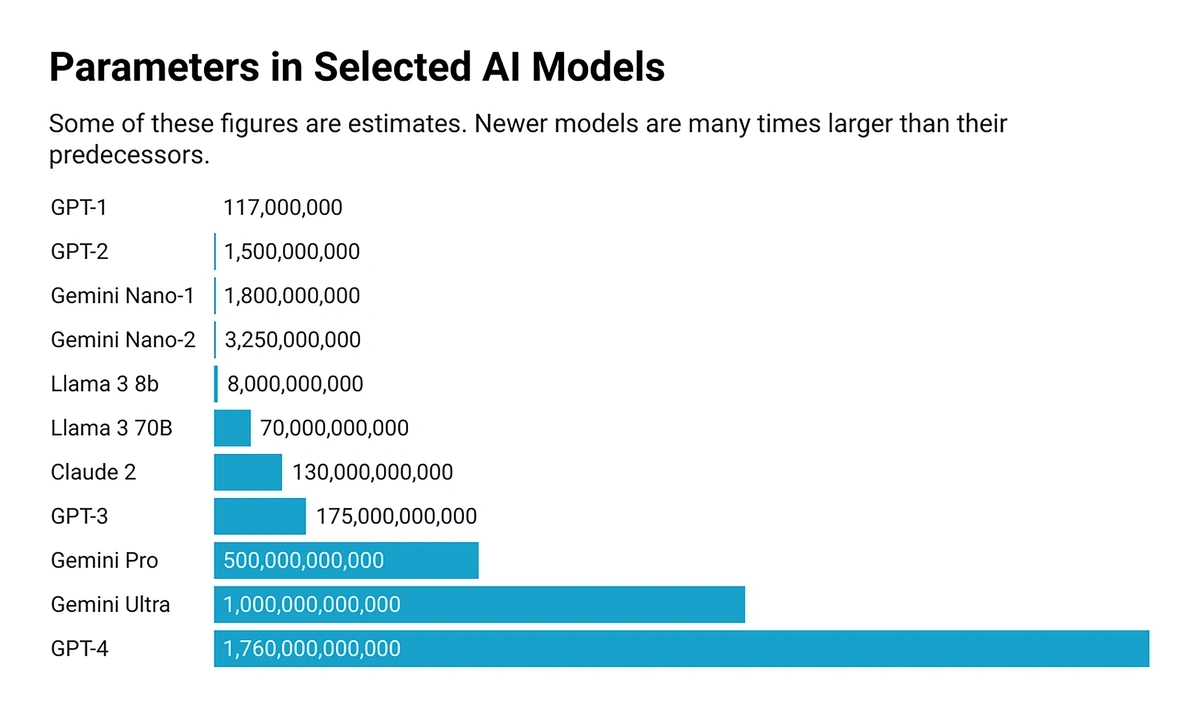

Although these estimates vary somewhat, they all agree on one thing: GPT-4 is massive. It is much larger than previous models and many competitors.

However, more parameters do not necessarily mean better.

In this article, we explore the details of the parameters in GPT-4 and GPT-4o.

Key parameter statistics (top picks)

- ChatGPT-4 is estimated to have roughly 1.8 trillion parameters

- GPT-4 is therefore more than ten times larger than its predecessor, GPT-3

- ChatGPT-4o mini could be as small as 8 billion parameters

Number of parameters in ChatGPT-4

According to several sources, ChatGPT-4 has approximately 1.8 trillion parameters.

This estimate first came from AI experts such as George Hotz, who is also known as the first person to unlock the iPhone.

In June 2023, just a few months after GPT-4's release, Hotz publicly stated that GPT-4 consisted of approximately 1.8 trillion parameters. More specifically, the architecture consisted of eight models, each made up of 220 billion parameters.

GPT4 is 8 x 220B params = 1.7 trillion params https://t.co/dw4jrzfen2

Ok, I wasn't sure how widely to spread the GPT-4 rumors, but it seems Soumith is also confirming the same thing, so here is the quick clip!

So yes, GPT4 is technically 10x the size of GPT3, and all the small … pic.twitter.com/m2yiahgvs4

– Swyx 🌁 (@Swyx) June 20, 2023

Although this was not confirmed by OpenAI, the 1.8 trillion parameter claim was supported by several sources.

Shortly after Hotz's estimate, a SemiAnalysis report reached the same conclusion. More recently, a chart displayed at NVIDIA GTC24 seemed to support the 1.8 trillion figure.

And back in June 2023, Meta engineer Soumith Chintala corroborated the description in Hotz's tweet:

“I might have heard the same … information like this is passed around, but no one wants to say it out loud. GPT-4: 8 x 220B experts trained with different data/task distributions and 16-iter inference.”

Thus, we can say with reasonable confidence that GPT-4 has about 1.76 trillion parameters. But what exactly does the breakdown (eight experts of 220 billion parameters, or 16 of 110 billion) mean?
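As a quick sanity check, the two rumored breakdowns can be multiplied out. The short Python sketch below does only that arithmetic. It treats every parameter as belonging to exactly one expert, which is a simplifying assumption, because real mixture-of-experts models usually also share some layers (such as attention) across experts.

```python
# Rumored, unconfirmed GPT-4 breakdowns quoted in this article.
BILLION = 10**9

def total_params(num_experts: int, params_per_expert: int) -> int:
    """Naive total: number of experts times parameters per expert.

    Assumption: no parameters are shared between experts, so this is
    only a rough consistency check of the quoted figures.
    """
    return num_experts * params_per_expert

eight_by_220 = total_params(8, 220 * BILLION)
sixteen_by_110 = total_params(16, 110 * BILLION)

print(eight_by_220 / 10**12)           # → 1.76 (trillion)
print(sixteen_by_110 / 10**12)         # → 1.76 (trillion)
print(eight_by_220 == sixteen_by_110)  # → True
```

Both rumored configurations land on the same 1.76 trillion total, so the figures are at least internally consistent; the split only changes how the parameters are grouped.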

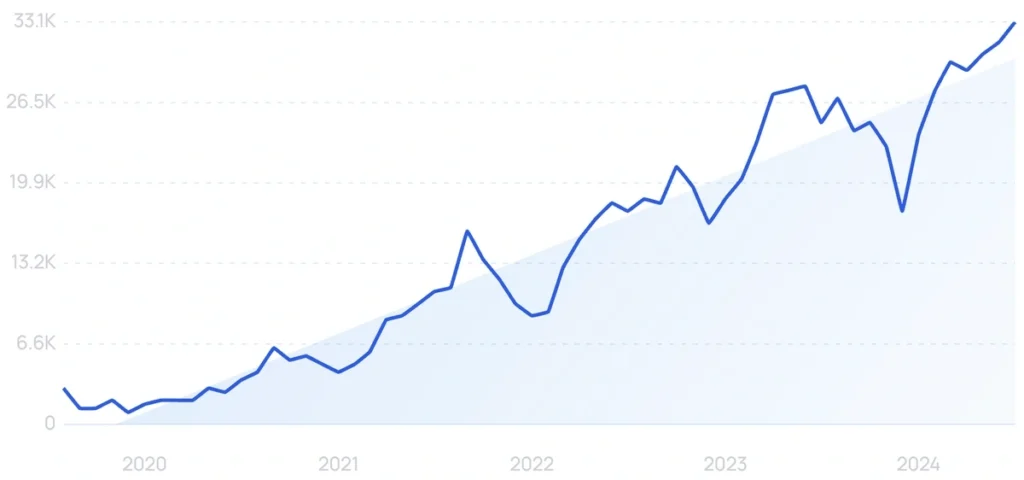

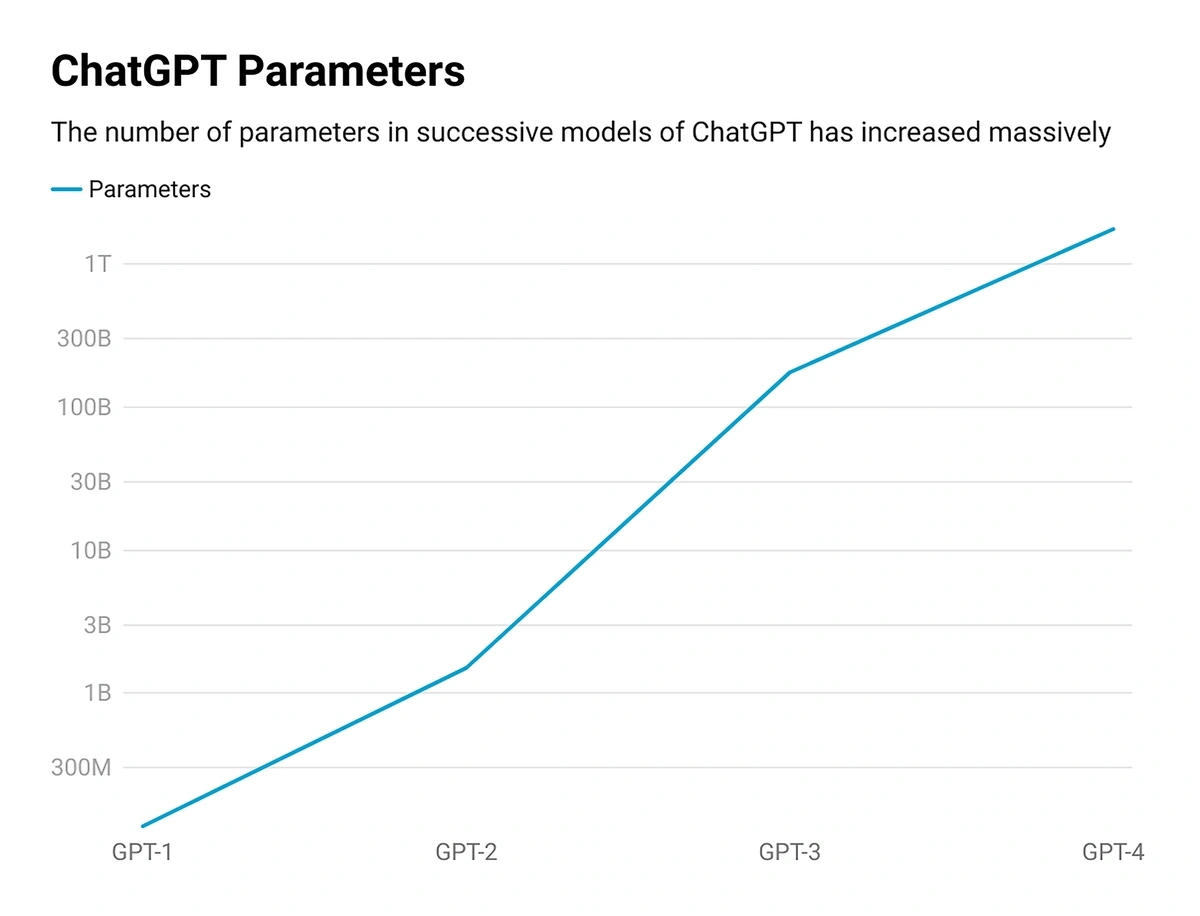

Evolution of ChatGPT parameters

ChatGPT-4 has 10x the parameters of GPT-3 (OpenAI)

Back when OpenAI still published such details, it confirmed the number of parameters in GPT-3: 175 billion.

ChatGPT-4 has more than 15,000 times the parameters of GPT-1 (MakeUseOf)

GPT-1 consisted of 117 million parameters.
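The headline ratios in this section follow directly from the figures quoted. The sketch below assumes the unconfirmed 1.76 trillion estimate for GPT-4; the GPT-1 and GPT-3 counts are the published ones given above.

```python
# Parameter counts quoted in this article. The GPT-4 value is an
# unconfirmed estimate; GPT-1 and GPT-3 were published by OpenAI.
PARAMS = {
    "GPT-1": 117_000_000,
    "GPT-3": 175_000_000_000,
    "GPT-4 (estimate)": 1_760_000_000_000,
}

def size_ratio(larger: str, smaller: str) -> float:
    """How many times more parameters `larger` has than `smaller`."""
    return PARAMS[larger] / PARAMS[smaller]

print(f"{size_ratio('GPT-4 (estimate)', 'GPT-3'):.1f}x")   # → 10.1x
print(f"{size_ratio('GPT-4 (estimate)', 'GPT-1'):,.0f}x")  # → 15,043x
```

The "10x" and "more than 15,000 times" claims in the headings above are these two ratios, rounded.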

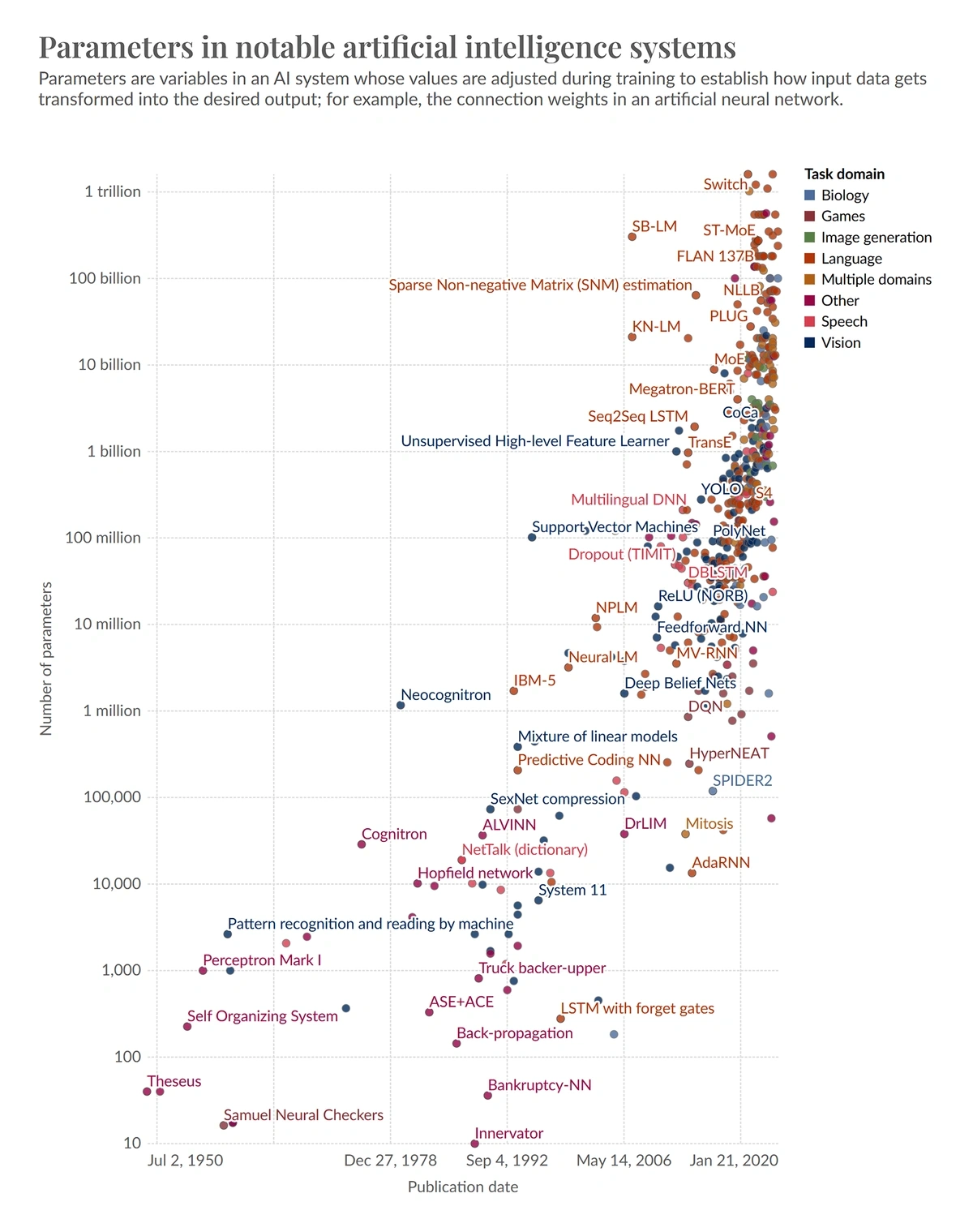

What are AI parameters?

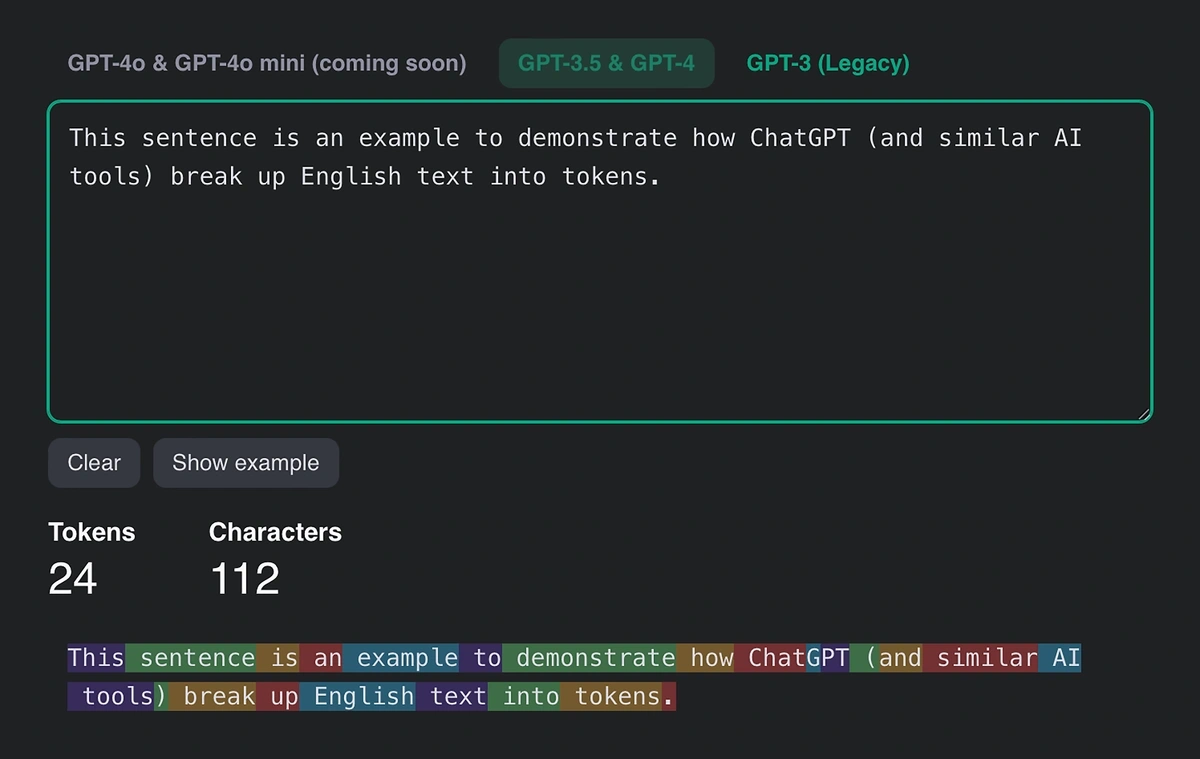

AI models like ChatGPT work by breaking text down into units called tokens. A token is roughly three-quarters of an English word.

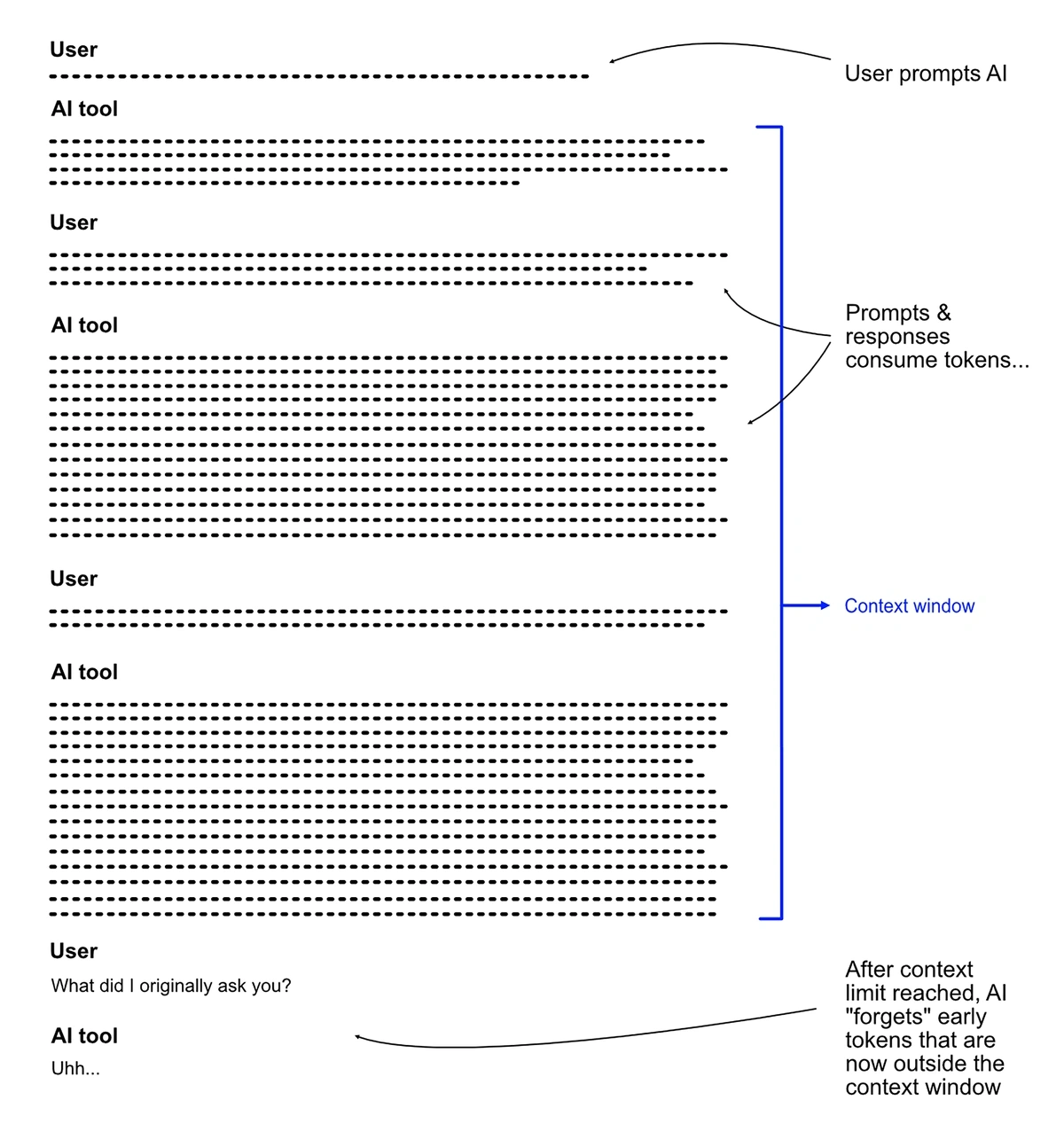

The number of tokens an AI can process at once is called the context length or context window. ChatGPT-4, for example, has a context window of 32,000 tokens. That is about 24,000 English words.
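Using the rule of thumb above (one token is roughly 0.75 English words), converting between tokens and words is a single multiplication. This is only an approximation: exact counts depend on the model's tokenizer (for OpenAI models, the `tiktoken` library can count tokens precisely).

```python
# Rule of thumb from the text; real tokenizers vary by language and content.
WORDS_PER_TOKEN = 0.75

def tokens_to_words(tokens: int) -> int:
    """Approximate how many English words fit in a given number of tokens."""
    return round(tokens * WORDS_PER_TOKEN)

def words_to_tokens(words: int) -> int:
    """Approximate how many tokens a given number of English words will use."""
    return round(words / WORDS_PER_TOKEN)

print(tokens_to_words(32_000))  # → 24000
print(words_to_tokens(24_000))  # → 32000
```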

Once you exceed this number, the model will start to “forget” the information sent earlier. This can cause errors and hallucinations.
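One common way a chat application stays within the window is to drop the oldest messages first, which produces exactly the kind of “forgetting” described above. The sketch below assumes that strategy and uses a hypothetical `rough_token_count` helper built on the same 0.75-words-per-token rule of thumb; real systems count tokens with the model's own tokenizer and may summarize old messages instead of discarding them.

```python
from collections import deque
from typing import Callable

WORDS_PER_TOKEN = 0.75  # rule of thumb from the text, not a real tokenizer

def rough_token_count(text: str) -> int:
    """Hypothetical estimator: word count divided by 0.75 words per token."""
    return max(1, round(len(text.split()) / WORDS_PER_TOKEN))

def fit_to_context(messages: list[str], max_tokens: int,
                   count_tokens: Callable[[str], int] = rough_token_count) -> list[str]:
    """Keep the most recent messages that fit in the window; older ones are dropped."""
    kept: deque[str] = deque()
    used = 0
    for message in reversed(messages):  # walk from newest to oldest
        cost = count_tokens(message)
        if used + cost > max_tokens:
            break  # this message and everything older no longer fits
        kept.appendleft(message)  # restore chronological order
        used += cost
    return list(kept)

history = ["my name is Ada", "I like chess", "what is my name"]
print(fit_to_context(history, max_tokens=9))  # → ['I like chess', 'what is my name']
```

Here the window only holds the last two messages, so the first one, which contains the user's name, is dropped before the model ever sees the final question.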

Parameters determine how an AI model processes these tokens. They are sometimes compared to neurons in the brain. The human brain has about 86 billion neurons. The connections and interactions between these neurons are fundamental to everything our brain (and therefore our body) does. They interpret information and determine how to respond.

Research shows that the addition of neurons and connections to a brain can help in learning. A jellyfish has only a few thousand neurons. A snake could have ten million. A pigeon, a few hundred million.

Likewise, AI models with more parameters have shown a greater capacity to process information.

This is not always the case, however.

More parameters are not always better

An AI with more parameters can generally process information better. However, there are drawbacks.

The most obvious is cost. OpenAI's Sam Altman said the company spent more than $100 million training GPT-4. Anthropic's CEO, meanwhile, warned that “by 2025, we may have a $10 billion model.”

This is the main reason OpenAI recently released GPT-4o mini, a “cost-efficient small model.” Although it is smaller than GPT-4 (that is, it has fewer parameters), it outperforms its predecessor on several important benchmarks.

Why ChatGPT-4 has several models

ChatGPT-4 is made up of eight models, each with 220 billion parameters. But what exactly does that mean?

Earlier AI models were built using the “dense transformer” architecture. GPT-3, Google PaLM, Meta Llama, and dozens of other early models used this formula. ChatGPT-4, however, uses a notably different architecture.

Instead of bundling all of its parameters into a single network, GPT-4 uses the “mixture of experts” (MoE) architecture. This is not a new idea, but OpenAI's use of it is.

Each of GPT-4's eight models is made up of two “experts.” In total, GPT-4 has 16 experts, each with 110 billion parameters. As you might expect, each expert specializes.

Therefore, when GPT-4 receives a request, it can route it through one or two of its experts: the ones most capable of processing and responding to it.
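To make the routing step concrete, here is a toy top-k gating function in Python, in the style of published mixture-of-experts models. It is a sketch under stated assumptions, not OpenAI's implementation: the 16-expert and top-2 values come from the rumors quoted above, the gate scores are passed in by hand rather than produced by a learned layer, and each “expert” is a stand-in function. Note also that in published MoE models, routing usually happens per token at each MoE layer rather than once per request.

```python
import math

# Assumed settings, taken from the unconfirmed GPT-4 rumors in this article.
NUM_EXPERTS = 16
TOP_K = 2  # "one or two" experts; we use two

def softmax(scores: list[float]) -> list[float]:
    """Turn raw gate scores into probabilities (shifted by the max for stability)."""
    peak = max(scores)
    exps = [math.exp(s - peak) for s in scores]
    total = sum(exps)
    return [e / total for e in exps]

def route(gate_scores: list[float], k: int = TOP_K) -> dict[int, float]:
    """Select the k highest-scoring experts and renormalize their weights to sum to 1."""
    probs = softmax(gate_scores)
    top = sorted(range(len(probs)), key=lambda i: probs[i], reverse=True)[:k]
    kept = sum(probs[i] for i in top)
    return {i: probs[i] / kept for i in top}

def moe_layer(x: float, gate_scores: list[float], experts, k: int = TOP_K) -> float:
    """Weighted sum of the selected experts' outputs; unselected experts never run."""
    weights = route(gate_scores, k)
    return sum(w * experts[i](x) for i, w in weights.items())

# Hypothetical toy experts: expert i simply multiplies its input by (i + 1).
experts = [lambda x, scale=i + 1: x * scale for i in range(NUM_EXPERTS)]

# Hand-picked gate scores that favor experts 3 and 7 equally.
scores = [0.0] * NUM_EXPERTS
scores[3] = scores[7] = 2.0

print(route(scores))                    # → {3: 0.5, 7: 0.5}
print(moe_layer(1.0, scores, experts))  # → 6.0  (0.5 * 4 + 0.5 * 8)
```

Because only the top-k experts are evaluated, the compute per step grows with k rather than with the total number of experts, which is the efficiency argument made in this section.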

In this way, GPT-4 can handle a range of complex tasks more cost-effectively and quickly. In reality, far fewer than 1.8 trillion parameters are actually in use at any given time.
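Under the rumored 16 × 110 billion configuration, a rough estimate of how many parameters are active at once takes only a few lines. The sketch assumes two experts are active and ignores any layers shared by all experts, so the real active count would be somewhat higher than this figure.

```python
BILLION = 10**9

# Rumored, unconfirmed GPT-4 configuration from this article.
NUM_EXPERTS = 16
PARAMS_PER_EXPERT = 110 * BILLION
ACTIVE_EXPERTS = 2  # "one or two" experts per request; we take the upper bound

total_params = NUM_EXPERTS * PARAMS_PER_EXPERT
# Simplification: ignore any layers shared by all experts (e.g. attention),
# so only the selected experts' weights count as "active".
active_params = ACTIVE_EXPERTS * PARAMS_PER_EXPERT

print(total_params // BILLION)                # → 1760
print(active_params // BILLION)               # → 220
print(f"{active_params / total_params:.1%}")  # → 12.5%
```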

ChatGPT-4o mini has about 8 billion parameters (TechCrunch)

According to an article published by TechCrunch in July, OpenAI's new GPT-4o mini is comparable to Llama 3 8B, Claude Haiku, and Gemini 1.5 Flash. Llama 3 8B is one of Meta's open source offerings, with just 8 billion parameters. That would make GPT-4o mini remarkably small, given its impressive performance on various benchmarks.

Number of parameters in ChatGPT-4o

As noted above, ChatGPT-4 may have approximately 1.8 trillion parameters.

However, the exact number of parameters in GPT-4o is somewhat less certain than for GPT-4.

OpenAI has not confirmed how many there are. However, OpenAI's CTO said that GPT-4o “brings GPT-4-level intelligence to everything.” If that is true, GPT-4o might also have 1.8 trillion parameters, an implication CNET drew.

However, this connection has not stopped other sources from offering their own guesses about GPT-4o's size.

The Times of India, for example, estimated that ChatGPT-4o has over 200 billion parameters.

Number of parameters in other AI models

Gemini Ultra may have more than 1 trillion parameters (Manish Tyagi)

Google, perhaps following OpenAI's example, has not publicly confirmed the size of its latest AI models. However, one estimate puts Gemini Ultra at more than 1 trillion parameters.

Gemini Nano-2 has 3.25 billion parameters (New Scientist)

Google's Gemini Nano is available in two versions. The first, Nano-1, has 1.8 billion parameters. The second, Nano-2, is larger, at 3.25 billion parameters. Both models are condensed versions of larger models and are intended to run on smartphones.

Llama 2 has 70 billion parameters (Meta)

Meta's open source model was trained on 2 trillion tokens, 40% more than Llama 1.

Claude 2 has more than 130 billion parameters (Cortex Text))

Claude 2 was released by Anthropic in July 2023.

Claude 3 Opus may have 137 billion parameters (Cogni Down Under)

Unfortunately, this estimate did not cite a source.

Claude 3 Opus may have more than 2 trillion parameters (Alan D. Thompson)

This estimate was made by Dr. Alan D. Thompson shortly after Claude 3 Opus was released. Thompson also guessed that the model was trained on 40 trillion tokens.

ChatGPT-4 parameter estimates

Although the 1.76 trillion figure is the most widely accepted estimate, it is far from the only one.

ChatGPT-4 has 1 trillion parameters (Semafor)

The 1 trillion figure has been widely cited, including by reputable sources such as Semafor.

ChatGPT-4 has 100 trillion parameters (AX Semantics)

This is according to Cerebras CEO Andrew Feldman, who claims to have learned it in a conversation with OpenAI.

ChatGPT-4 was trained on 13 trillion tokens (The Decoder)

According to The Decoder, one of the first outlets to report the 1.76 trillion figure, ChatGPT-4 was trained on approximately 13 trillion tokens. This training set reportedly included both text and code. It was likely drawn from web crawls such as CommonCrawl and may also have included data from social media sites like Reddit. There is a chance OpenAI also included information from textbooks and other proprietary sources.

Conclusion

Unfortunately, many AI developers, OpenAI included, have become reluctant to publicly disclose the number of parameters in their new models.

However, experts have made estimates of the sizes of many of these models.

While models like ChatGPT-4 have continued the trend toward ever-larger models, more recent offerings like GPT-4o mini may signal a shift in focus toward more cost-efficient tools.