Your iPhone has an AI subject detection tool built into the Magnifier app. Don't worry if this is news to you; it was news to me, too. With all the buzz around big-name AI tools like ChatGPT and Google Bard, this humble tool has flown a bit under the radar.

Using AI, the iOS Magnifier app can detect and identify subjects through the camera, then describe them with on-screen text and spoken descriptions. The app can also use the same detection system to identify doors and people. It is an impressive accessibility feature that can describe an environment to people who cannot see it for themselves.

While the Magnifier app is available on most iPhones, subject detection requires a LiDAR scanner, which is found only on the Pro and Pro Max models from the iPhone 12 Pro onward. This means that if you have a standard iPhone model, you won't be able to use AI subject detection.

If you have an iPhone 12 Pro or later, the AI-powered Magnifier tool is easy to use, and this guide will show you how.

How to use the AI-powered iOS Magnifier to detect your surroundings

The screenshots below were taken on an iPhone 15 Pro. Subject detection in the Magnifier app is available only on iPhone Pro models, from the iPhone 12 Pro onward. You must be running at least iOS 16.

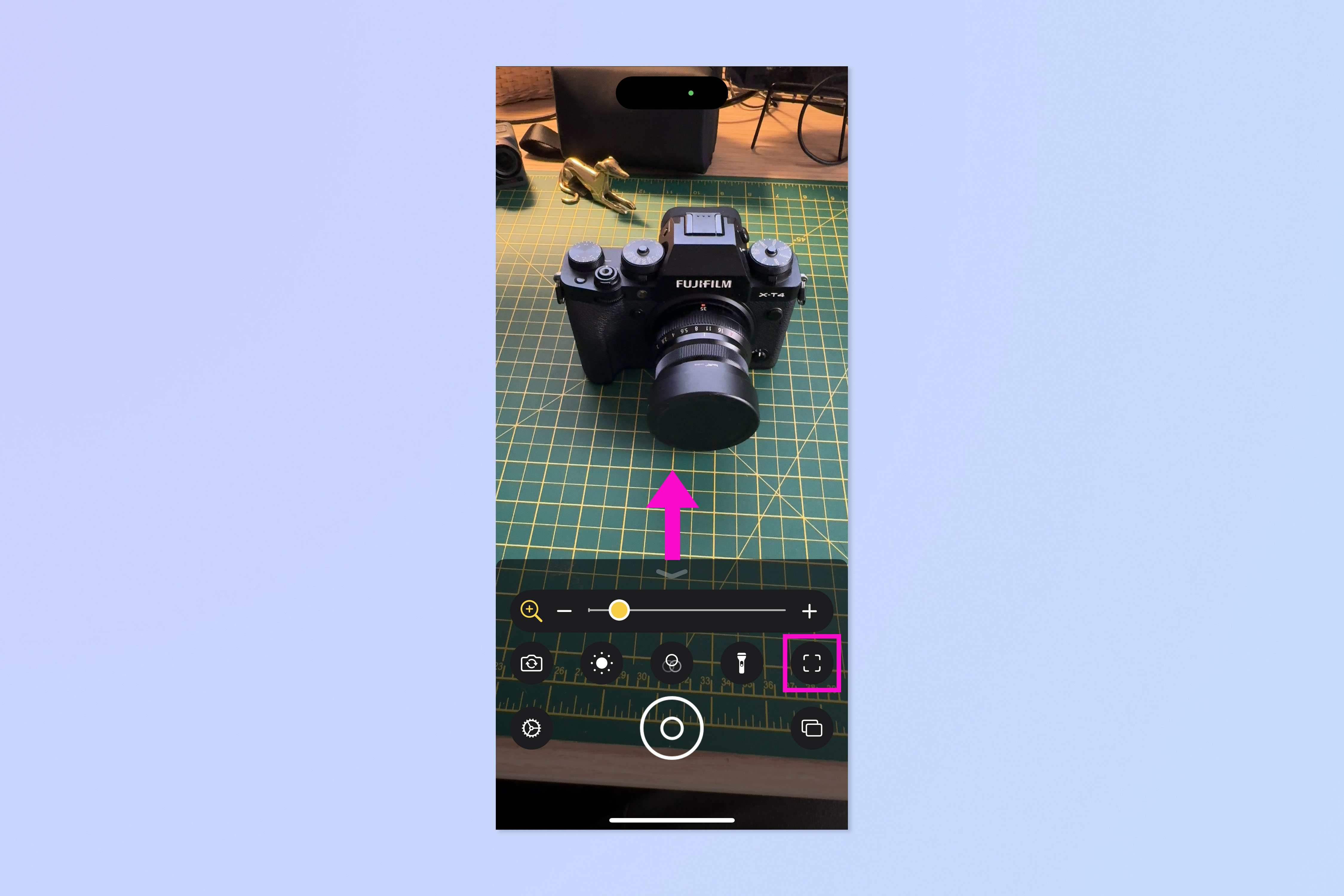

1. Open the Magnifier app, swipe up and tap the box icon

(Image: © Future)

First, open the Magnifier app, then swipe up on the bottom panel and tap the box icon on the right side.

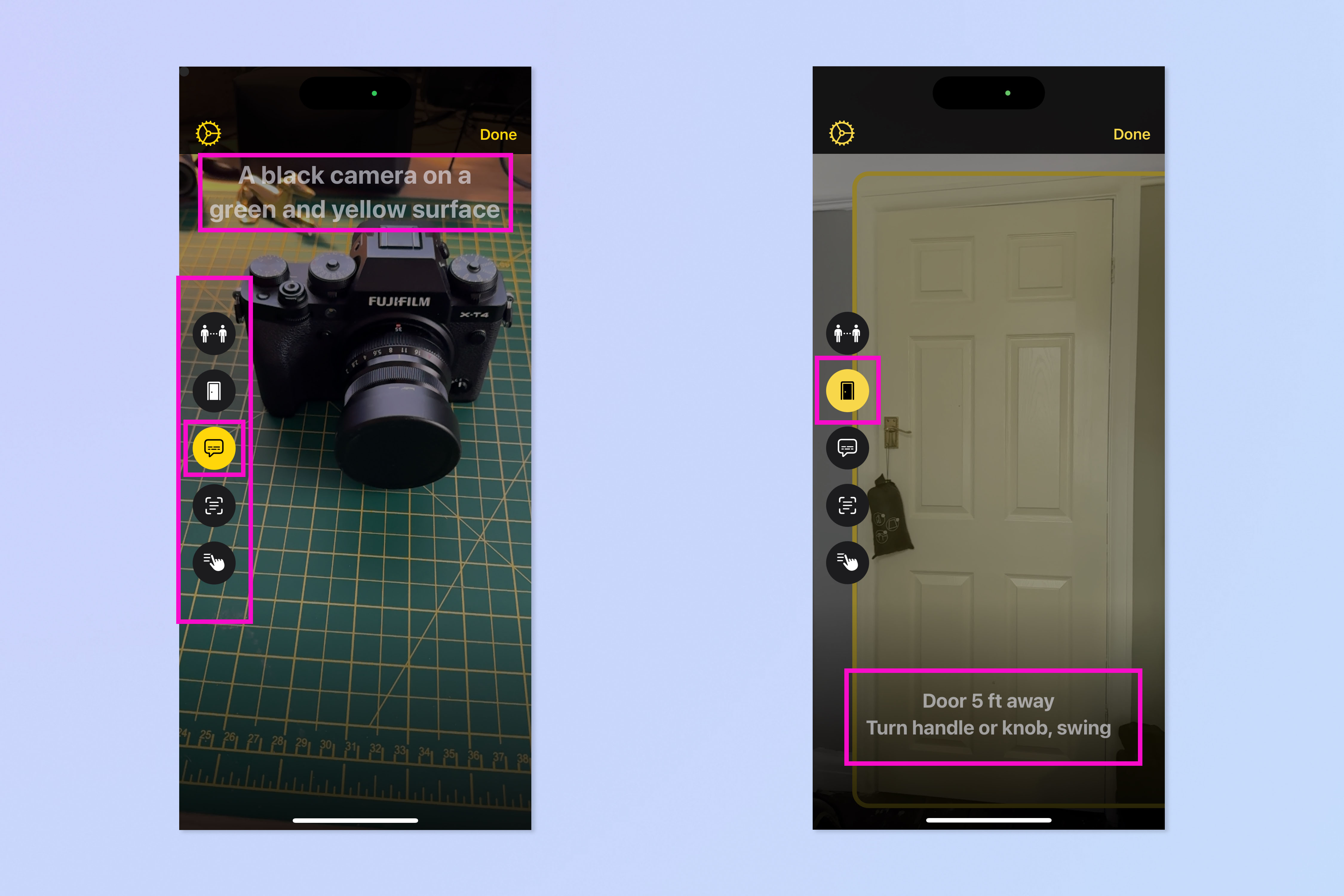

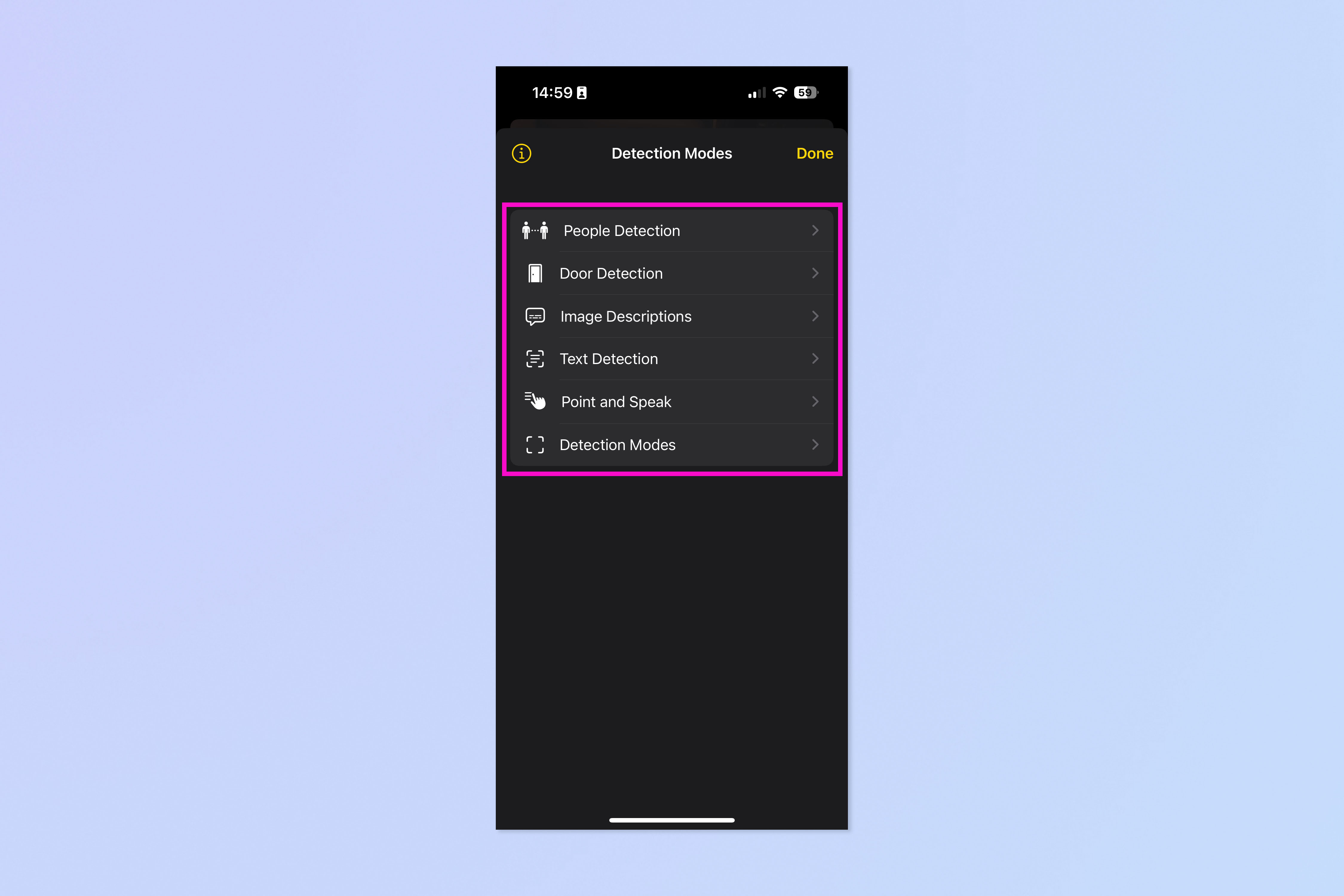

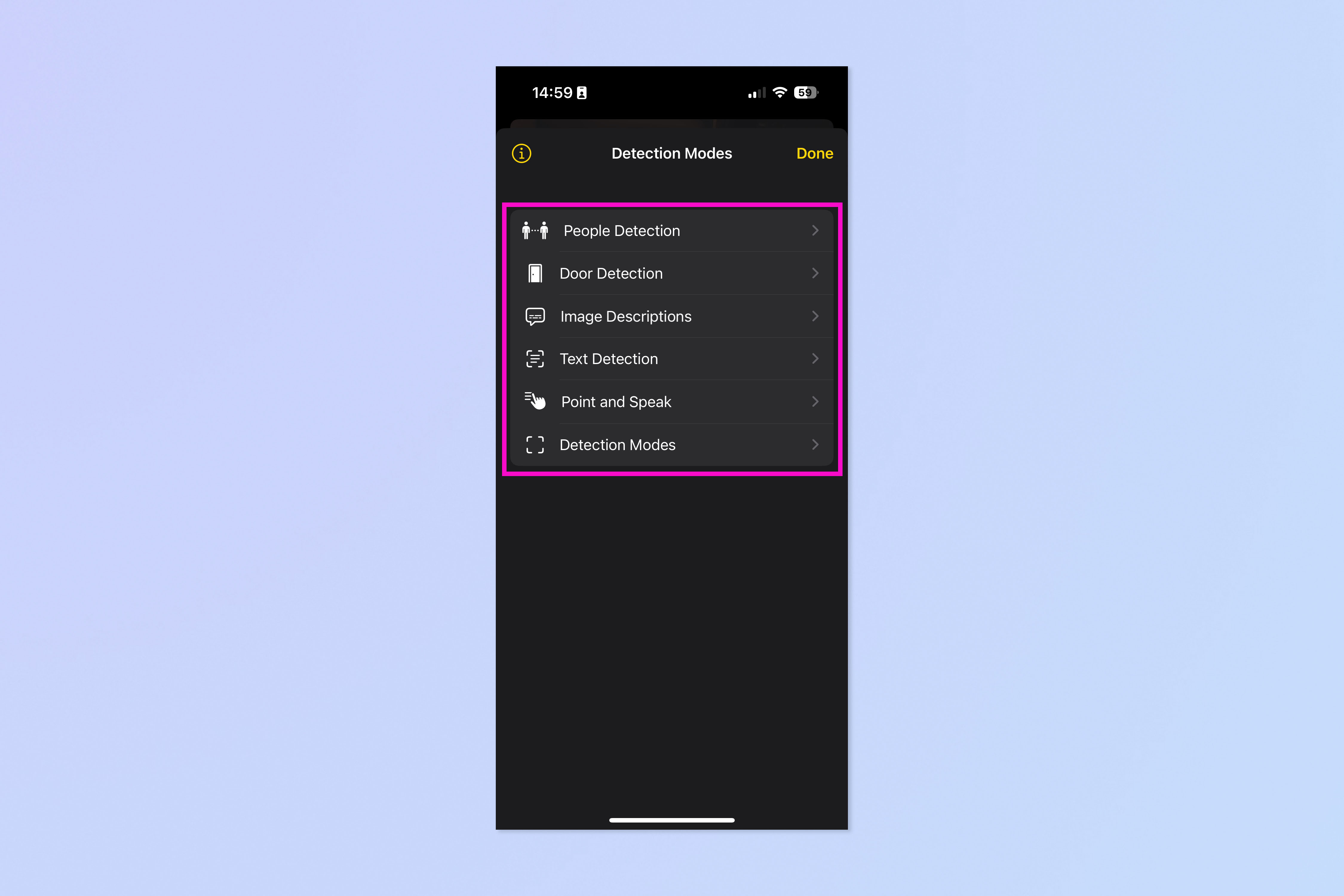

2. Choose a detection mode and point your camera at a subject

(Image: © Future)

The detection modes will appear on the left. Tap one, then point the camera at a subject. Your iPhone will identify the subject and tell you what it sees. The speech bubble icon provides image descriptions of your surroundings and works somewhat like a general-purpose identification tool: it will simply try to identify everything on screen (here, a black camera in the screenshot on the left). By default, your iPhone only displays a text description (we'll show you how to change this later). The door icon detects doors and tells you how far away they are and what type of handle they have (as shown in the screenshot on the right).

The available detection modes are:

- People detection (tells you the distance to nearby people)

- Door detection (tells you the distance to nearby doors and their type)

- Image descriptions of your surroundings

- Text detection (reads out any text detected on screen)

- Point and Speak (describes what your finger is pointing at)

3. Point and Speak

(Image: © Future)

All detection modes work similarly, except Point and Speak. Tap the bottom icon on the left to enter Point and Speak. This mode detects your finger in the frame and reads out what it is pointing at. Point somewhere within the frame until a yellow box appears and your phone describes what it sees. By default, this mode speaks and doesn't provide text descriptions (we'll show you how to change this afterwards).

4. (Optional) Tap the settings cog to change the settings

(Image: © Future)

If you want to change the settings for one or more detection modes, tap the settings cog at the top left.

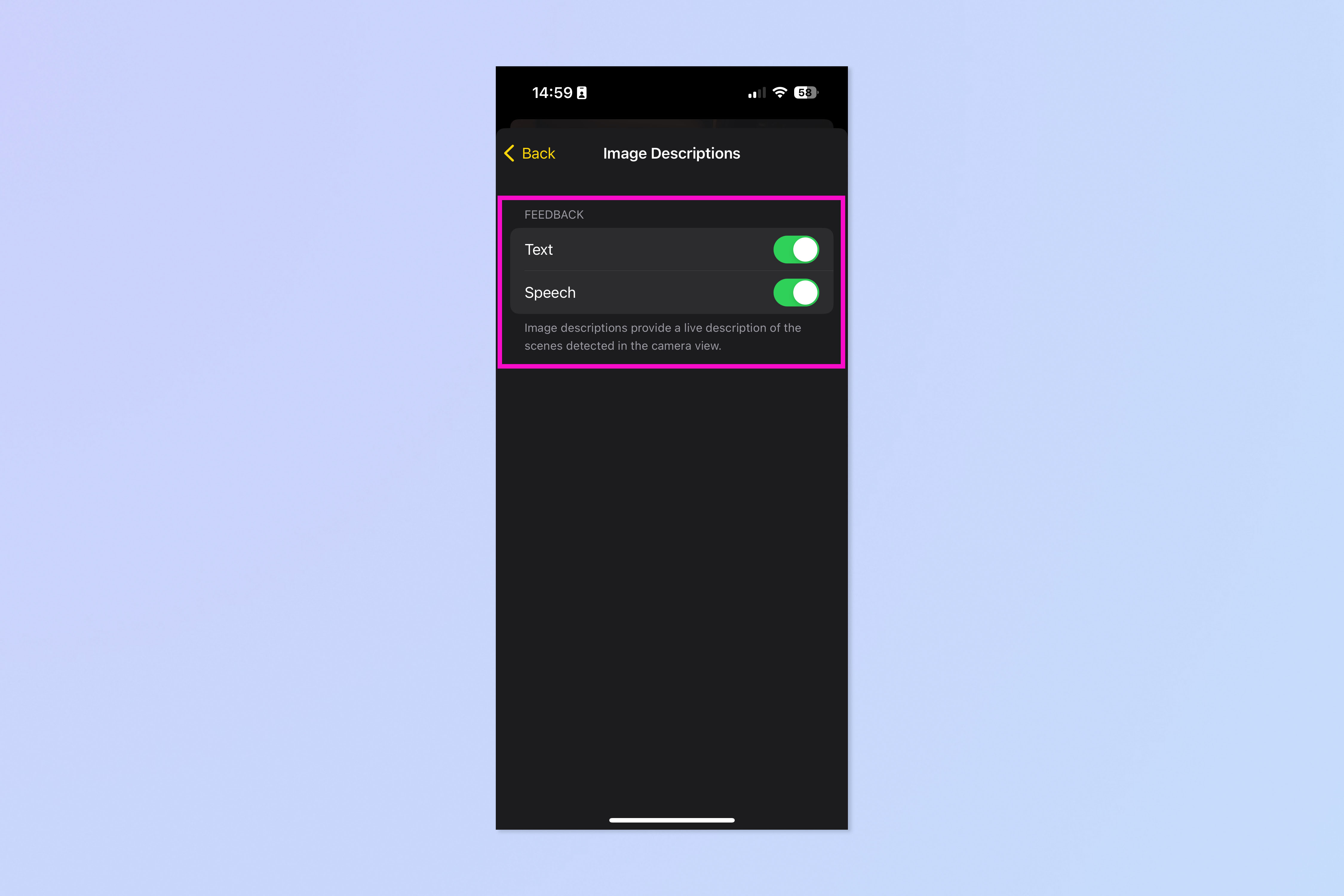

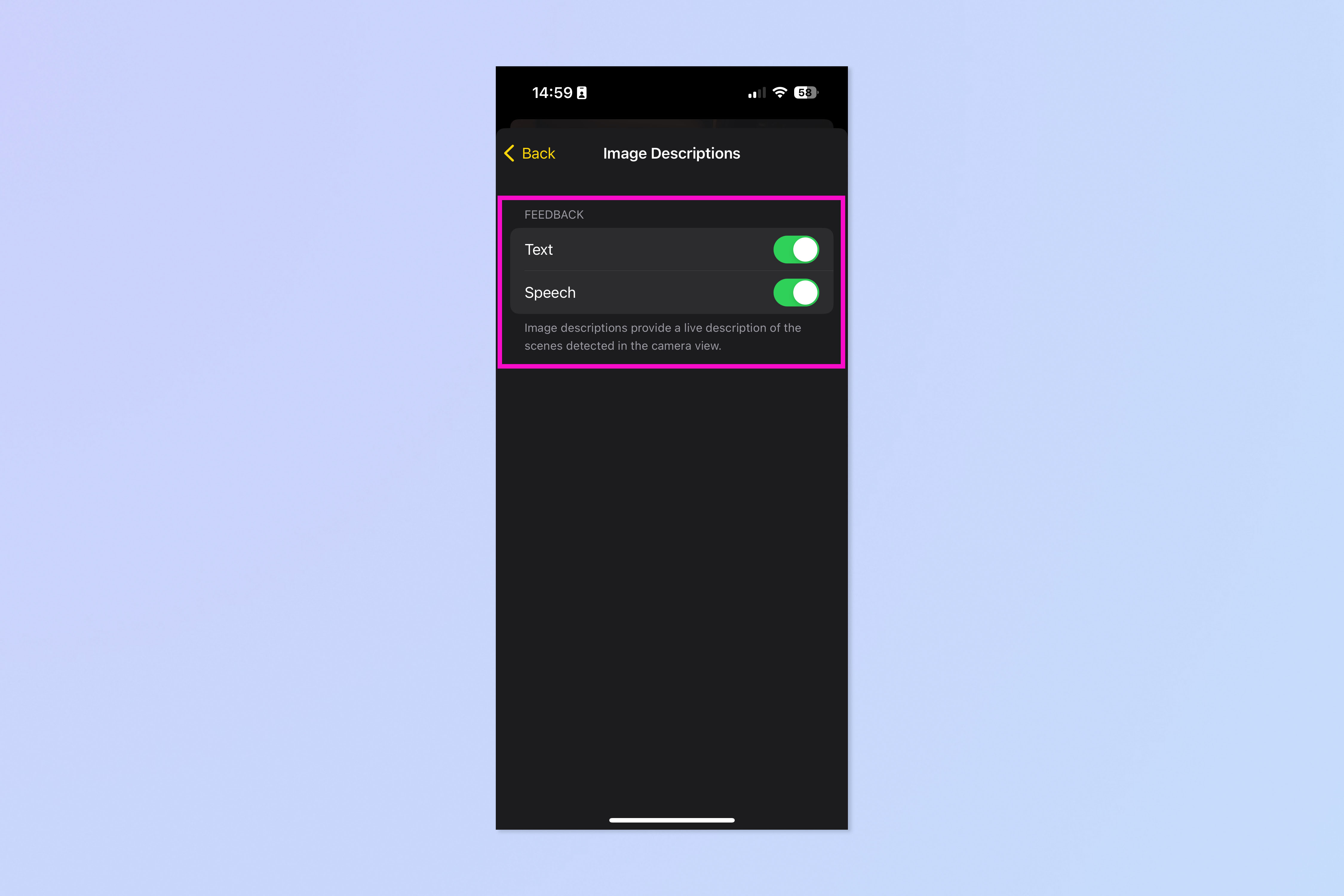

5. Select a detection mode to customize

(Image: © Future)

Select a detection mode.

6. Apply custom settings

(Image: © Future)

Now make any changes you want to the defaults. The settings differ between detection modes. This is where you would switch between spoken results and text-only results, for example.

If you want to learn more ways to use your iPhone, see our other tutorials, including how to clear RAM on iPhone, how to transfer data from iPhone to iPhone and how to delete multiple contacts on iPhone.