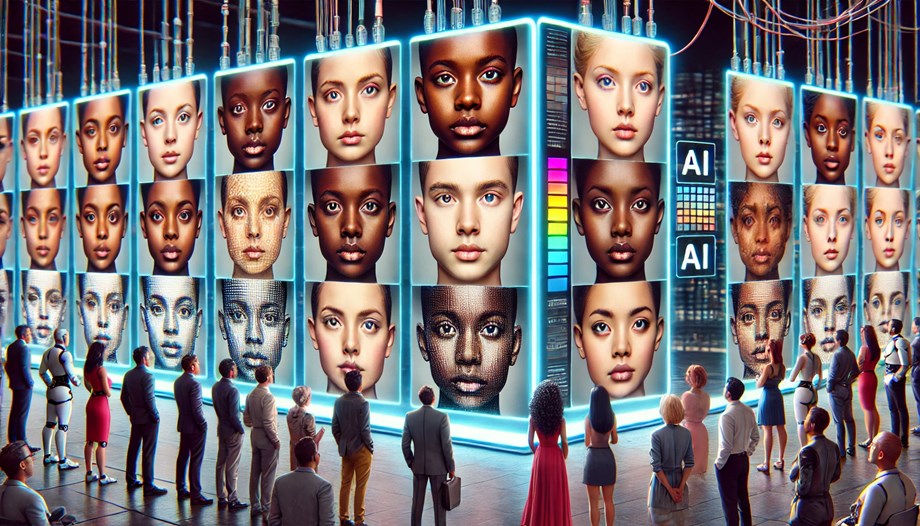

AI-generated images are reshaping digital content. However, the technology is not immune to societal biases, and recent research has uncovered troubling evidence of racial disparities in AI-generated imagery. Although AI-powered tools are often presented as objective, studies suggest that these systems consistently favor White individuals over people of color, raising concerns about representation, fairness, and digital discrimination.

A new study titled “Racial Bias in AI-Generated Images”, authored by Yiran Yang and published in AI & Society (2025), explores racial and gender biases in AI-powered image generation tools. The study focuses on a Chinese AI-powered beauty app, analyzing how its image-to-image generation system replicates or alters the race and gender of individuals in uploaded photos. The findings reveal systematic biases: the app reproduced White individuals more accurately while frequently misrepresenting Black and East Asian individuals – especially women. The study also highlights how these biases reinforce white normativity and perpetuate harmful beauty standards.

The problem of racial bias in AI-generated images

AI-generated imagery relies on machine learning algorithms trained on vast datasets of human faces. However, these datasets are often skewed toward certain racial groups, leading AI systems to perform more accurately for some populations than others. This study examined the image transformation accuracy of a popular AI beauty app, analyzing 1,260 AI-generated images of individuals from three racial groups – White, Black, and East Asian – across different contexts.

The results showed a stark disparity in how accurately the app reproduced each racial group:

- White individuals were depicted with the highest accuracy in all racial contexts.

- Black individuals were depicted with the lowest accuracy in single-person images.

- East Asian males were misrepresented more often when appearing with another person of a different race.

- When racial inaccuracies occurred, the AI system most frequently depicted non-White individuals as White.

This means that people of color were often “whitened” by AI systems, reinforcing white normativity – the idea that Whiteness is the default or ideal standard in society. The study found that this bias was consistent across different contexts, suggesting that the app’s underlying AI model has an inherent preference for White features.
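The accuracy figures above come down to comparing the race of the person in the uploaded photo with the race perceived in the generated image. As a minimal sketch of that kind of tally, and not the study's actual procedure, the Python below assumes each image has already been annotated by a human coder. The `records` values, the function names, and the labels are all invented for illustration.

```python
from collections import Counter, defaultdict

# Hypothetical annotations: (race in the uploaded photo, race a human coder
# assigned to the generated image). These values are invented, not study data.
records = [
    ("White", "White"),
    ("White", "White"),
    ("Black", "Black"),
    ("Black", "White"),
    ("East Asian", "East Asian"),
    ("East Asian", "White"),
    ("East Asian", "Black"),
]


def accuracy_by_group(pairs):
    """Share of images per source group whose generated race matches the source."""
    totals, correct = Counter(), Counter()
    for source, generated in pairs:
        totals[source] += 1
        if source == generated:
            correct[source] += 1
    return {group: correct[group] / totals[group] for group in totals}


def error_destinations(pairs):
    """For each source group, count which race the mismatched outputs were rendered as."""
    destinations = defaultdict(Counter)
    for source, generated in pairs:
        if source != generated:
            destinations[source][generated] += 1
    return {group: dict(counts) for group, counts in destinations.items()}


print(accuracy_by_group(records))
print(error_destinations(records))
```

A pattern like the one the study describes would show up here as lower accuracy for non-White source groups, with "White" dominating the error destinations for those groups.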

The impact of AI bias on representation and identity

One of the most troubling aspects of racial bias in AI-generated images is the concept of representational harm. This occurs when AI systems reinforce stereotypes, erase certain racial features, or fail to accurately depict marginalized groups. The study highlights how this can affect users in multiple ways:

- Diminished self-identification – People of color who use AI-powered beauty apps may struggle to see themselves represented, leading to feelings of alienation or exclusion.

- Reinforcement of White beauty standards – AI tools that lighten skin tones and alter facial features promote a Eurocentric ideal of beauty, further marginalizing non-White features.

- Bias in interracial representations – When AI struggles to accurately depict people of color in images containing multiple races, it distorts racial diversity and can erase non-White individuals from visual narratives.

For example, the study found that in interracial images featuring a White and an East Asian person, the AI system often altered the East Asian person’s features to appear Whiter. This suggests that the AI prioritizes White facial features, leading to misrepresentation and potential reinforcement of societal hierarchies.

Why AI image generators are failing people of color

The study identifies multiple factors contributing to racial bias in AI-generated images, including:

- Training Data Bias: Many AI models are trained on datasets heavily skewed toward White individuals, leading to poor generalization when generating images of people of color.

- Algorithmic Design Flaws: The feature extraction process in AI image generators may place higher weight on White facial structures, leading to more accurate White representations and distorted non-White features.

- Cultural Norms Embedded in AI: AI models developed in certain cultural contexts (such as the Chinese beauty industry) may reflect regional beauty standards, which often favor lighter skin tones and European facial features.

- Lack of Regulatory Oversight: Unlike facial recognition technology, AI image generators face little regulation or scrutiny, allowing racial biases to persist unchecked.

The study argues that these biases are not accidental but a reflection of systemic inequalities that have been transferred into AI models. It highlights how AI technologies, even when developed in non-White-majority countries, can still reinforce global racial hierarchies due to the influence of postcolonial beauty norms and digital globalization.

Moving forward: How to make AI image generators more equitable

The findings of this study raise urgent questions about AI ethics and fairness in image generation. Addressing racial bias in AI-generated images requires a multifaceted approach, including:

- More Representative Training Data – AI developers must diversify training datasets to ensure equal representation of all racial groups, particularly Black and East Asian individuals.

- Bias Audits and Transparency – Companies should conduct regular audits to identify and correct racial biases in their AI models.

- User Control Over AI Outputs – Apps should allow users to adjust AI-generated outputs to ensure they align with their actual racial identity rather than conforming to a default White standard.

- Stronger Ethical Guidelines – Governments and AI researchers must work together to establish regulations that ensure fair and unbiased AI-generated representations.

- Cultural Sensitivity in AI Design – AI developers should collaborate with diverse communities to understand and respect regional differences in beauty standards and identity.
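
To make the idea of a regular bias audit more concrete, one simple check is to compare per-group accuracy and flag the model when the gap between the best-served and worst-served group exceeds a tolerance. The sketch below is only an illustration of that idea: the function name `audit_accuracy_gap`, the `max_gap` threshold of 0.05, and the accuracy numbers are assumptions, not values from the study or from any standard.

```python
def audit_accuracy_gap(group_accuracy, max_gap=0.05):
    """Check whether per-group accuracy stays within a tolerated gap.

    group_accuracy: mapping of group name -> accuracy in [0, 1].
    max_gap: hypothetical tolerance; a real audit would need to justify it.
    Returns (passed, gap, worst_group).
    """
    best = max(group_accuracy.values())
    worst_group = min(group_accuracy, key=group_accuracy.get)
    gap = best - group_accuracy[worst_group]
    return gap <= max_gap, gap, worst_group


# Invented accuracies for illustration only.
example = {"White": 0.95, "Black": 0.80, "East Asian": 0.85}
passed, gap, worst_group = audit_accuracy_gap(example)
print(f"passed={passed}, gap={gap:.2f}, worst-served group={worst_group}")
```

A single gap metric is a coarse signal. A fuller audit would also look at which groups errors are converted into and at intersections such as race and gender, which the study reports as mattering.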

As AI becomes more integrated into everyday life – from social media filters to facial recognition systems – there is an urgent need for fairer, more inclusive AI models. Tackling racial bias in AI-generated images is not just about technology – it is about representation, identity, and social justice in the digital age.