What can exploding stars tell us about how blood flows through an artery? Or what can swimming bacteria tell us about how the ocean’s layers mix? A collaboration of researchers at universities, science philanthropies and national laboratories has taken an important step toward training artificial intelligence models to find and exploit transferable knowledge between seemingly disparate fields to drive scientific discovery.

This initiative, called Polymathic AI, uses technology similar to that which powers large language models such as OpenAI’s ChatGPT or Google’s Gemini. But instead of ingesting text, the project’s models learn from scientific datasets spanning astrophysics, biology, acoustics, chemistry, fluid dynamics and more, essentially giving the models interdisciplinary scientific knowledge.

“These groundbreaking datasets are by far the most diverse large-scale collections of high-quality data for machine learning training ever assembled in these fields,” says Polymathic AI member Michael McCabe, a research engineer at the Flatiron Institute in New York. “Curating these datasets is a crucial step in creating multidisciplinary AI models that will enable new discoveries about our universe.”

Today, the Polymathic AI team released two of its collections of open-source training datasets (a colossal 115 terabytes in total, drawn from dozens of sources) for the scientific community to use to train AI models and enable new scientific discoveries. (For comparison, GPT-3 used 45 terabytes of uncompressed, unformatted text for training, which ended up being around 0.5 terabytes after filtering.)

“Freely available datasets provide an unprecedented resource for developing sophisticated machine learning models that can then solve a wide range of scientific problems,” says Ruben Ohana, a member of Polymathic AI and a researcher at the Flatiron Institute’s Center for Computational Mathematics (CCM). “The machine learning community has always been open source; this is why the pace has been so fast compared to other fields. We believe that sharing this open-source data will benefit both the scientific and machine learning communities. It’s a win-win situation: machine learning researchers can develop new models, and at the same time, scientific communities can see what machine learning can do for them.”

The full datasets can be downloaded free of charge from the Flatiron Institute and accessed on Hugging Face, a platform that hosts AI models and datasets. The Polymathic AI team provides more information on the datasets in two papers accepted for presentation at the leading machine learning conference NeurIPS, which will be held in December in Vancouver, Canada.

“We’ve seen time and time again that the most effective way to advance machine learning is to take difficult challenges and make them accessible to the broader research community,” McCabe says. “Every time a new benchmark is released, it initially seems like an insurmountable problem, but once a challenge is made accessible to the broader community, we see more and more people taking it up and accelerating progress faster than any individual group could alone.”

The Polymathic AI project is led by researchers from the Simons Foundation and its Flatiron Institute, New York University, the University of Cambridge, Princeton University, the French National Center for Scientific Research and Lawrence Berkeley National Laboratory.

AI tools such as machine learning are becoming more common in scientific research and were even recognized in two of this year’s Nobel Prizes. However, these tools are usually purpose-built for a specific application and trained using data from that domain. By contrast, the Polymathic AI project aims to develop truly polymathic models, like people whose expert knowledge spans multiple domains. The project team itself reflects this intellectual diversity, with physicists, astrophysicists, mathematicians, computer scientists and neuroscientists.

The first of the two new collections of training datasets focuses on astrophysics. Nicknamed the Multimodal Universe, the dataset contains hundreds of millions of astronomical observations and measurements, such as portraits of galaxies taken by NASA’s James Webb Space Telescope and measurements of stars in our galaxy taken by the European Space Agency’s Gaia spacecraft.

“Machine learning has been around for about 10 years in astrophysics, but it is still very difficult to use across instruments, missions and scientific disciplines,” explains François Lanusse, a Polymathic AI researcher. “Datasets like the Multimodal Universe will allow us to create models that natively understand all of this data and can be used as a Swiss Army knife for astrophysics.”

In total, the dataset amounts to 100 terabytes, and assembling it was a major undertaking. “Our work, led by a dozen institutes and two dozen researchers, is paving the way for machine learning to become an essential part of modern astronomy,” says Micah Bowles, a member of Polymathic AI and a Schmidt AI in Science Fellow at the University of Oxford. “Assembling this dataset was only possible thanks to extensive collaboration not only within the Polymathic AI team, but also with many expert astronomers around the world.”

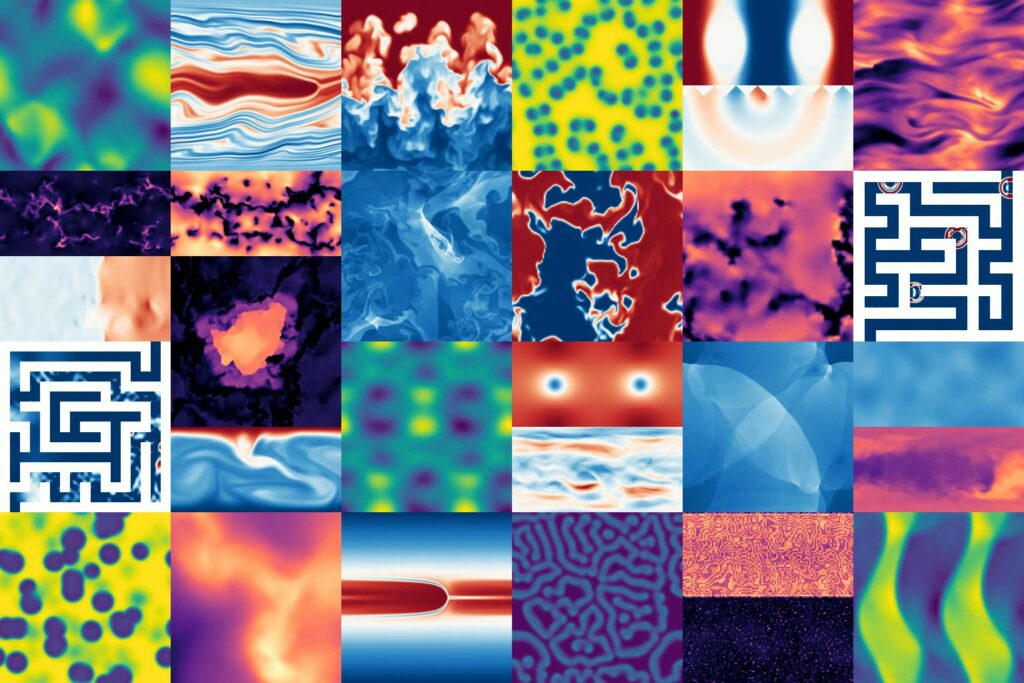

The other collection, called the Well, comprises more than 15 terabytes of data from 16 diverse datasets. These datasets contain numerical simulations of biological systems, fluid dynamics, acoustic scattering, supernova explosions and other complex processes. Although these datasets may seem unrelated at first, they all involve modeling with mathematical equations called partial differential equations. Such equations appear in problems ranging from quantum mechanics to embryo development, and they can be incredibly difficult to solve, even for supercomputers. One of the Well’s goals is to enable AI models to quickly and accurately produce approximate solutions to these equations.
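To make the idea of a simulation dataset concrete, here is a minimal sketch of how a single trajectory of a partial differential equation might be generated and stored. It assumes the one-dimensional heat equation on a periodic domain, solved with a simple explicit finite-difference scheme; this toy equation, the function name `simulate_heat_1d` and all of its parameters are hypothetical illustrations, not taken from the Well, whose simulations are far larger and more complex.

```python
import numpy as np


def simulate_heat_1d(n_points=64, n_steps=200, alpha=0.01, save_every=20):
    """Solve u_t = alpha * u_xx on the periodic domain [0, 1) (illustrative only).

    Returns the grid x and an array of saved snapshots with shape
    (n_steps // save_every + 1, n_points).
    """
    dx = 1.0 / n_points
    # The explicit scheme is stable when r = alpha * dt / dx**2 <= 0.5;
    # we pick r = 0.4 to stay safely inside that bound.
    dt = 0.4 * dx**2 / alpha
    r = alpha * dt / dx**2

    x = np.arange(n_points) * dx
    u = np.exp(-((x - 0.5) ** 2) / 0.01)  # initial condition: a Gaussian bump

    snapshots = [u.copy()]
    for step in range(1, n_steps + 1):
        # Second difference with periodic boundaries via np.roll.
        laplacian = np.roll(u, -1) - 2.0 * u + np.roll(u, 1)
        u = u + r * laplacian
        if step % save_every == 0:
            snapshots.append(u.copy())
    return x, np.stack(snapshots)


x, snapshots = simulate_heat_1d()
# A learned surrogate would be trained to map each snapshot to the next one.
inputs, targets = snapshots[:-1], snapshots[1:]
```

Each saved array is one "frame" of the evolving field; pairing consecutive frames gives the kind of input/target examples a machine learning model could use to learn a fast approximate time-stepper in place of the numerical solver.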

“This dataset encompasses a diverse range of physics simulations designed to address key limitations of current (machine learning) models,” explains Polymathic AI member and CCM researcher Rudy Morel. “We look forward to seeing models that perform well in all of these scenarios, as this would be a significant step forward.”

Collecting the data for these datasets was a challenge, says Ohana. The team collaborated with scientists to gather and create data for the project. “Creators of numerical simulations are sometimes skeptical of machine learning because of all the hype, but they are curious about how it can benefit their research and accelerate scientific discovery,” he says.

The Polymathic AI team itself is now using the datasets to train AI models. In the coming months, the team will deploy these models on various tasks to see how well these well-trained, well-rounded AIs solve complex scientific problems.

“Understanding how machine learning models generalize and interpolate across datasets from different physical systems is an exciting research challenge,” says Régaldo-Saint Blancard, a member of Polymathic AI and a researcher at CCM.

The Polymathic AI team has begun training machine learning models using the datasets, and “the initial results have been very interesting,” says Shirley Ho, Polymathic AI project leader and a group leader at the Flatiron Institute’s Center for Computational Astrophysics. “I also look forward to seeing what other AI scientists will do with these datasets. Just as the Protein Data Bank gave birth to AlphaFold, I can’t wait to see what the Well and the Multimodal Universe will help create.” Ho will give a talk at NeurIPS highlighting the use and incredible potential of this work.