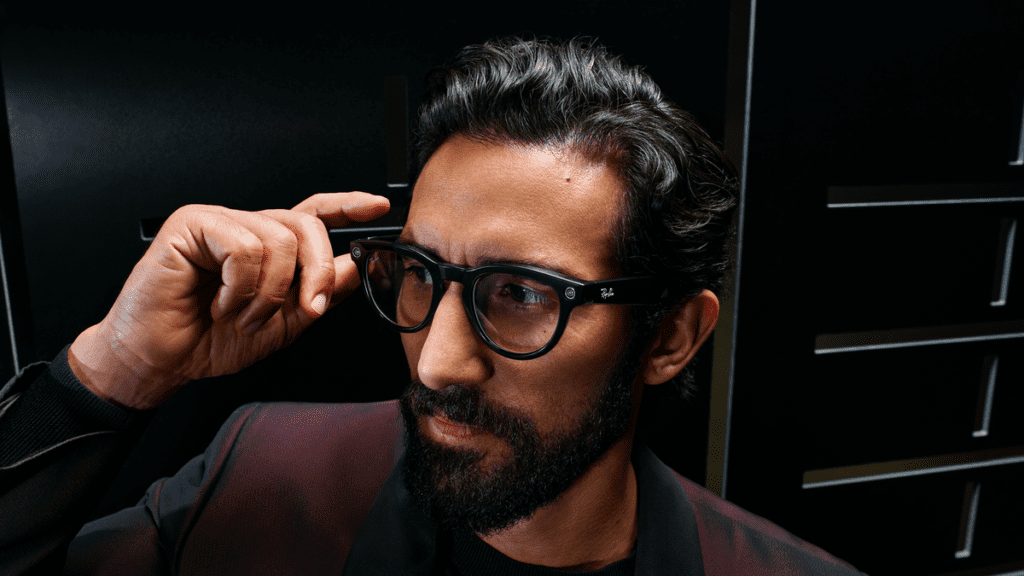

Ray-Ban Meta glasses now have an early access program for multimodal AI, letting you ask the assistant questions about what you're looking at.

The Ray-Ban Meta smart glasses launched at Connect 2023 as the successor to Ray-Ban Stories, the 2021 camera glasses that let you capture first-person photos and videos hands-free, take phone calls, and listen to music. Compared to the original model, the new glasses have an improved camera, an upgraded microphone array, water resistance, and Instagram livestreaming.

But their most significant new feature is Meta AI. Currently available only in the United States, it's a conversational assistant you can talk to by saying “Hey Meta”. Meta AI is far more advanced than the current Alexa, Siri, or Google Assistant because it is powered by Meta's Llama 2 large language model, the same type of technology that powers ChatGPT.

With the new early access program, Meta AI is no longer limited to speech input. It can now also answer queries about what you're looking at or the last photo you took.

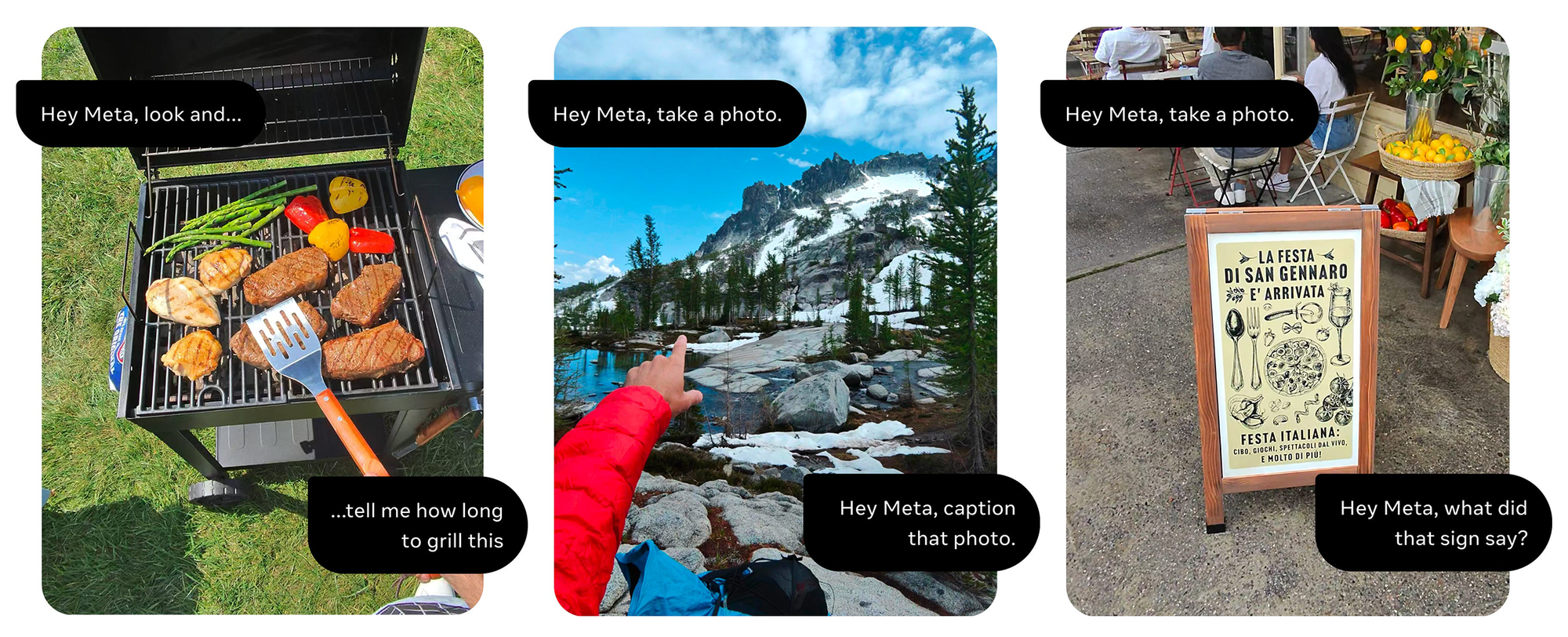

This multimodal capability, called Look and Ask, has many potential use cases, such as answering questions about what you're cooking, suggesting a caption for a photo, or even translating a poster or sign written in another language.

The Look and Ask early access program is available to a “limited number” of owners in the United States and should roll out more widely sometime next year.

Example of Meta AI translating a poster.

Another update coming to Meta AI is real-time search. The system will automatically decide when to search the web using Bing to answer questions about current events, sports scores, and similar topics. This real-time search capability will be “rolled out in phases” to all customers in the United States.

Meanwhile, there is still no official timeline for Meta AI in any of the 14 other countries where the glasses are sold.