There may be legitimate and valuable uses for generative AI

But its interaction with the arts and the practice of artists raises several issues.

I’ve been yawping about this for a minute, and will yawp on anon. Today I wanted to collect a list of the major issues, with links to some of my previous yawps. I hope this is useful in organizing your thinking.

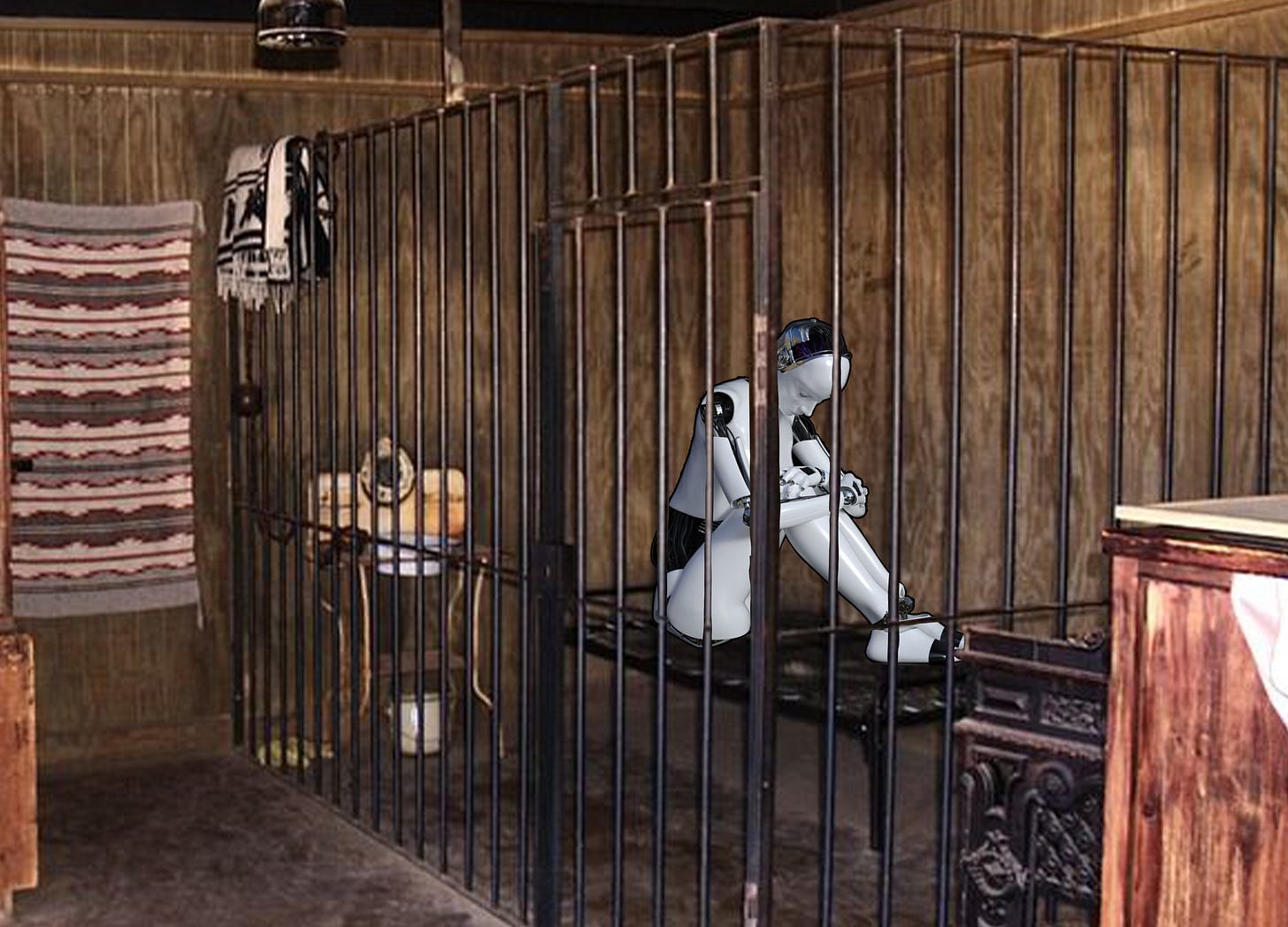

This is the issue that has me return to the topic of Generative AI over and over again. The purpose of art is to make a bridge between the Soul and the world. To do this, an artist must discover and develop their soul, through interior exploration, be it conscious or otherwise. And they must also develop their craft, the skills to take what has been discovered and developed in their soul and make it manifest in the physical world. The use of Generative AI almost completely bypasses both parts of this process. A prompter does not need to develop their soul, and they do not need to exhibit any special craft. What matters is no longer the connection between the Soul and the world, but just the product. Art becomes another commercial enterprise, where we are just trying to get the goods in the most efficient way possible.

In that environment, the Soul is left unattended, and withers and shrinks as a result.

The output of Generative AI is aesthetically bad. When it’s writing, it’s bland and characterless. When it’s visual art, it looks incoherent and uncanny. When it’s music, it fails to engage, or sounds awkward.

What’s more, I think there is something uniquely unpleasant about the way it’s bad. It’s not bad the way the product of an inexperienced artist is bad. It’s bad in its own unique, nauseating way.

I talk about this at length in the podcast episode *AI, Nausea, Postmodernism, Social Media*.

It’s a tool that gives the illusion of being good for making art, but in practice it’s not. This is because there is only a weak connection between the intention of the user and the output. I call this connection Fidelity, and discuss it further in the article *Fidelity of the Tool*.

Generative AI is environmentally expensive. It uses large amounts of power, and also requires large amounts of clean water for cooling.

“It’s estimated that a search driven by generative AI uses four to five times the energy of a conventional web search”

Kate Crawford, in *Nature*: https://www.nature.com/articles/d41586-024-00478-x

While there may be ways to mitigate these issues, as it stands, Generative AI imposes an outsized environmental burden.

As of January 30th, 2025, the Chinese AI product DeepSeek has just made a splash by being significantly cheaper to train than

This is probably the hottest issue amongst artists. The big Generative AI models (DALL-E, Midjourney, ChatGPT, etc.) have been trained on huge amounts of material scraped from the internet. The producers of this material were generally not asked for permission to train these models on their work. Now, in the most extreme cases, people are being replaced by models that were trained on their work without their consent. But less extreme cases still pose an ethical issue. Artists have their unique styles used to produce free imitations of their work. Artists are accused of using GenAI because their work looks like GenAI output, when the resemblance exists only because the model was trained on their art.

There’s a tiny grain of sand in this argument. I give the artists 99.99% of the moral high ground in this fight. The 0.01% on the other side is this: people have been scraping and repurposing content on the internet for years, for engineering, creative, and scientific purposes, and there wasn’t the same outcry. The circumstances are different now because of the use the scraped material is being put to. In general, artists were not upset about consent per se; rather, in hindsight, having seen the impact of these particular scraping projects, they are upset by the lack of consent. So, it’s not that there is no IP issue here; there clearly is. It’s just that the negative reaction is not purely motivated by IP concerns. It also weaponizes those IP concerns in defense of the Labor concerns discussed below. You can tell this is the case because people would not be raising the same fuss if AI companies were scraping their art but the output of the AIs was unusable garbage that no artist would be concerned about being replaced by.

To be clear, I’m on the side of the artists here. Their work has been stolen without their consent, and used to build technology which has the ambition, if not the capability, of replacing them. This is beyond galling, and I completely understand their outrage.

We will see in the long run how disruptive the technology will be to professional artists. It’s actually hard to know what the impact has been so far. I haven’t been able to find any studies published on it yet. All there is are anecdotes about freelancers having a harder time finding clients, or events like the Hasbro layoffs that are inferred to be due to AI.

One other data point is that the use of AI in screenwriting was a central issue in the 2023 Writers Guild (WGA) strike. But this doesn’t mean it will cause disruption, just that the guild members feared it would.

I am slightly skeptical that this will be a major issue long term. My guess is that some companies will try to go down this road, but the products will be bad, and the optics will be bad, and they will lose to people who continue to hire artists. But this is a guess, and if you’re a professional artist, I very much understand why you would be concerned.

Those are the big ones as far as I can see. More might be revealed in the coming years, and of course, each of these can be, and has been, expounded on in much greater detail.

Are there any that I missed? And, if you had to pick, which one is closest to your heart?