GTC Nvidia's Blackwell Ultra and its upcoming Vera and Rubin processors dominated the conversation at the corp's GPU Technology Conference this week. But arguably one of the most important announcements of the annual developer event wasn't a chip at all, but rather a software framework called Dynamo, designed to tackle the challenges of AI inference at scale.

Announced on stage at GTC, it was described by CEO Jensen Huang as the "operating system of an AI factory," and drew comparisons to the real-world dynamo that kicked off an industrial revolution. "The dynamo was the first instrument that started the last industrial revolution," the chief exec said. "The industrial revolution of energy: water comes in, electricity comes out."

At its heart, the open source inference suite is designed to better optimize inference engines such as TensorRT-LLM, SGLang, and vLLM so they run across large numbers of GPUs as quickly and efficiently as possible.

As we have previously discussed, the faster and cheaper you can churn out token after token from a model, the better the experience for users.

Inference is more difficult than it seems

At a high level, LLM output performance can be broken into two broad categories: prefill and decode. Prefill is dictated by how quickly the GPU's matrix math accelerators can process the input prompt. The longer the prompt (a summarization task, say), the longer this typically takes.

Decode, on the other hand, is what most people associate with LLM performance, and equates to how quickly the GPUs can produce the actual tokens in response to the user's prompt.

As long as your GPU has enough memory to fit the model, decode performance is usually a function of how fast that memory is and how many tokens you are generating. A GPU with 8 TB/s of memory bandwidth will churn out tokens more than twice as fast as one with 3.35 TB/s.
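The bandwidth comparison above follows from a common back-of-the-envelope rule: at batch size one, generating each token means streaming roughly all of the model's weights from memory once, so the decode ceiling is about memory bandwidth divided by model size. The sketch below applies that rule of thumb. The 70 GB model size is a hypothetical figure picked for illustration, and real-world throughput will land below this upper bound.

```python
def decode_tokens_per_second(bandwidth_tb_s: float, model_size_gb: float) -> float:
    """Rough upper bound on single-stream decode speed.

    Assumes every generated token requires reading all model weights
    from GPU memory once (a rule of thumb, not a measured figure).
    """
    bytes_per_second = bandwidth_tb_s * 1e12
    model_bytes = model_size_gb * 1e9
    return bytes_per_second / model_bytes


MODEL_GB = 70.0  # hypothetical model footprint, for illustration only

fast = decode_tokens_per_second(8.0, MODEL_GB)
slow = decode_tokens_per_second(3.35, MODEL_GB)
print(f"{fast:.0f} vs {slow:.0f} tokens/s, ratio {fast / slow:.2f}x")
```

Because the model size cancels out, the ratio between the two GPUs is simply 8 / 3.35 whatever model you plug in, which is where "more than twice as fast" comes from.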

Where things start to get complicated is when you begin serving larger models to more people with longer input and output sequences, as you might see in an AI research assistant or a reasoning model.

Large models are typically distributed across multiple GPUs, and the way this is accomplished can have a major impact on performance and throughput, something Huang discussed at length during his keynote.

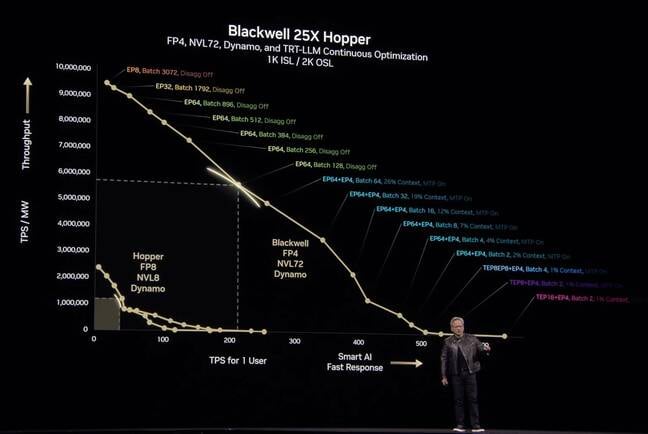

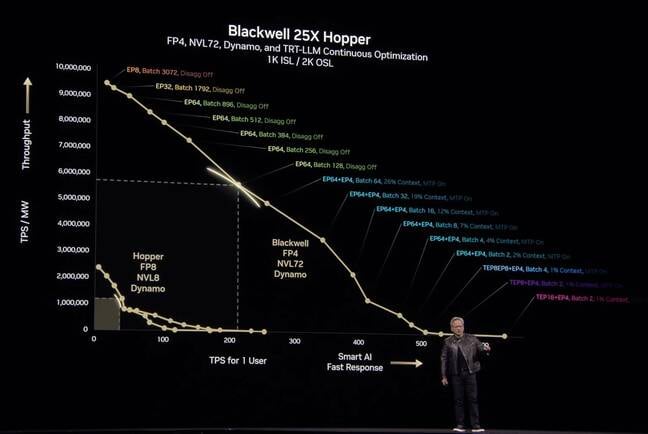

As you can see from this slide from Nvidia CEO Jensen Huang's keynote, inference performance can vary wildly depending on how you distribute a model. The graph plots tokens per second for a single user against overall tokens per second per megawatt.

"Under the Pareto frontier are millions of points we could have configured the datacenter to do. We could have parallelized and split the work and sharded the work in a whole lot of different ways," he said.

What he means is that, depending on how your model is parallelized, you might be able to serve millions of concurrent users, but only at 10 tokens per second each. Meanwhile, another combination might only be able to serve a few thousand concurrent requests, but generate hundreds of tokens in the blink of an eye.

According to Huang, if you can figure out where on this curve your workload delivers the ideal mix of individual performance and maximum possible throughput, you will be able to charge a premium for your service while also driving down operating costs. We imagine this is the balancing act at least some LLM providers perform when scaling their generative applications and services to more and more customers.
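To make the trade-off Huang describes concrete, here is a minimal sketch of picking an operating point from a set of candidate configurations. It keeps only the configurations that no other one beats on both axes (the Pareto frontier), then chooses the highest-throughput one that still meets a per-user speed floor. The configuration names and all numbers are made up for illustration; they are not taken from Nvidia's slide.

```python
from typing import NamedTuple, Optional


class Config(NamedTuple):
    name: str
    tokens_per_user: float  # tokens/s seen by a single user
    tokens_per_mw: float    # aggregate tokens/s per megawatt


def pareto_frontier(configs: list[Config]) -> list[Config]:
    """Return configs not dominated on both axes, sorted by per-user speed."""
    frontier = []
    for c in configs:
        dominated = any(
            o.tokens_per_user >= c.tokens_per_user
            and o.tokens_per_mw >= c.tokens_per_mw
            and (o.tokens_per_user > c.tokens_per_user
                 or o.tokens_per_mw > c.tokens_per_mw)
            for o in configs
        )
        if not dominated:
            frontier.append(c)
    return sorted(frontier, key=lambda c: c.tokens_per_user)


def best_for_target(configs: list[Config],
                    min_tokens_per_user: float) -> Optional[Config]:
    """Highest-throughput frontier config that meets the per-user floor."""
    eligible = [c for c in pareto_frontier(configs)
                if c.tokens_per_user >= min_tokens_per_user]
    return max(eligible, key=lambda c: c.tokens_per_mw) if eligible else None


# Hypothetical parallelism layouts with invented numbers.
CONFIGS = [
    Config("A", 10, 1_000_000),
    Config("B", 50, 600_000),
    Config("C", 40, 500_000),  # beaten by B on both axes
    Config("D", 300, 50_000),
]

print([c.name for c in pareto_frontier(CONFIGS)])
print(best_for_target(CONFIGS, min_tokens_per_user=40))
```

In this toy data set, configuration C never makes sense because B is both faster per user and more efficient per megawatt; everything else is a genuine trade-off.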

Cranking the Dynamo

Finding this happy medium between performance and throughput is one of the key capabilities offered by Dynamo, we are told.

In addition to giving users insight into the ideal mix of expert, pipeline, or tensor parallelism, Dynamo disaggregates prefill and decode onto different accelerators.

According to Nvidia, a GPU planner within Dynamo determines how many accelerators should be dedicated to prefill and to decode based on demand.
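Nvidia has not detailed here how the planner makes that decision, so the following is only a toy illustration of the general idea, not Dynamo's algorithm. It splits a pool of GPUs between the two phases in proportion to their current load while guaranteeing each phase at least one device. The function name and the notion of a single "load" number per phase are assumptions made for this sketch.

```python
def plan_gpu_split(total_gpus: int,
                   prefill_load: float,
                   decode_load: float) -> tuple[int, int]:
    """Split GPUs between prefill and decode in proportion to load.

    Hypothetical helper: 'load' is any non-negative demand estimate,
    for example queued prompt tokens versus tokens awaiting generation.
    Each phase always keeps at least one GPU.
    """
    if total_gpus < 2:
        raise ValueError("need at least one GPU per phase")
    if prefill_load < 0 or decode_load < 0:
        raise ValueError("loads must be non-negative")

    total_load = prefill_load + decode_load
    if total_load == 0:
        prefill = total_gpus // 2  # no demand signal: split evenly
    else:
        prefill = round(total_gpus * prefill_load / total_load)

    # Clamp so neither phase is starved entirely.
    prefill = max(1, min(total_gpus - 1, prefill))
    return prefill, total_gpus - prefill


# Long prompts with short answers push GPUs toward prefill, and vice versa.
print(plan_gpu_split(8, prefill_load=1.0, decode_load=3.0))
```

A real planner would presumably also weigh latency targets and the cost of moving work between pools, which this sketch ignores.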

However, Dynamo isn't just a GPU profiler. The framework also includes prompt routing functionality, which identifies overlapping requests and directs them to specific groups of GPUs to maximize the likelihood of a key-value (KV) cache hit.

If you are not familiar, the KV cache represents the state of the model at any given time. So, if multiple users ask similar questions in short order, the model can pull from this cache rather than recalculating the model state over and over again.
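The routing idea can be sketched in a few lines: send each request to the worker whose previously seen prompts share the longest prefix with the new one, and break ties by picking the least-loaded worker. This is a simplified illustration under stated assumptions, not Dynamo's router. The `PrefixRouter` class, the worker names, and the use of whitespace-split words as stand-ins for tokens are all hypothetical.

```python
def shared_prefix_len(a: list[str], b: list[str]) -> int:
    """Number of leading tokens the two sequences have in common."""
    n = 0
    for x, y in zip(a, b):
        if x != y:
            break
        n += 1
    return n


class PrefixRouter:
    """Toy KV-cache-aware router (hypothetical, for illustration)."""

    def __init__(self, workers: list[str]):
        # Prompts each worker has served, standing in for its KV cache.
        self.cached: dict[str, list[list[str]]] = {w: [] for w in workers}
        self.load: dict[str, int] = {w: 0 for w in workers}

    def route(self, tokens: list[str]) -> str:
        def score(worker: str) -> tuple[int, int]:
            overlap = max(
                (shared_prefix_len(tokens, p) for p in self.cached[worker]),
                default=0,
            )
            # Prefer more overlap first, then lower load.
            return (overlap, -self.load[worker])

        worker = max(self.cached, key=score)
        self.cached[worker].append(list(tokens))
        self.load[worker] += 1
        return worker


router = PrefixRouter(["gpu-0", "gpu-1"])
print(router.route("summarize this report about GPUs".split()))
print(router.route("translate this into French".split()))
print(router.route("summarize this report about CPUs".split()))
```

The third request lands on the same worker as the first because the two prompts share their opening tokens, so that worker is the one most likely to already hold the relevant cached state.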

Alongside the smart router, Dynamo also features a low-latency communication library to speed up GPU-to-GPU data flows, and a memory management subsystem responsible for pushing KV cache data out of HBM to system memory or cold storage, and pulling it back again, to maximize responsiveness and minimize wait times.
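The memory-management behavior described above resembles a tiered cache. As a rough sketch of the concept, the class below keeps a fixed number of KV blocks in a fast tier, offloads the least recently used block to a slower tier when space runs out, and pulls a block back when it is requested again. The `TieredKVCache` name, the two-tier layout, and the least-recently-used policy are assumptions for this example; the source does not say which eviction policy Dynamo uses.

```python
from collections import OrderedDict
from typing import Any, Optional


class TieredKVCache:
    """Toy two-tier cache: a small 'HBM' tier backed by a larger 'host' tier."""

    def __init__(self, hbm_slots: int):
        if hbm_slots < 1:
            raise ValueError("hbm_slots must be at least 1")
        self.hbm_slots = hbm_slots
        self.hbm: OrderedDict[str, Any] = OrderedDict()  # most recent at end
        self.host: dict[str, Any] = {}  # stand-in for system memory or storage

    def put(self, key: str, block: Any) -> None:
        """Place a block in the fast tier, offloading old blocks if needed."""
        self.host.pop(key, None)
        self.hbm[key] = block
        self.hbm.move_to_end(key)
        while len(self.hbm) > self.hbm_slots:
            old_key, old_block = self.hbm.popitem(last=False)
            self.host[old_key] = old_block  # push out to the slower tier

    def get(self, key: str) -> Optional[Any]:
        """Fetch a block, pulling it back into the fast tier on a host hit."""
        if key in self.hbm:
            self.hbm.move_to_end(key)
            return self.hbm[key]
        if key in self.host:
            block = self.host[key]
            self.put(key, block)  # promote back to the fast tier
            return block
        return None  # miss: the state would have to be recomputed


cache = TieredKVCache(hbm_slots=2)
for name in ("a", "b", "c"):
    cache.put(name, name.upper())
print(list(cache.hbm), list(cache.host))
```

The point of the slower tier is that fetching an offloaded block back is still cheaper than recomputing it, which is the trade the article's "maximize responsiveness" claim rests on.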

For Hopper-based systems running Llama models, Nvidia claims Dynamo can effectively double inference performance. Meanwhile, for larger Blackwell NVL72 systems, the GPU giant claims a 30x advantage in DeepSeek-R1 over Hopper with the framework enabled.

Broad compatibility

Although Dynamo is obviously tuned for Nvidia's hardware and software stacks, much like the Triton Inference Server it replaces, the framework is designed to integrate with popular software libraries for model serving, such as vLLM, PyTorch, and SGLang.

This means that if you work in a heterogeneous compute environment that also contains a number of AMD or Intel accelerators alongside your Nvidia GPUs, you do not need to qualify and maintain yet another inference engine, and can instead stick with vLLM or SGLang if that is what you already use.

Obviously, Dynamo will not work with AMD or Intel hardware, but it will run on any Nvidia GPU going back to Ampere. So, if you are still chugging along on a bunch of A100s, you can still benefit from Nvidia's new AI software.

Nvidia has already published instructions for getting up and running with Dynamo on GitHub, and will also offer the framework as a container image, or NIM as the company has taken to calling them, to ease deployment. ®
