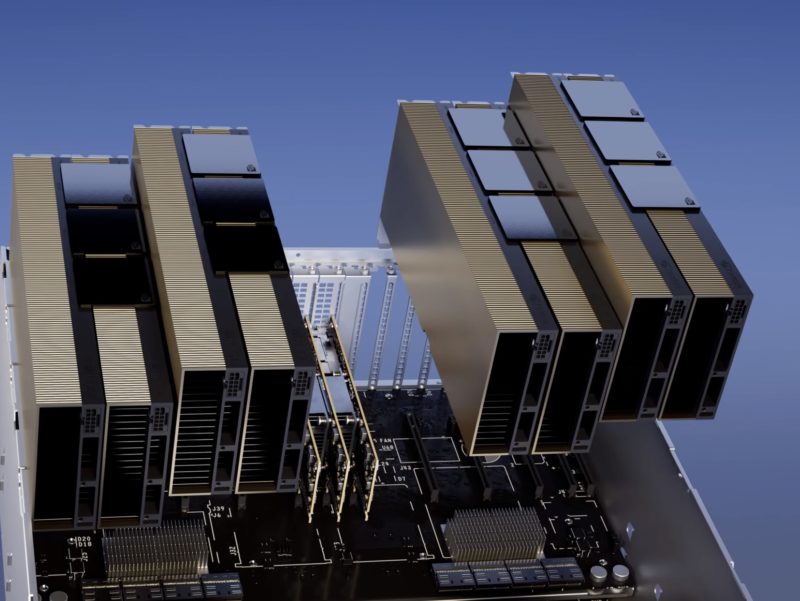

When the NVIDIA H100 NVL 188GB solution was first launched, it was shown as a two-card solution with 188GB of HBM3 memory. At the time, we noted that it was essentially two PCIe cards linked by an NVLink bridge. Then the 4-way NVIDIA H200 NVL solution was shown at OCP Summit 2024. One thing our coverage has consistently shown is the NVL cards installed with NVLink bridges. A reasonable question is whether the cards can work on their own, so we tested it.

Can you run the NVIDIA H100 NVL 94GB PCIe as a single GPU?

The original NVIDIA H100 PCIe option was the 80GB HBM2e card. This card has NVLink bridge connectors on it.

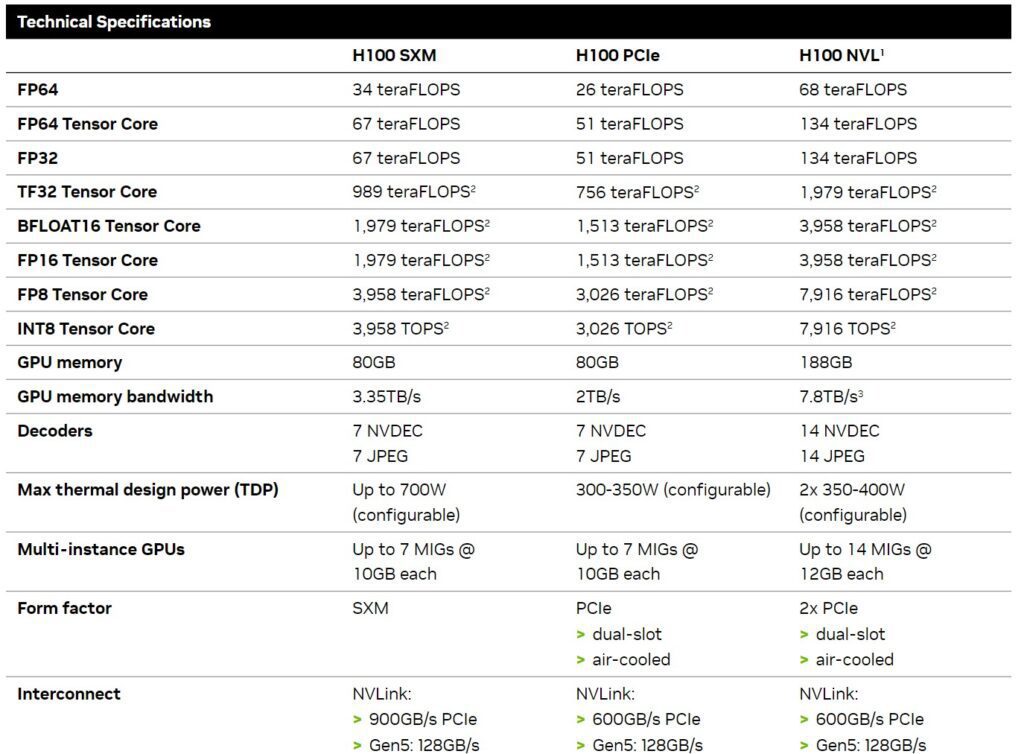

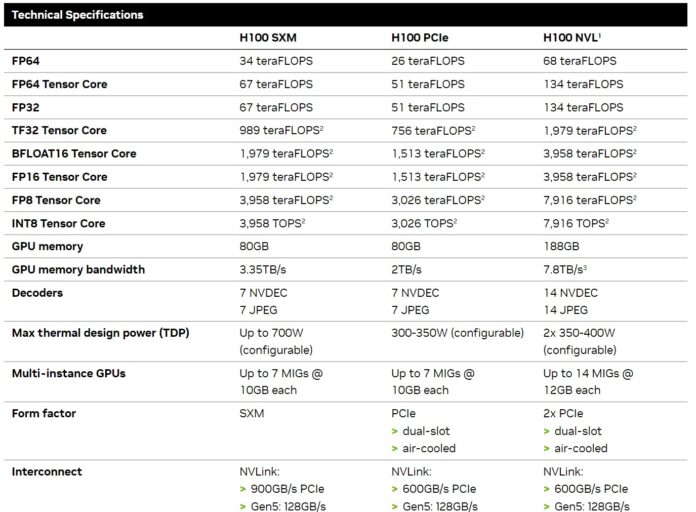

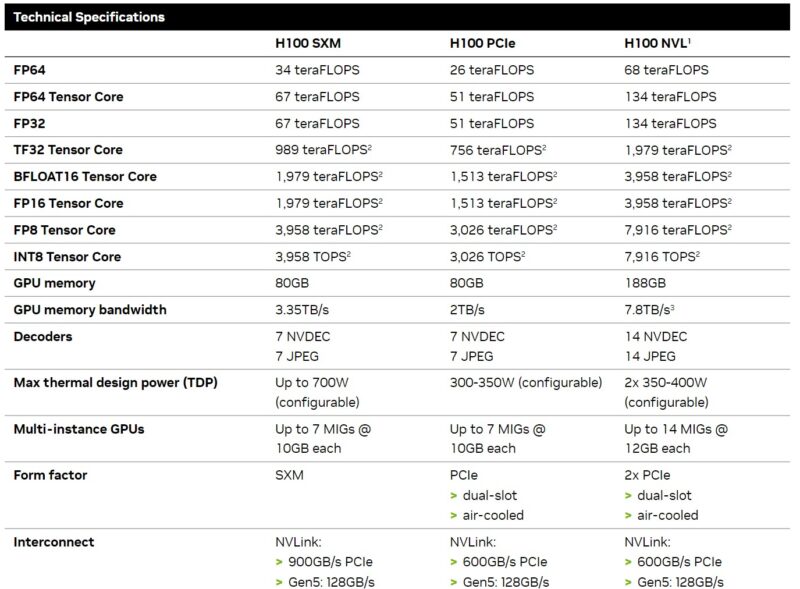

As NVIDIA moved toward the H200, the PCIe card was revised to become the NVIDIA H100 NVL with 94GB of HBM3 memory. This is notable because the SXM version of the H100 still has 80GB of memory. However, this H100 NVL solution was almost always marketed with at least two GPUs. Here is the H100 spec table from when we did our L40 and H100 piece.

You will notice that the H100 NVL was shown as two cards here, and it is almost always shown as two cards with NVLink bridges.

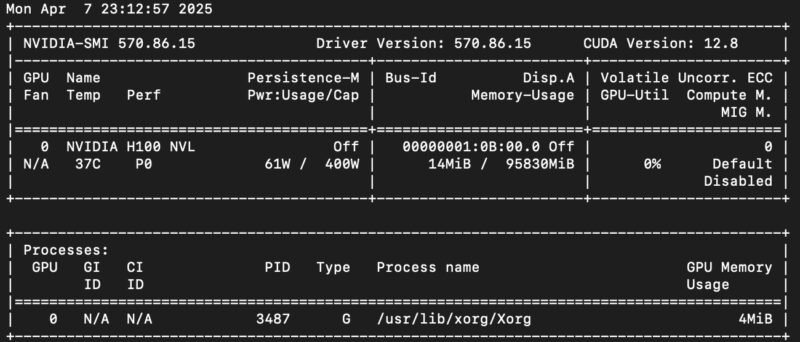

However, it seemed like it should work if you wanted just a single GPU, perhaps for a 1U or 2U server that can fit one double-width PCIe card but not two bridged cards. We recently tried it, and indeed, here is the nvidia-smi output:

This is exactly what we expected. For people buying servers with a single GPU, the H100 NVL is a significant memory upgrade over the non-NVL H100. In some cases, such as raw memory bandwidth or MIG instances, the NVL version can also be better than the SXM version, and at a lower power level.

Final words

In the NVIDIA GTC 2025 keynote (Ryan Smith covered it for STH), NVIDIA CEO Jensen Huang said that the Hopper generation was no longer worth buying. This could mean that H100-generation GPUs become more available on the secondary market. That is especially true now that the H200 NVL refresh has been released with 141GB of memory, making the H100 NVL an older generation. For those looking to use a single PCIe GPU in a system, the H100 NVL could be an interesting option today, or in the future as Blackwell continues to roll out.

It seems almost silly to publish, but so many online resources show these cards only installed in pairs that we thought we should quickly set the record straight.