AI, particularly generative AI, has captured a large share of the attention in the collaboration and communications world over the past two years. Nearly every vendor in the market now has a generative AI offering, and most focus the bulk of their competitive differentiation strategy on their AI capabilities. The goal is to drive customer adoption.

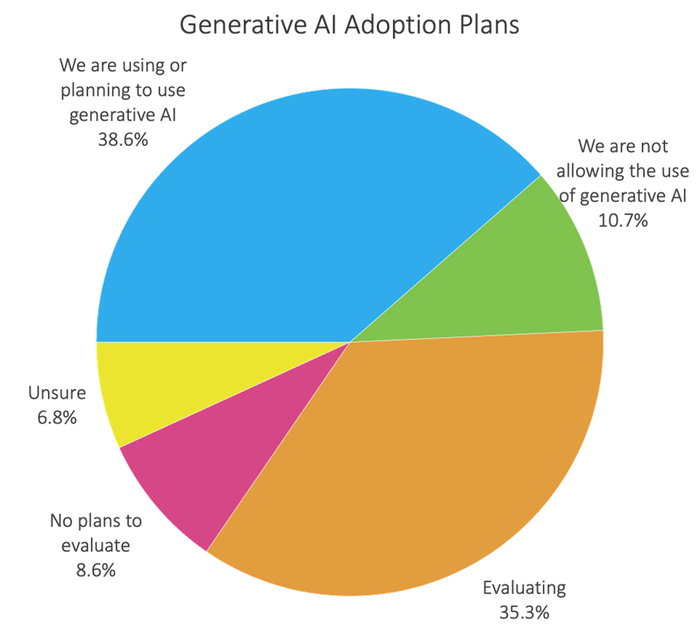

Among the 338 participants in Metrigy's recently published Workplace Collaboration and Contact Center Security and Compliance: 2024-25 study, nearly 39% have already deployed generative AI or plan to do so by the end of this year (2024). An additional 35.3% are evaluating a potential future deployment. Among those with the highest return on investment (ROI) for their collaboration spending, more than 54% use or plan to use generative AI collaboration tools such as Google Gemini, Microsoft Copilot and Zoom AI Companion.

Security concerns holding some back

Within our participant pool, nearly 20% said their organizations don't allow, or don't plan to evaluate, generative AI collaboration tools. There are several reasons, including a lack of ROI or perceived benefits, but at the top of the list are concerns about generative AI privacy, security and compliance. Specific concerns include:

- How to ensure the accuracy of responses and protect language models against hallucination and poisoning (accidental or deliberate)?
- How to protect customer information stored in large language models (LLMs)?
- How to ensure that an LLM implementation doesn't create data-leakage problems, potentially exposing sensitive data and content to people beyond those authorized to access it?
- How to ensure compliance for AI-generated content, including summaries, transcriptions, images, text and other types of documents?
- How to ensure the accuracy of transcriptions and summaries?

Missing generative AI strategies

Unfortunately, only 38.5% of companies currently adopting generative AI collaboration tools have developed and implemented a formal security strategy around them. That number is much higher among those with the highest collaboration ROI in our study: in this group, more than 51% of those using generative AI have a security and compliance strategy in place. This indicates a clear correlation between high ROI and a proactive approach to generative AI security and compliance.

Among those with a strategy in place today, testing the accuracy of AI responses is the primary focus. The widely publicized 2023 story of an airline's AI chatbot inventing a frequent-flyer benefit has led many organizations to perform due diligence on generative AI chatbot responses to ensure accuracy. This has driven growing interest in techniques such as retrieval-augmented generation (RAG), which allows chatbots to reference company-curated knowledge bases beyond the model's training data sources and to cite those sources when they answer questions.
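To make the RAG idea concrete, here is a minimal sketch of the retrieval-and-citation step. It is illustrative only. It assumes a tiny in-memory knowledge base and uses simple word overlap as a stand-in for the embedding search a production system would typically use. All names (`Passage`, `retrieve`, `build_prompt`) and the sample sources are hypothetical. The sketch also does not call any real model API. It only builds the grounded prompt that would be sent to one.

```python
from __future__ import annotations

from dataclasses import dataclass

PUNCT = ".,?!:;"


@dataclass
class Passage:
    source: str  # hypothetical knowledge-base article ID or URL
    text: str


def tokenize(text: str) -> set[str]:
    # Lowercased word set; a stand-in for embedding-based similarity.
    return {w.strip(PUNCT).lower() for w in text.split() if w.strip(PUNCT)}


def retrieve(question: str, kb: list[Passage], k: int = 2) -> list[Passage]:
    """Return up to k passages sharing the most words with the question."""
    q = tokenize(question)
    scored = [(len(q & tokenize(p.text)), p) for p in kb]
    # Discard passages with no overlap, then sort best-first (stable on ties).
    scored = [sp for sp in scored if sp[0] > 0]
    scored.sort(key=lambda sp: sp[0], reverse=True)
    return [p for _, p in scored[:k]]


def build_prompt(question: str, passages: list[Passage]) -> str:
    """Ground the model in retrieved passages and ask it to cite them."""
    context = "\n".join(
        f"[{i}] ({p.source}) {p.text}" for i, p in enumerate(passages, 1)
    )
    return (
        "Answer using ONLY the numbered sources below and cite them like [1].\n"
        "If the sources do not contain the answer, say you don't know.\n\n"
        f"Sources:\n{context}\n\nQuestion: {question}"
    )


if __name__ == "__main__":
    kb = [
        Passage("policy/refunds", "Refunds are issued within 30 days of purchase."),
        Passage("policy/loyalty", "Loyalty points expire after 24 months of inactivity."),
        Passage("it/vpn", "Connect to the VPN before accessing internal tools."),
    ]
    question = "When do loyalty points expire?"
    print(build_prompt(question, retrieve(question, kb)))
```

The instruction to answer only from the numbered sources and to cite them is the part that supports the accuracy testing described above. Reviewers can check each cited answer against the company's own content rather than the model's training data.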

Another common component of a generative AI security and compliance strategy is ensuring that generative AI language models respect document classifications to control access to content, including the ability to classify AI-generated content. Those with generative AI security and compliance plans also often ensure that generated content is retained in accordance with compliance requirements.
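One way to picture "respecting document classifications" is as a filter applied before the model ever sees content, plus a label applied to what it produces. The sketch below is a hypothetical illustration, not a description of how any vendor implements this. It assumes an ordered, four-level labeling scheme (`PUBLIC` through `RESTRICTED`) and a "high-water mark" rule. Under that rule, an AI-generated summary inherits the most sensitive label among its sources, which is one common approach among several.

```python
from __future__ import annotations

from dataclasses import dataclass
from enum import IntEnum


class Classification(IntEnum):
    # Hypothetical ordered labels; real schemes vary by organization.
    PUBLIC = 0
    INTERNAL = 1
    CONFIDENTIAL = 2
    RESTRICTED = 3


@dataclass
class Document:
    doc_id: str
    label: Classification
    text: str


def authorized(docs: list[Document], clearance: Classification) -> list[Document]:
    """Keep only documents the requesting user is cleared to read."""
    return [d for d in docs if d.label <= clearance]


def label_output(sources: list[Document]) -> Classification:
    """High-water mark: generated content takes the most sensitive source label."""
    return max((d.label for d in sources), default=Classification.PUBLIC)


if __name__ == "__main__":
    docs = [
        Document("press-release", Classification.PUBLIC, "..."),
        Document("team-notes", Classification.INTERNAL, "..."),
        Document("merger-plan", Classification.CONFIDENTIAL, "..."),
    ]
    allowed = authorized(docs, Classification.INTERNAL)
    print([d.doc_id for d in allowed], label_output(allowed).name)
```

Filtering before summarization addresses the data-leakage concern, because the model cannot surface content that the requesting user could not open directly. Labeling the output gives retention and access policies something to act on.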

Finally, about half of the companies in our study require their vendors to support a federated generative AI model, in which company data can reside in an organization's own language models, potentially improving data security, compliance and privacy. In addition, about 42% require vendors to offer localized data storage to meet specific regional regulatory requirements, such as GDPR in the EU.

Success requires a CISO and collaboration partnership

Among those with the highest ROI for their collaboration spending, nearly 74% involve their chief information security officer (CISO) in generative AI security and compliance (versus just under 68% of participants outside that group). Other groups involved in security and compliance include application owners, as well as a dedicated AI team if one exists.

Generative AI security concerns will likely extend beyond collaboration into other applications, such as CRM and HR, that can also take advantage of generative AI. Many companies are also building their own generative AI platforms today for a variety of use cases, using developer-focused offerings from Google, Microsoft, OpenAI and others. As such, generative AI security and compliance strategies for collaboration must be closely integrated with overall generative AI security and compliance strategies across all other application areas.

Conclusion

Generative AI security and compliance issues should not be ignored. Organizations that take a proactive approach to ensuring the security and compliance of their generative AI tools show a higher overall ROI for their collaboration spending. Success requires ensuring that generative AI capabilities are tested, protected against poisoning and able to meet compliance requirements. Building and implementing a generative AI security and compliance strategy requires close coordination between the CISO and the respective application management teams.

About Metrigy: Metrigy is an innovative research and advisory firm focusing on the rapidly changing areas of workplace collaboration, digital workplace, digital transformation, customer experience and employee experience, along with several related technologies. Metrigy delivers strategic guidance and informative content, backed by primary research metrics and analysis, for technology providers and enterprise organizations.