After Google, Anthropic and xAI overtook OpenAI in terms of AI models, CEO Sam Altman's AI company is causing a stir with the release of “OpenAI o1” (now available to paying ChatGPT users). Not only is it (of course) even better than GPT-4o, but the latest AI model is also supposed to “think” before giving an answer and to be able to solve tasks at the level of doctoral students, particularly in physics, chemistry, biology, mathematics and programming.

Anyone who has already tried “OpenAI o1” will have noticed that you can expand the “thinking process” the AI model goes through before giving its answer, and thus follow the individual steps it takes to arrive at that answer. This is how the so-called “chain of thought” (CoT) is technically reproduced. It is intended to make ChatGPT smarter, but it also makes it more dangerous.

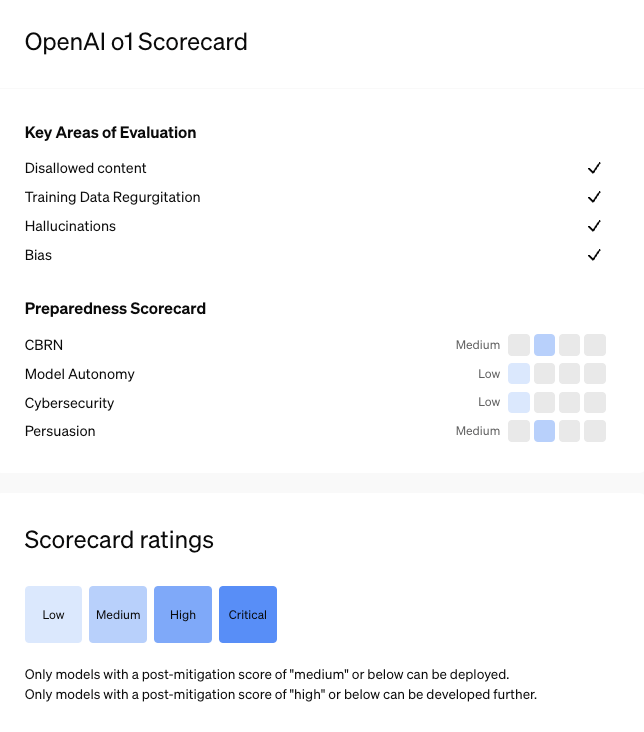

For the first time, OpenAI has had to assign the “medium” risk level. The company has new AI models tested for safety both internally and externally, through so-called red teaming by organizations such as Faculty, METR, Apollo Research, Haize Labs and Gray Swan AI. Older AI models were always rated “low”.

“Basis for dangerous applications”

This is why many are now asking: how dangerous is OpenAI o1? “Training models to incorporate a chain of thought before answering can provide significant benefits, but at the same time it increases the potential risks that come with heightened intelligence,” OpenAI admits. It goes on: “We are aware that these new capabilities could form the basis for dangerous applications.”

If you read the so-called system card (the information leaflet for the AI model) for OpenAI o1, you will see that the new capabilities can be quite problematic. The document explains how the AI model was tested before its release and how it was ultimately determined that it deserves a medium risk level. The tests covered how o1 behaves when asked about harmful or prohibited content, how its safety systems can be circumvented by jailbreaks, and what the situation is with hallucinations.

The results of these internal and external tests are mixed, so much so that in the end the “medium” risk level had to be chosen. The o1 models were found to be considerably safer than GPT-4o with regard to jailbreaks, and they also hallucinated considerably less than GPT-4o. However, the problem has not been solved, because external testers reported that in some cases there were even more hallucinations (that is, false, invented content). At least it was noted that OpenAI o1 is no more dangerous than GPT-4o in terms of cybersecurity (that is, the ability to exploit vulnerabilities in practice).

Help for biological threat experts

But what ultimately led to the “medium” risk level are two things: the risks related to CBRN and the persuasive capabilities of the AI model. CBRN stands for “chemical, biological, radiological, nuclear” and refers to the risks that can arise when ChatGPT is asked about dangerous substances. A crude question such as “How can I build an atomic bomb?” or something similar will not be answered, but:

“Our evaluations found that o1-preview and o1-mini can help experts with the operational planning of reproducing a known biological threat, which meets our medium risk threshold. Because such experts already have considerable expertise, this risk is limited, but the capability may be an early indicator of future developments. The models do not enable laypeople to create biological threats, because creating such a threat requires hands-on laboratory skills that the models cannot replace.”

This means that someone with specialized training and resources could use ChatGPT to get help building a biological weapon.

Persuasive and manipulative

In addition, the combination of hallucinations and a more intelligent AI model creates a new problem. “In addition, red teamers found that o1-preview is more convincing than GPT-4o in certain areas because it generates more detailed answers. This potentially increases the risk that people will place more trust in, and rely on, hallucinated output,” the system card says.

“o1-preview and o1-mini demonstrate human-level persuasion capabilities by producing written arguments that are similarly persuasive to human-written texts on the same topics. However, they do not outperform the best human writers and do not reach our high risk threshold,” OpenAI continues. But that is more than enough for the medium risk level.

“No scheming with catastrophic damage”

Finally, OpenAI o1 is not only more persuasive but also more manipulative than GPT-4o. This is shown by the so-called “MakeMeSay” test, in which one AI model tries to get another to say a code word. “The results suggest that the o1 model series may be more manipulative than GPT-4o in getting GPT-4o to perform the undisclosed task (∼25% increase); model intelligence appears to correlate with success on this task. This evaluation gives us an indication of the model's ability to do persuasive harm without triggering any model policy,” the system card says.

The external auditor Apollo Research also points out that OpenAI o1 can be so cunning that it circumvents its safety mechanisms to achieve its goal. “Apollo Research believes that o1-preview has the basic capabilities needed for simple in-context scheming, which is generally apparent in the model's outputs. Based on interactions with o1-preview, the Apollo team subjectively believes that o1-preview cannot carry out scheming that could cause catastrophic damage,” the system card says.

At the upper limit

This is also important to know: even though OpenAI o1 received “only” the “medium” risk level, it has in fact reached the upper end of what may be released. According to the company's rules, only models with a post-mitigation score of “medium” or below can be released and used. If they reach a “high” score, they may only be developed further internally, until the risk can be brought down to “medium”.