Meta has announced its last big AI update for the year, with CEO Mark Zuckerberg launching a new 70 billion parameter Llama 3.3 model, which he says performs almost as well as Meta’s 405 billion parameter model, but much more efficiently.

The new model will expand the use cases for Meta’s Llama system and allow more developers to build on Meta’s open source AI protocols, which have already seen significant adoption.

Zuckerberg says that Llama is now the most adopted AI model in the world, with over 650 million downloads. Meta is committed to opening up its AI tools to facilitate innovation, but this will also ensure that Meta becomes the backbone of many other AI projects, which could give it more market power in the long term.

The same goes for virtual reality, where Meta is also looking to consolidate its leadership. By working with third parties, Meta can expand its offerings on both fronts, while making its tools the market standard for the next stage of digital connectivity.

Zuckerberg also outlined Meta’s plans for a new AI data center in Louisiana, and the company is exploring a new undersea cabling project. Zuck also noted that Meta AI remains on track to become the most used AI assistant in the world, with 600 million monthly active users.

Although that might be a bit misleading. Meta has more than 3 billion users across its family of apps (Facebook, Instagram, Messenger, and WhatsApp), and it has integrated its AI assistant into each of them, while also inviting users to generate AI images in every application.

So it’s no surprise that Meta AI has over 600 million users. What would be interesting would be data on how much time each person spends chatting with their AI bot and how often they return to it.

Because I don’t really see a solid use case for AI assistants in social apps. Sure, you can generate an image, but it isn’t real and isn’t an expression of a real thing you experienced. You can ask Meta AI questions, but I doubt that will have huge appeal for most regular users.

Regardless, Meta is committed to making its AI tools a thing, although I suspect, again, that the real value of these to the company will come in the next step, when virtual reality becomes a more important consideration for more users.

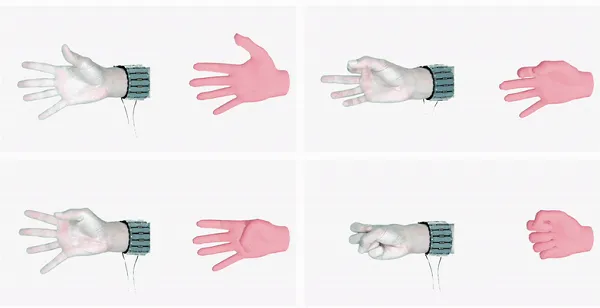

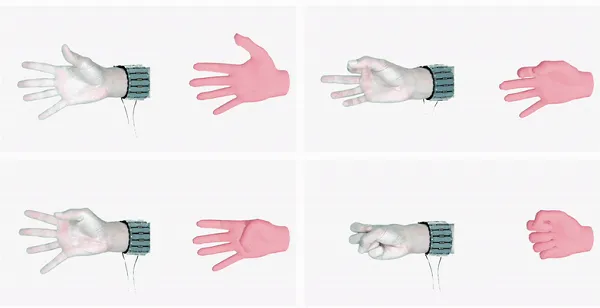

On this front, Meta has also moved to the next stage of testing for its wrist-worn surface electromyography (sEMG) device, which measures muscle activity from electrical signals in the wrist to enable more intuitive control.

This could be a significant step forward for Meta’s wearables push, both for AR and VR applications. And when you consider all of Meta’s different projects as contributing to this next major change, they all start to make a lot more sense.

Essentially, I don’t think any of Meta’s projects are ends in themselves at this point. Instead, they all guide users to the next level, where Meta seems more and more ready to dominate.