Fjelland, R. Why general artificial intelligence will not be realized. Hum. Soc. Sci. Commun. 7(1), 1–9 (2020).

Yampolskiy, R. On the differences between human and machine intelligence. In AISafety@IJCAI (2021).

Hellström, T. & Bensch, S. Apocalypse now: no need for artificial general intelligence. AI Soc. 39(2), 811–813 (2024).

Barrett, A. M. & Baum, S. D. A model of pathways to artificial superintelligence catastrophe for risk and decision analysis. J. Exp. Theor. Artif. Intell. 29(2), 397–414 (2017).

Gill, K. S. Artificial super intelligence: beyond rhetoric. AI Soc. 31, 137–143 (2016).

Pueyo, S. Growth, degrowth, and the challenge of artificial superintelligence. J. Clean. Prod. 197, 1731–1736 (2018).

Saghiri, A. M., Vahidipour, S. M., Jabbarpour, M. R., Sookhak, M. & Forestiero, A. A survey of artificial intelligence challenges: Analyzing the definitions, relationships, and evolutions. Appl. Sci. 12(8), 4054 (2022).

Ng, G. W. & Leung, W. C. Strong artificial intelligence and consciousness. J. Artif. Intell. Conscious. 7(01), 63–72 (2020).

Yampolskiy, R. V. AGI control theory. In Artificial General Intelligence: 14th International Conference, AGI 2021, Palo Alto, CA, USA, October 15–18, 2021, Proceedings 14 (pp. 316–326). Springer International Publishing (2022a).

Yampolskiy, R. V. On the controllability of artificial intelligence: An analysis of limitations. J. Cyber Secur. Mob. 321–404 (2022b).

Ramamoorthy, A. & Yampolskiy, R. Beyond mad? The race for artificial general intelligence. ITU J. 1(1), 77–84 (2018).

Cao, L. AI4Tech: X-AI enabling X-Tech with human-like, generative, decentralized, humanoid and metaverse AI. Int. J. Data Sci. Anal. 18(3), 219–238 (2024).

Goertzel, B. Artificial general intelligence and the future of humanity. The transhumanist reader: Classical and contemporary essays on the science, technology, and philosophy of the human future 128–137 (2013).

Li, X., Zhao, L., Zhang, L., Wu, Z., Liu, Z., Jiang, H. & Shen, D. Artificial general intelligence for medical imaging analysis. IEEE Rev. Biomed. Eng. (2025).

Rathi, S. Approaches to artificial general intelligence: An analysis (2022). arXiv preprint arXiv:2202.03153.

Arora, A. & Arora, A. The promise of large language models in health care. The Lancet 401(10377), 641 (2023).

Bikkasani, D. C. Navigating artificial general intelligence (AGI): Societal implications, ethical considerations, and governance strategies. AI Ethics 1–16 (2024).

Li, J. X., Zhang, T., Zhu, Y. & Chen, Z. Artificial general intelligence for the upstream geoenergy industry: A review. Gas Sci. Eng. 205469 (2024).

Nedungadi, P., Tang, K. Y. & Raman, R. The transformative power of generative artificial intelligence for achieving the sustainable development goal of quality education. Sustainability 16(22), 9779 (2024).

Zhu, X., Chen, S., Liang, X., Jin, X. & Du, Z. Next-generation generalist energy artificial intelligence for navigating smart energy. Cell Rep. Phys. Sci. 5(9), 102192 (2024).

Faroldi, F. L. Risk and artificial general intelligence. AI Soc. 1–9 (2024).

Simon, C. J. Ethics and artificial general intelligence: technological prediction as a groundwork for guidelines. In 2019 IEEE International Symposium on Technology and Society (ISTAS) 1–6 (IEEE, 2019).

Chouard, T. The Go files: AI computer wraps up 4–1 victory against human champion. Nat. News 20, 16 (2016).

Morris, M. R., Sohl-Dickstein, J. N., Fiedel, N., Warkentin, T. B., Dafoe, A., Faust, A., Farabet, C. & Legg, S. Position: Levels of AGI for operationalizing progress on the path to AGI. In International Conference on Machine Learning (2023).

Weinbaum, D. & Veitas, V. Open ended intelligence: The individuation of intelligent agents. J. Exp. Theor. Artif. Intell. 29(2), 371–396 (2017).

Sublime, J. The AI race: Why current neural network-based architectures are a poor basis for artificial general intelligence. J. Artif. Intell. Res. 79, 41–67 (2024).

Wickramasinghe, B., Saha, G. & Roy, K. Continual learning: A review of techniques, challenges and future directions. IEEE Trans. Artif. Intell. (2023).

Fei, N. et al. Toward artificial general intelligence via a multimodal foundation model. Nat. Commun. 13(1), 3094 (2022).

Kelley, D. & Atreides, K. AGI protocol for the ethical treatment of artificial general intelligence systems. Procedia Comput. Sci. 169, 501–506 (2020).

McCormack, J. Autonomy, intention, performativity: Navigating the AI divide. In Choreomata (pp. 240–257). Chapman and Hall/CRC (2023).

Khamassi, M., Nahon, M. & Chatila, R. Strong and weak alignment of large language models with human values. Sci. Rep. 14(1), 19399 (2024).

McIntosh, T. R., Susnjak, T., Liu, T., Watters, P., Ng, A. & Halgamuge, M. N. A game-theoretic approach to containing artificial general intelligence: Insights from highly autonomous aggressive malware. IEEE Trans. Artif. Intell. (2024).

Salmi, J. A democratic way of controlling artificial general intelligence. AI Soc. 38(4), 1785–1791 (2023).

Bubeck, S., Chandrasekaran, V., Eldan, R., Gehrke, J., Horvitz, E., Kamar, E. & Zhang, Y. Sparks of artificial general intelligence: Early experiments with GPT-4 (2023). arXiv preprint arXiv:2303.12712.

Faraboschi, P., Frachtenberg, E., Laplante, P., Milojicic, D. & Saracco, R. Artificial general intelligence: Humanity’s downturn or unlimited prosperity. Computer 56(10), 93–101 (2023).

Wei, Y. Several important ethical issues concerning artificial general intelligence. Chin. Med. Ethics 37(1), 1–9 (2024).

McLean, S. et al. The risks associated with artificial general intelligence: A systematic review. J. Exp. Theor. Artif. Intell. 35(5), 649–663 (2023).

Shalaby, A. Digital sustainable growth model (DSGM): Achieving synergy between economy and technology to mitigate AGI risks and address Global debt challenges. J. Econ. Technol. (2024a).

Croeser, S. & Eckersley, P. Theories of parenting and their application to artificial intelligence. In Proceedings of the 2019 AAAI/ACM Conference on AI, Ethics, and Society (pp. 423–428) (2019).

Lenharo, M. What should we do if AI becomes conscious? These scientists say it is time for a plan. Nature (2024). https://doi.org/10.1038/d41586-024-04023-8

Shankar, V. Managing the twin faces of AI: A commentary on “Is AI changing the world for better or worse?”. J. Macromark. 44(4), 892–899 (2024).

Wu, Y. Future of information professions: Adapting to the AGI era. Sci. Tech. Inf. Process. 51(3), 273–279 (2024).

Sukhobokov, A., Belousov, E., Gromozdov, D., Zenger, A. & Popov, I. A universal knowledge model and cognitive architecture for prototyping AGI (2024). arXiv preprint arXiv:2401.06256.

Salmon, P. M. et al. Managing the risks of artificial general intelligence: A human factors and ergonomics perspective. Hum. Fact. Ergon. Manuf. Serv. Ind. 33(5), 366–378 (2023).

Bory, P., Natale, S. & Katzenbach, C. Strong and weak AI narratives: An analytical framework. AI Soc. 1–11 (2024).

Gai, F. When artificial intelligence meets Daoism. In Intelligence and Wisdom: Artificial Intelligence Meets Chinese Philosophers 83–100 (2021).

Menaga, D. & Saravanan, S. Application of artificial intelligence in the perspective of data mining. In Artificial Intelligence in Data Mining (pp. 133–154). Academic Press (2021).

Adams, S., Arel, I., Bach, J., Coop, R., Furlan, R., Goertzel, B. & Sowa, J. Mapping the landscape of human-level artificial general intelligence. AI Mag. 33(1), 25–42 (2012).

Beerends, S. & Aydin, C. Negotiating the authenticity of AI: how the discourse on AI rejects human indeterminacy. AI Soc. 1–14 (2024).

Besold, T. R. Human-level artificial intelligence must be a science. In Artificial General Intelligence: 6th International Conference, AGI 2013, Beijing, China, July 31–August 3, 2013 Proceedings 6 (pp. 174–177). Springer Berlin Heidelberg (2013).

Eth, D. The technological landscape affecting artificial general intelligence and the importance of nanoscale neural probes. Informatica 41(4) (2017).

Nvs, B. & Saranya, P. L. Water pollutants monitoring based on Internet of Things. In Inorganic Pollutants in Water (pp. 371–397). Elsevier (2020).

Flowers, J. C. Strong and weak AI: Deweyan considerations. In AAAI Spring Symposium: Toward Conscious AI Systems (Vol. 2287, No. 7) (2019).

Mitchell, M. Debates on the nature of artificial general intelligence. Science 383(6689), eado7069 (2024).

Grech, V. & Scerri, M. Evil doctor, ethical android: Star Trek’s instantiation of conscience in subroutines. Early Hum. Dev. 145, 105018 (2020).

Noller, J. Extended human agency: Towards a teleological account of AI. Hum. Soc. Sci. Commun. 11(1), 1–7 (2024).

Nominacher, M. & Peletier, B. Artificial intelligence policies. The digital factory for knowledge: Production and validation of scientific results 71–76 (2018).

Isaac, M., Akinola, O. M., & Hu, B. Predicting the trajectory of AI utilizing the Markov model of machine learning. In 2023 IEEE 3rd International Conference on Computer Communication and Artificial Intelligence (CCAI) (pp. 30–34). IEEE (2023).

Stewart, W. The human biological advantage over AI. AI Soc. 1–10 (2024).

Vaidya, A. J. Can machines have emotions? AI Soc. 1–16 (2024).

Triguero, I., Molina, D., Poyatos, J., Del Ser, J. & Herrera, F. General purpose artificial intelligence systems (GPAIS): Properties, definition, taxonomy, societal implications and responsible governance. Inf. Fus. 103, 102135 (2024).

Bécue, A., Gama, J. & Brito, P. Q. AI’s effect on innovation capacity in the context of industry 5.0: A scoping review. Artif. Intell. Rev. 57(8), 215 (2024).

Yue, Y. & Shyu, J. Z. A paradigm shift in crisis management: The nexus of AGI-driven intelligence fusion networks and blockchain trustworthiness. J. Conting. Crisis Manag. 32(1), e12541 (2024).

Chiroma, H., Hashem, I. A. T. & Maray, M. Bibliometric analysis for artificial intelligence in the internet of medical things: Mapping and performance analysis. Front. Artif. Intell. 7, 1347815 (2024).

Wang, Z., Chen, J., Chen, J. & Chen, H. Identifying interdisciplinary topics and their evolution based on BERTopic. Scientometrics https://doi.org/10.1007/s11192-023-04776-5 (2023).

Wang, J., Liu, Z., Zhao, L., Wu, Z., Ma, C., Yu, S. & Zhang, S. Review of large vision models and visual prompt engineering. Meta-Radiol. 100047 (2023).

Daase, C. & Turowski, K. Conducting design science research in society 5.0—Proposal of an explainable artificial intelligence research methodology. In International Conference on Design Science Research in Information Systems and Technology (pp. 250–265). Springer, Cham (2023).

Yang, L., Gong, M. & Asari, V. K. Diagram image retrieval and analysis: Challenges and opportunities. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition Workshops (pp. 180–181) (2020).

Krishnan, B., Arumugam, S. & Maddulety, K. ‘Nested’ disruptive technologies for smart cities: Effects and challenges. Int. J. Innov. Technol. Manag. 17(05), 2030003 (2020).

Krishnan, B., Arumugam, S. & Maddulety, K. Impact of disruptive technologies on smart cities: challenges and benefits. In International Working Conference on Transfer and Diffusion of IT (pp. 197–208). Cham: Springer International Publishing (2020).

Long, L. N. & Cotner, C. F. A review and proposed framework for artificial general intelligence. In 2019 IEEE Aerospace Conference (pp. 1–10). IEEE (2019).

Everitt, T., Lea, G. & Hutter, M. AGI safety literature review (2018). arXiv preprint arXiv:1805.01109.

Wang, P. & Goertzel, B. Introduction: Aspects of artificial general intelligence. In Advances in Artificial General Intelligence: Concepts, Architectures and Algorithms (pp. 1–16). IOS Press (2007).

Yampolskiy, R. & Fox, J. Safety engineering for artificial general intelligence. Topoi 32, 217–226 (2013).

Dushkin, R. V. & Stepankov, V. Y. Hybrid bionic cognitive architecture for artificial general intelligence agents. Procedia Comput. Sci. 190, 226–230 (2021).

Nyalapelli, V. K., Gandhi, M., Bhargava, S., Dhanare, R. & Bothe, S. Review of progress in artificial general intelligence and human brain inspired cognitive architecture. In 2021 International Conference on Computer Communication and Informatics (ICCCI) (pp. 1–13). IEEE (2021).

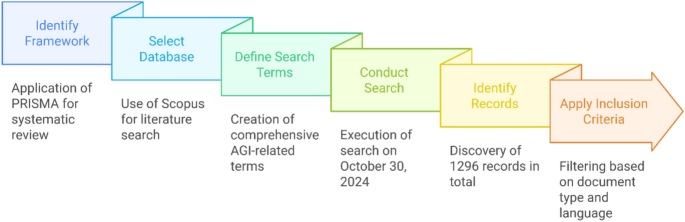

Page, M. J. et al. The PRISMA 2020 statement: an updated guideline for reporting systematic reviews. BMJ 372, n71. https://doi.org/10.1136/bmj.n71 (2021).

Donthu, N., Kumar, S., Pandey, N., Pandey, N. & Mishra, A. Mapping the electronic word-of-mouth (eWOM) research: A systematic review and bibliometric analysis. J. Bus. Res. 135, 758–773 (2021).

Comerio, N. & Strozzi, F. Tourism and its economic impact: A literature review using bibliometric tools. Tour. Econ. 25(1), 109–131 (2019).

Raman, R., Gunasekar, S., Dávid, L. D. & Nedungadi, P. Aligning sustainable aviation fuel research with sustainable development goals: Trends and thematic analysis. Energy Rep. 12, 2642–2652 (2024).

Raman, R., Gunasekar, S., Kaliyaperumal, D. & Nedungadi, P. Navigating the nexus of artificial intelligence and renewable energy for the advancement of sustainable development goals. Sustainability 16(21), 1–25 (2024).

Egger, R. & Yu, J. A topic modeling comparison between LDA, NMF, Top2Vec, and BERTopic to demystify Twitter posts. Front. Sociol. 7, 886498 (2022).

Devlin, J., Chang, M. W., Lee, K. & Toutanova, K. BERT: Pre-training of deep bidirectional transformers for language understanding. In Proceedings of the 2019 Conference of the North American Chapter of the Association for Computational Linguistics: Human Language Technologies (NAACL-HLT 2019), Vol. 1 (pp. 4171–4186) (2019).

Grootendorst, M. BERTopic: Neural topic modeling with a class-based TF-IDF procedure (2022). arXiv preprint arXiv:2203.05794.

Alamsyah, A. & Girawan, N. D. Improving clothing product quality and reducing waste based on consumer review using RoBERTa and BERTopic language model. Big Data Cogn. Comput. 7(4), 168 (2023).

Raman, R. et al. Green and sustainable AI research: An integrated thematic and topic modeling analysis. J. Big Data 11(1), 55 (2024).

Um, T. & Kim, N. A study on performance enhancement by integrating neural topic attention with transformer-based language model. Appl. Sci. 14(17), 7898 (2024).

Gan, L., Yang, T., Huang, Y., Yang, B., Luo, Y. Y., Richard, L. W. C. & Guo, D. Experimental comparison of three topic modeling methods with LDA, Top2Vec and BERTopic. In International Symposium on Artificial Intelligence and Robotics (pp. 376–391). Singapore: Springer Nature Singapore (2023).

Yi, J., Oh, Y. K. & Kim, J. M. Unveiling the drivers of satisfaction in mobile trading: Contextual mining of retail investor experience through BERTopic and generative AI. J. Retail. Consum. Serv. 82, 104066 (2025).

Oh, Y. K., Yi, J. & Kim, J. What enhances or worsens the user-generated metaverse experience? An application of BERTopic to Roblox user eWOM. Internet Res. (2023).

Kim, K., Kogler, D. F. & Maliphol, S. Identifying interdisciplinary emergence in the science of science: Combination of network analysis and BERTopic. Hum. Soc. Sci. Commun. 11(1), 1–15 (2024).

Khodeir, N. & Elghannam, F. Efficient topic identification for urgent MOOC Forum posts using BERTopic and traditional topic modeling techniques. Educ. Inf. Technol. 1–27 (2024).

McInnes, L., Healy, J. & Melville, J. UMAP: Uniform manifold approximation and projection for dimension reduction (2018).

Douglas, T., Capra, L. & Musolesi, M. A computational linguistic approach to study border theory at scale. Proc. ACM Hum. Comput. Interact. 8(CSCW1), 1–23 (2024).

Pelau, C., Dabija, D. C. & Ene, I. What makes an AI device human-like? The role of interaction quality, empathy and perceived psychological anthropomorphic characteristics in the acceptance of artificial intelligence in the service industry. Comput. Hum. Behav. 122, 106855 (2021).

Yang, Y., Liu, Y., Lv, X., Ai, J. & Li, Y. Anthropomorphism and customers’ willingness to use artificial intelligence service agents. J. Hosp. Mark. Manag. 31(1), 1–23 (2022).

Kaplan, A. & Haenlein, M. Rulers of the world, unite! The challenges and opportunities of artificial intelligence. Bus. Horizons 63(1), 37–50 (2020).

Sallab, A. E., Abdou, M., Perot, E. & Yogamani, S. Deep reinforcement learning framework for autonomous driving (2017). arXiv preprint arXiv:1704.02532.

Taecharungroj, V. “What can ChatGPT do?” Analyzing early reactions to the innovative AI chatbot on Twitter. Big Data Cogn. Comput. 7(1), 35 (2023).

Hohenecker, P. & Lukasiewicz, T. Ontology reasoning with deep neural networks. J. Artif. Intell. Res. 68, 503–540 (2020).

Došilović, F. K., Brčić, M. & Hlupić, N. Explainable artificial intelligence: A survey. In 2018 41st International Convention on Information and Communication Technology, Electronics and Microelectronics (MIPRO) (pp. 0210–0215). IEEE (2018).

Silver, D., Singh, S., Precup, D. & Sutton, R. S. Reward is enough. Artif. Intell. 299, 103535 (2021).

McCarthy, J. From here to human-level AI. Artif. Intell. 171(18), 1174–1182 (2007).

Wallach, W., Franklin, S. & Allen, C. A conceptual and computational model of moral decision making in human and artificial agents. Top. Cogn. Sci. 2(3), 454–485 (2010).

Franklin, S. Artificial Minds. MIT Press, p. 412 (1995).

Yampolskiy, R. V. Turing test as a defining feature of AI-completeness. Artificial Intelligence, Evolutionary Computing and Metaheuristics: In the Footsteps of Alan Turing, 3–17 (2013).

Agüera y Arcas, B. Do large language models understand us? Daedalus 151(2), 183–197 (2022).

Pei, J. et al. Towards artificial general intelligence with hybrid Tianjic chip architecture. Nature 572(7767), 106–111 (2019).

Stanley, K. O., Clune, J., Lehman, J. & Miikkulainen, R. Designing neural networks through neuroevolution. Nat. Mach. Intell. 1(1), 24–35 (2019).

Sejnowski, T. J. The unreasonable effectiveness of deep learning in artificial intelligence. Proc. Natl. Acad. Sci. 117(48), 30033–30038 (2020).

Wang, J. & Pashmforoosh, R. A new framework for ethical artificial intelligence: Keeping HRD in the loop. Hum. Resour. Dev. Int. 27(3), 428–451 (2024).

Boltuc, P. Human-AGI Gemeinschaft as a solution to the alignment problem. In International Conference on Artificial General Intelligence (pp. 33–42). Cham: Springer Nature Switzerland (2024).

Naudé, W. & Dimitri, N. The race for an artificial general intelligence: Implications for public policy. AI Soc. 35, 367–379 (2020).

Cichocki, A. & Kuleshov, A. P. Future trends for human-AI collaboration: A comprehensive taxonomy of AI/AGI using multiple intelligences and learning styles. Comput. Intell. Neurosci. 2021(1), 8893795 (2021).

Lake, B. M., Ullman, T. D., Tenenbaum, J. B. & Gershman, S. J. Building machines that learn and think like people. Behav. Brain Sci. 40, e253 (2017).

Shalaby, A. Classification for the digital and cognitive AI hazards: urgent call to establish automated safe standard for protecting young human minds. Digit. Econ. Sustain. Dev. 2(1), 17 (2024).

Liu, C. Y. & Yin, B. Affective foundations in AI-human interactions: Insights from evolutionary continuity and interspecies communications. Comput. Hum. Behav. 161, 108406 (2024).

Dong, Y., Hou, J., Zhang, N. & Zhang, M. Research on how human intelligence, consciousness, and cognitive computing affect the development of artificial intelligence. Complexity 2020(1), 1680845 (2020).

Muggleton, S. Alan Turing and the development of artificial intelligence. AI Commun. 27(1), 3–10 (2014).

Liu, Y., Zheng, W. & Su, Y. Enhancing ethical governance of artificial intelligence through dynamic feedback mechanism. In International Conference on Information (pp. 105–121). Cham: Springer Nature Switzerland (2024).

Qu, P. et al. Research on general-purpose brain-inspired computing systems. J. Comput. Sci. Technol. 39(1), 4–21 (2024).

Nadji-Tehrani, M. & Eslami, A. A brain-inspired framework for evolutionary artificial general intelligence. IEEE Trans. Neural Netw. Learn. Syst. 31(12), 5257–5271 (2020).

Huang, T. J. Imitating the brain with neurocomputer a “new” way towards artificial general intelligence. Int. J. Autom. Comput. 14(5), 520–531 (2017).

Zhao, L. et al. When brain-inspired AI meets AGI. Meta-Radiol. 1, 100005 (2023).

Qadri, Y. A., Ahmad, K. & Kim, S. W. Artificial general intelligence for the detection of neurodegenerative disorders. Sensors 24(20), 6658 (2024).

Kasabov, N. K. Neuroinformatics, neural networks and neurocomputers for brain-inspired computational intelligence. In 2023 IEEE 17th International Symposium on Applied Computational Intelligence and Informatics (SACI) (pp. 000013–000014). IEEE (2023).

Stiefel, K. M. & Coggan, J. S. The energy challenges of artificial superintelligence. Front. Artif. Intell. 6, 1240653 (2023).

Yang, S. & Chen, B. Effective surrogate gradient learning with high-order information bottleneck for spike-based machine intelligence. IEEE Trans. Neural Netw. Learn. Syst. (2023).

Yang, S. & Chen, B. SNIB: Improving spike-based machine learning using nonlinear information bottleneck. IEEE Trans. Syst. Man Cybern. Syst. 53, 7852 (2023).

Walton, P. Artificial intelligence and the limitations of information. Information 9(12), 332 (2018).

Ringel Raveh, A. & Tamir, B. From homo sapiens to robo sapiens: the evolution of intelligence. Information 10(1), 2 (2018).

Chen, B. & Chen, J. China’s legal practices concerning challenges of artificial general intelligence. Laws 13(5), 60 (2024).

Mamak, K. AGI crimes? The role of criminal law in mitigating existential risks posed by artificial general intelligence. AI Soc. 1–11 (2024).

Jungherr, A. Artificial intelligence and democracy: A conceptual framework. Soc. Media + Soc. 9(3), 20563051231186353 (2023).

Guan, L. & Xu, L. The mechanism and governance system of the new generation of artificial intelligence from the perspective of general purpose technology. Xitong Gongcheng Lilun yu Shijian/Syst. Eng. Theory Pract. 44(1), 245–259 (2024).

Pregowska, A. & Perkins, M. Artificial intelligence in medical education: Typologies and ethical approaches. Ethics Bioethics 14(1–2), 96–113 (2024).

Bereska, L. & Gavves, E. Taming simulators: Challenges, pathways and vision for the alignment of large language models. In Proceedings of the AAAI Symposium Series (Vol. 1, No. 1, pp. 68–72) (2023).

Chen, G., Zhang, Y. & Jiang, R. A novel artificial general intelligence security evaluation scheme based on an analytic hierarchy process model with a generic algorithm. Appl. Sci. 14(20), 9609 (2024).

Sotala, K. & Yampolskiy, R. Risks of the journey to the singularity. Technol. Singular. Manag. J. 11–23 (2017).

Mercier-Laurent, E. The future of AI or AI for the future. Unimagined Futures–ICT Opportunities and Challenges 20–37 (2020).

Miller, J. D. Some economic incentives facing a business that might bring about a technological singularity. In Singularity hypotheses: A scientific and philosophical assessment (pp. 147–159). Berlin, Heidelberg: Springer Berlin Heidelberg (2013).

Bramer, W. M., Rethlefsen, M. L., Kleijnen, J. & Franco, O. H. Optimal database combinations for literature searches in systematic reviews: A prospective exploratory study. Syst. Rev. 6, 1–12 (2017).

Mishra, V. & Mishra, M. P. PRISMA for review of management literature–method, merits, and limitations—An academic review. Advancing Methodologies of Conducting Literature Review in Management Domain, 125–136 (2023).

de Groot, M., Aliannejadi, M. & Haas, M. R. Experiments on generalizability of BERTopic on multi-domain short text (2022). arXiv preprint arXiv:2212.08459.

Letrud, K. & Hernes, S. Affirmative citation bias in scientific myth debunking: A three-in-one case study. PLoS ONE 14(9), e0222213 (2019).

McCain, K. W. Assessing obliteration by incorporation in a full-text database: JSTOR, Economics, and the concept of “bounded rationality”. Scientometrics 101, 1445–1459 (2014).