Generative AI is unlocking new capabilities for PCs and workstations, including game assistants, enhanced content-creation and productivity tools and more.

NVIDIA NIM microservices, available now, and AI Blueprints, arriving in the coming weeks, accelerate AI development and improve its accessibility. Announced at the CES trade show in January, NVIDIA NIM provides prepackaged, state-of-the-art AI models optimized for the NVIDIA RTX platform, including the NVIDIA GeForce RTX 50 Series and, now, the new NVIDIA Blackwell RTX PRO GPUs. The microservices are easy to download and run. They span the top modalities for PC development and are compatible with top ecosystem applications and tools.

The experimental System Assistant feature of Project G-Assist was also released today. Project G-Assist showcases how AI assistants can enhance apps and games. The System Assistant lets users run real-time diagnostics, get recommendations on performance optimizations, or control system software and peripherals, all via simple voice or text commands. Developers and enthusiasts can extend its capabilities with a simple plug-in architecture and a new plug-in builder.

We are at a pivotal moment in computing, with groundbreaking AI models and a global developer community driving an explosion of AI-powered tools and workflows. NIM microservices, AI Blueprints and G-Assist are helping bring key innovations to PCs. This RTX AI Garage blog series will continue to deliver updates, insights and resources to help developers and enthusiasts build the next wave of AI on RTX AI PCs and workstations.

Ready, set, NIM!

Though the pace of innovation in AI is incredible, it can still be difficult for the PC developer community to get started with the technology.

Bringing AI models from research to the PC requires curating model variants, adapting them to handle all of the input and output data, and quantizing them to optimize resource usage. In addition, the models must be converted to work with optimized inference backend software and connected to new AI application programming interfaces (APIs). This takes substantial effort, which can slow AI adoption.
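
For a rough sense of why quantization matters, consider weight storage alone: it takes about parameters × bits per weight ÷ 8 bytes. The sketch below applies that rule of thumb to a hypothetical 8-billion-parameter model. It is an illustration, not an NVIDIA sizing tool, and it ignores activations, the KV cache and runtime overhead, so real memory use is higher.

```python
def weight_memory_gb(num_params: float, bits_per_weight: int) -> float:
    """Approximate memory for model weights alone, in decimal GB (1 GB = 1e9 bytes).

    Ignores activations, KV cache and runtime overhead, so actual usage is higher.
    """
    total_bytes = num_params * bits_per_weight / 8  # 8 bits per byte
    return total_bytes / 1e9


if __name__ == "__main__":
    params = 8e9  # hypothetical 8-billion-parameter LLM
    for label, bits in [("16-bit", 16), ("8-bit", 8), ("4-bit", 4)]:
        print(f"{label}: {weight_memory_gb(params, bits):.1f} GB")
    # → 16-bit: 16.0 GB, 8-bit: 8.0 GB, 4-bit: 4.0 GB (one per line)
```

In this example, going from 16-bit to 4-bit weights shrinks the footprint from 16 GB to 4 GB, which can decide whether a model fits in a GPU's VRAM at all.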

NVIDIA NIM microservices help solve this problem by providing prepackaged, optimized, easily downloadable AI models that connect to industry-standard APIs. They are optimized for performance on RTX AI PCs and workstations, and include the top AI models from the community as well as models developed by NVIDIA.
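
Because the microservices speak industry-standard APIs, calling one from an app can look like any other web request. Here is a minimal sketch using only Python's standard library. It assumes a NIM LLM microservice running locally with an OpenAI-style `/v1/chat/completions` endpoint on port 8000; that URL and the `example/llm-model` model name are placeholders to replace with the values your deployment actually reports.

```python
import json
import urllib.request

# Assumption: a local NIM LLM microservice exposes an OpenAI-style
# chat completions endpoint here. Adjust host and port to your setup.
NIM_URL = "http://localhost:8000/v1/chat/completions"
# Hypothetical model identifier; use the name your microservice reports.
MODEL_ID = "example/llm-model"


def build_chat_request(prompt: str, url: str = NIM_URL, model: str = MODEL_ID,
                       max_tokens: int = 256) -> urllib.request.Request:
    """Build (but do not send) an OpenAI-style chat completions POST request."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": max_tokens,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt: str) -> str:
    """Send the request and return the first reply's text (needs a running server)."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=60) as resp:
        body = json.load(resp)
    return body["choices"][0]["message"]["content"]


if __name__ == "__main__":
    # Inspect the request without contacting a server.
    req = build_chat_request("Summarize what a NIM microservice is in one sentence.")
    print(req.get_method(), req.full_url)
```

`build_chat_request` only prepares the request, so it can be inspected offline; `ask` sends it and reads the first entry of the `choices` list in the OpenAI-style response. Check the microservice's own documentation for the exact endpoint and model names it exposes.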

https://www.youtube.com/watch?v=K_MNB6RLHA

NIM microservices support a range of AI applications, including large language models (LLMs), vision language models, image generation, speech processing, retrieval-augmented generation (RAG)-based search, PDF extraction and computer vision. Ten NIM microservices for RTX are available, covering language and image generation, computer vision, speech AI and more. Get started with these NIM microservices today:

NIM microservices are also available through top AI ecosystem tools and frameworks.

For AI enthusiasts, AnythingLLM and ChatRTX now support NIM, making it easy to chat with LLMs and AI agents through a simple, user-friendly interface. With these tools, users can create personalized AI assistants and integrate their own documents and data, helping automate tasks and enhance productivity.

https://www.youtube.com/watch?v=q7ezjupnba4

For developers looking to build, test and integrate AI into their applications, FlowiseAI and Langflow now support NIM and offer low- and no-code solutions with visual interfaces for designing AI workflows with minimal coding expertise. Support for ComfyUI is coming soon. With these tools, developers can easily create complex AI applications such as chatbots, image generators and data analysis systems.

In addition, Microsoft VS Code AI Toolkit, CrewAI and LangChain now support NIM and provide advanced capabilities for integrating the microservices into application code, helping ensure seamless integration and optimization.

Visit the NVIDIA Technical Blog and build.nvidia.com to get started.

NVIDIA AI Blueprints will offer prebuilt workflows

NVIDIA AI Blueprints give AI developers a head start in building generative AI workflows with NVIDIA NIM microservices.

Blueprints are ready-to-use, extensible reference samples that bundle everything needed (source code, sample data, documentation and a demo app) to create and customize advanced AI workflows that run locally. Developers can modify and extend AI Blueprints to tweak their behavior, use different models or implement completely new functionality.

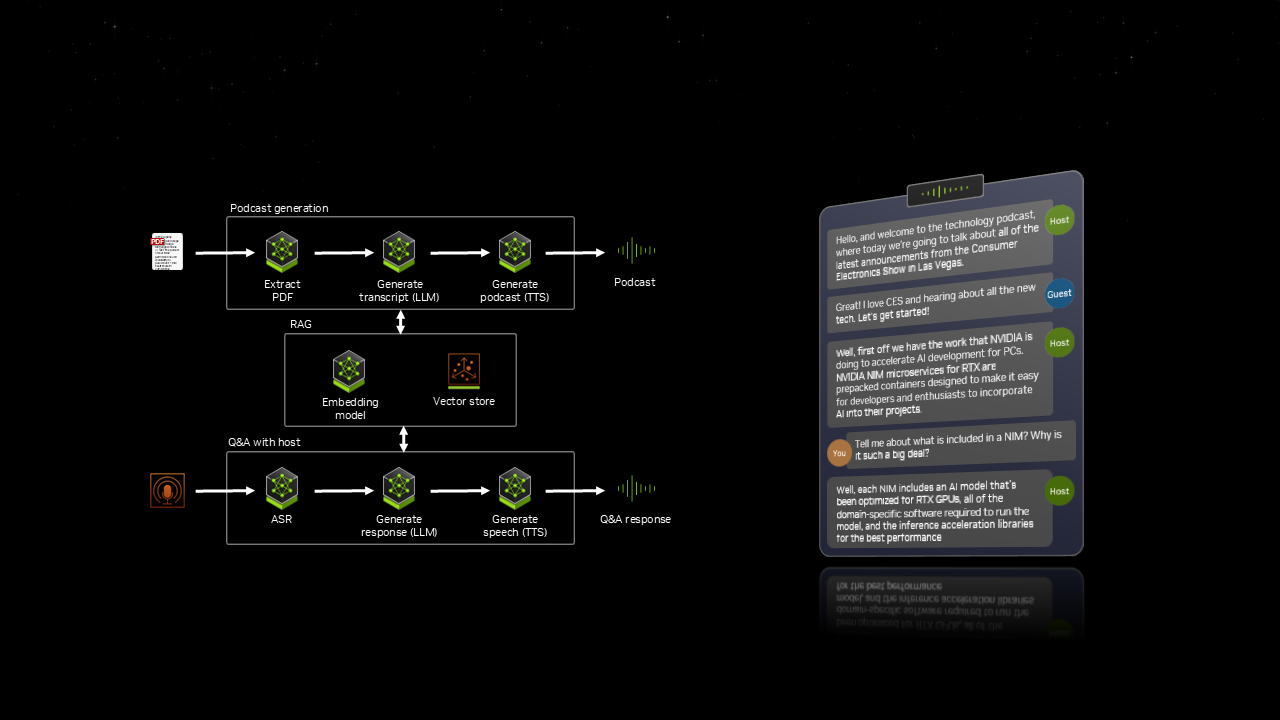

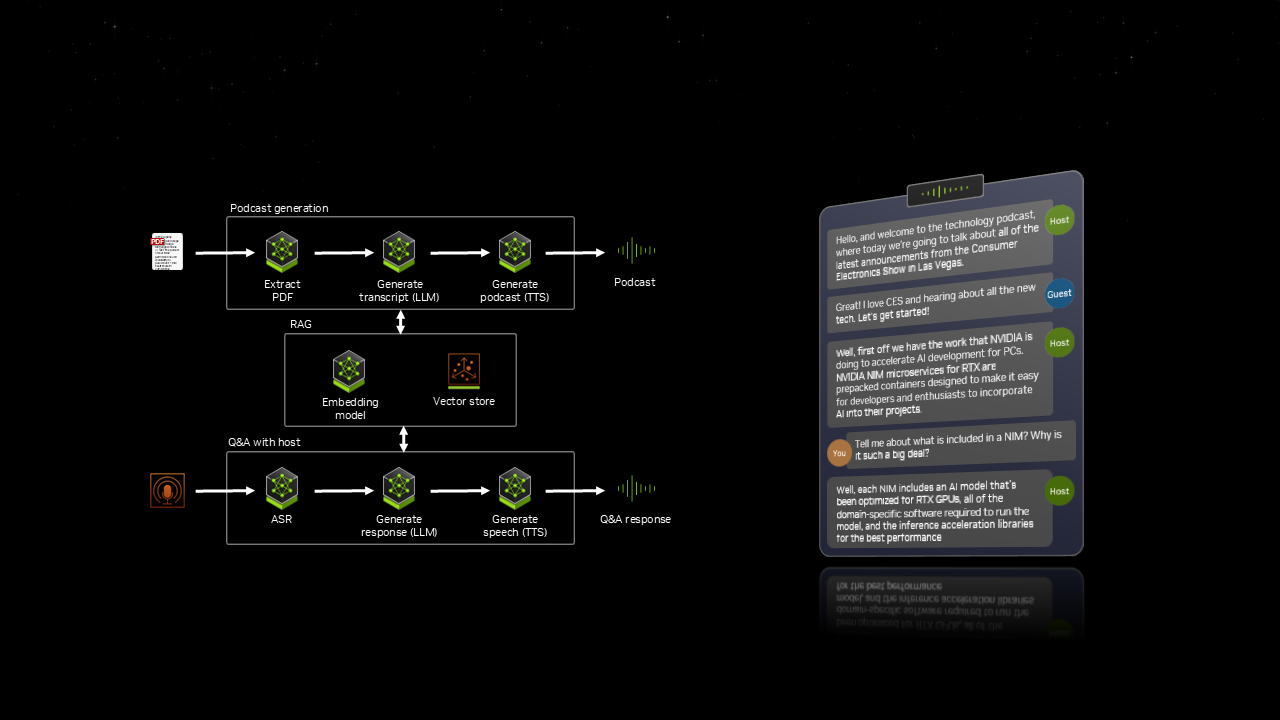

The PDF to podcast AI Blueprint will transform documents into audio content so users can learn on the go. By extracting text, images and tables from a PDF, the workflow uses AI to generate an informative podcast. For deeper dives into topics, users can then have an interactive discussion with the AI-powered podcast hosts.

The AI Blueprint for 3D-guided generative AI will give artists finer control over image generation. While AI can generate amazing images from simple text prompts, controlling image composition with words alone can be challenging. With this blueprint, creators can use simple 3D objects laid out in a 3D renderer like Blender to guide AI image generation. The artist can create 3D assets by hand or generate them with AI, place them in the scene and set the camera in the 3D viewport. Then, a prepackaged workflow powered by the FLUX NIM microservice uses the current composition to generate high-quality images that match the 3D scene.

NVIDIA NIM on RTX with Windows Subsystem for Linux

One of the key technologies that enables NIM microservices to run on PCs is Windows Subsystem for Linux (WSL).

Microsoft and NVIDIA collaborated to bring CUDA and RTX acceleration to WSL, making it possible to run optimized, containerized microservices on Windows. This allows the same NIM microservice to run anywhere, from PCs and workstations to the data center and the cloud.

Get started with NVIDIA NIM on RTX AI PCs at build.nvidia.com.

Project G-Assist expands PC AI features with custom plug-ins

As part of Project G-Assist, an experimental version of the System Assistant feature for GeForce RTX desktop users is now available via the NVIDIA app, with laptop support coming soon.

G-Assist helps users control a broad range of PC settings, including optimizing game and system settings, charting frame rates and other key performance statistics, and controlling select peripheral settings such as lighting, all via basic voice or text commands.

G-Assist is built on NVIDIA ACE, the same AI technology suite game developers use to breathe life into non-player characters. Unlike AI tools that rely on massive cloud-hosted AI models requiring online access and paid subscriptions, G-Assist runs locally on a GeForce RTX GPU. This means it is responsive, free and can run without an internet connection. Manufacturers and software providers are already using ACE to create custom AI assistants like G-Assist, including MSI's AI Robot engine, the Streamlabs Intelligent AI Assistant and upcoming capabilities in HP's OMEN Gaming Hub.

G-Assist was built for community-driven expansion. Get started with this NVIDIA GitHub repository, which includes samples and instructions for creating plug-ins that add new functionality. Developers can define functions in simple JSON formats and drop configuration files into a designated directory, allowing G-Assist to automatically load and interpret them. Developers can even submit plug-ins to NVIDIA for review and potential inclusion.
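
The general pattern of declaring functions in JSON and letting a host discover them from a directory can be sketched as follows. The manifest fields (`name`, `description`, `functions`, `parameters`), the one-folder-per-plug-in layout and the `manifest.json` file name are hypothetical stand-ins chosen for illustration, not G-Assist's actual schema, which is documented in the NVIDIA GitHub repository.

```python
import json
import tempfile
from pathlib import Path

# Hypothetical manifest shape for illustration only; G-Assist's real
# plug-in schema is documented in NVIDIA's GitHub repository.
EXAMPLE_MANIFEST = {
    "name": "example_music_plugin",
    "description": "Controls music playback.",
    "functions": [
        {"name": "play_pause", "description": "Toggle playback.", "parameters": {}},
        {"name": "set_volume", "description": "Set volume from 0 to 100.",
         "parameters": {"level": "integer"}},
    ],
}


def discover_functions(plugin_dir: Path) -> dict[str, dict]:
    """Read every <plugin>/manifest.json under plugin_dir and index its functions.

    Keys are "<plugin name>.<function name>". Unreadable, malformed or
    incomplete manifests are skipped instead of aborting the whole scan.
    """
    registry: dict[str, dict] = {}
    for manifest_path in sorted(plugin_dir.glob("*/manifest.json")):
        try:
            manifest = json.loads(manifest_path.read_text(encoding="utf-8"))
        except (OSError, ValueError):  # ValueError covers bad JSON and bad encoding
            continue
        if not isinstance(manifest, dict) or "name" not in manifest:
            continue
        functions = manifest.get("functions")
        if not isinstance(functions, list):
            continue
        for fn in functions:
            if isinstance(fn, dict) and "name" in fn:
                registry[f"{manifest['name']}.{fn['name']}"] = fn
    return registry


if __name__ == "__main__":
    # Simulate a plug-in directory with one valid and one broken manifest.
    with tempfile.TemporaryDirectory() as tmp:
        root = Path(tmp)
        (root / "music").mkdir()
        (root / "music" / "manifest.json").write_text(
            json.dumps(EXAMPLE_MANIFEST), encoding="utf-8")
        (root / "broken").mkdir()
        (root / "broken" / "manifest.json").write_text("{not json", encoding="utf-8")
        print(sorted(discover_functions(root)))
        # → ['example_music_plugin.play_pause', 'example_music_plugin.set_volume']
```

Skipping bad manifests rather than raising keeps one faulty plug-in from blocking the rest, which suits a directory that community members add files to.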

Currently available sample plug-ins include Spotify, which enables music and volume control, and Google Gemini, which lets G-Assist invoke a much larger cloud-based AI for more complex conversations, brainstorming sessions and web searches using a free Google AI Studio API key.

In the clip below, you'll see G-Assist ask Gemini which Legend to pick in Apex Legends when solo queueing, and whether it's wise to jump into Nightmare mode in Diablo IV:

https://www.youtube.com/watch?v=IUGXMMLWIN8

For even more customization, follow the instructions in the GitHub repository to generate G-Assist plug-ins using a ChatGPT-based “Plug-in Builder.” With this tool, users can write and export code, then integrate it into G-Assist, enabling quick, AI-assisted functionality that responds to text and voice commands.

Watch how a developer used the Plug-in Builder to create a Twitch plug-in for G-Assist that checks whether a streamer is live:

https://www.youtube.com/watch?v=ptakdh1jpvu

More details on how to create, share and load plug-ins are available in the NVIDIA GitHub repository.

Check out the G-Assist article for system requirements and additional information.

Build, create, innovate

NVIDIA NIM microservices for RTX are available at build.nvidia.com, giving developers and AI enthusiasts powerful, ready-to-use tools for building AI applications.

Download Project G-Assist through the NVIDIA app's “Home” tab, in the “Discovery” section. G-Assist currently supports GeForce RTX desktop GPUs, as well as a variety of voice and text commands in English. Future updates will add support for GeForce RTX Laptop GPUs, new and enhanced G-Assist capabilities, and additional languages. Press “Alt+G” after installation to activate G-Assist.

Each week, RTX AI Garage features community-driven AI innovations and content for those looking to learn more about NIM microservices and AI Blueprints, as well as building AI agents, creative workflows, digital humans, productivity apps and more on AI PCs and workstations.

Plug in to NVIDIA AI PC on Facebook, Instagram, TikTok and X, and stay informed by subscribing to the RTX AI PC newsletter.

Follow NVIDIA Workstation on LinkedIn and X.

See notice regarding software product information.