Nvidia, known for its sophisticated computer chips, is doubling down on its artificial intelligence offering by developing a new generative AI audio model capable of “producing sounds never heard before.”

The new AI model is called Fugatto, which stands for Foundational Generative Audio Transformer Opus 1. Nvidia says it can generate, transform and manipulate sound using text and audio input, creating sounds such as a trumpet that barks or a saxophone that meows. The model can also generate “high-quality singing voices” from text prompts.

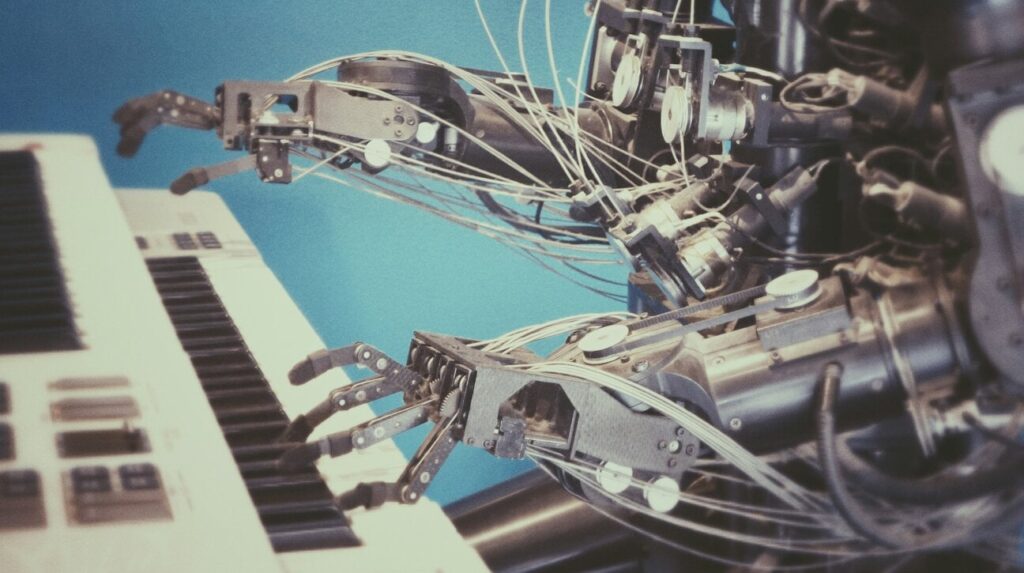

Fugatto’s key features include creating snippets of music from text prompts, editing existing songs by adding or removing instruments, changing voice characteristics such as accent and emotion, and generating entirely new sounds. Nvidia described its new technology as “a Swiss Army knife for sound.”

Nvidia demonstrated Fugatto’s capabilities in a video showing how users can generate sounds using prompts such as: “Create a sound where a train passes and becomes a lush string orchestra.” Fugatto also allows users to isolate vocals from songs, among other features, as shown in the video.

“This thing is wild,” said Ido Zmishlany, multi-platinum producer, songwriter and co-founder of One Take Audio, a member of the NVIDIA Inception program for cutting-edge startups.

“Sound is my inspiration. This is what drives me to create music. The idea of being able to create entirely new sounds on the fly in the studio is incredible.”

Fugatto uses ComposableART, a technique that allows users to combine instructions that were seen only separately during training. This means users can request complex audio transformations, such as text spoken with a sense of sadness in a French accent, Nvidia explained.

The model also introduces temporal interpolation, allowing the creation of evolving soundscapes. For example, users can generate a rainstorm that gradually builds, with crescendos of thunder fading into the distance.

Nvidia noted that Fugatto is a transformer model with 2.5 billion parameters, trained on NVIDIA DGX systems using 32 NVIDIA H100 Tensor Core GPUs, the same GPUs that power Vultr, which claims to be the world’s largest privately held cloud computing platform.

Meanwhile, Nvidia said its research team, with members from India, Brazil, China, Jordan and South Korea, spent more than a year building a dataset containing millions of audio samples to develop Fugatto.

Fugatto can be applied across multiple industries, including music production, advertising, language learning and video game development, Nvidia said.

Rafael Valle, head of applied audio research at NVIDIA and a contributor to the project, described Fugatto as “our first step toward a future where unsupervised multi-task learning in audio synthesis and transformation emerges from data and model scale.”

“We wanted to create a model that understands and generates sound like humans do,” Valle said.

Nvidia is the latest tech company to launch an AI audio tool, joining other companies such as Stability AI, OpenAI and Google DeepMind. However, Nvidia has not yet announced a timetable for the public release or commercial availability of Fugatto.

Jensen Huang, founder and CEO of NVIDIA, said last week when the company published its third-quarter results: “The AI era is in full swing, propelling a global shift toward NVIDIA computing.”

“AI is transforming every industry, business and country. Businesses are adopting agentic AI to revolutionize workflows. Investments in industrial robotics are increasing with breakthroughs in physical AI. And countries have realized the importance of developing their national AI and infrastructure.”

Music Business Worldwide