Did a human write that, or ChatGPT? It can be hard to tell. Perhaps too hard, thinks its creator OpenAI, which is why it is working on a way to “watermark” AI-generated content.

In a lecture at the University of Texas at Austin, computer science professor Scott Aaronson, currently a guest researcher at OpenAI, revealed that OpenAI is developing a tool for “statistically watermarking the outputs of a text (AI system).” Whenever a system (say, ChatGPT) generates text, the tool would embed an “imperceptible secret signal” indicating where the text came from.

OpenAI engineer Hendrik Kirchner has built a working prototype, Aaronson says, and the hope is to build it into future systems developed by OpenAI.

“We want it to be much harder to take an output (from an AI system) and pass it off as if it came from a human,” Aaronson said in his remarks. “This could be helpful for preventing academic plagiarism, obviously, but also, for example, mass generation of propaganda: you know, spamming every blog with seemingly on-topic comments supporting Russia’s invasion of Ukraine without even a building full of trolls in Moscow. Or impersonating someone’s writing style in order to incriminate them.”

Random

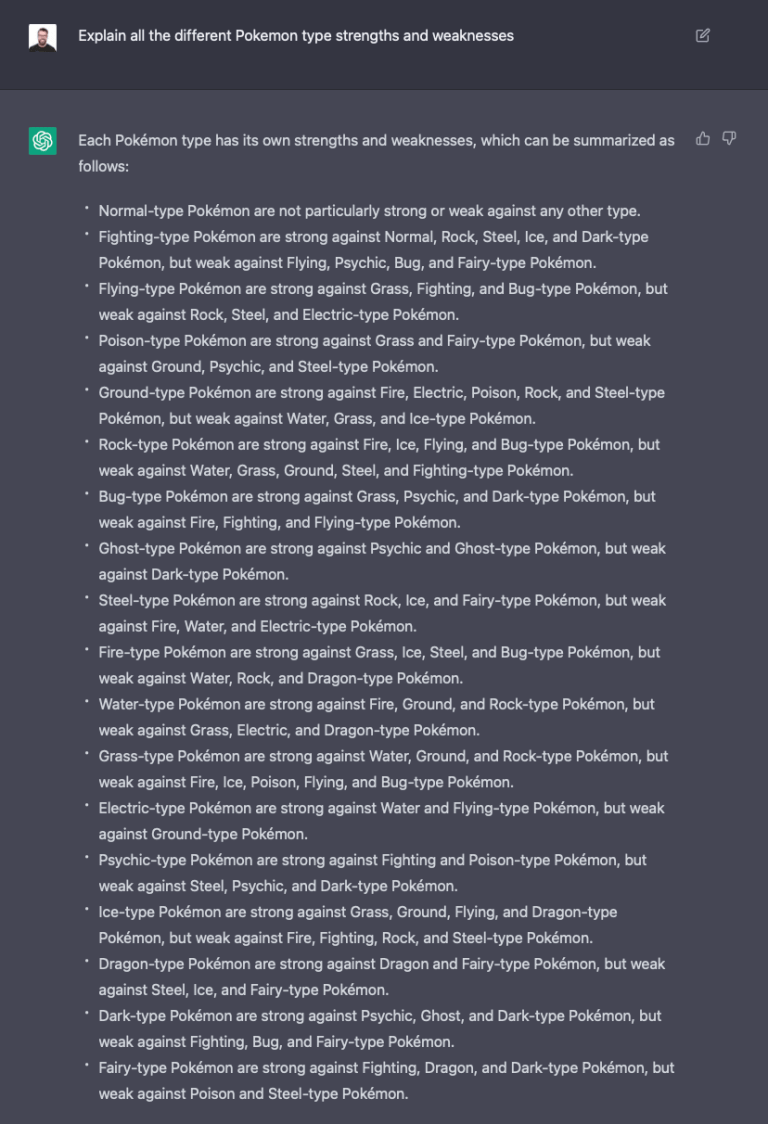

Why the need for a watermark? ChatGPT is a strong example. The chatbot developed by OpenAI has taken the internet by storm, showing an aptitude not only for answering challenging questions but also for writing poetry, solving programming puzzles and waxing poetic on any number of philosophical topics.

While ChatGPT is highly entertaining, and genuinely useful, the system raises obvious ethical concerns. Like many text-generating systems before it, ChatGPT could be used to write high-quality phishing emails and harmful malware, or to cheat at school. And as a question-answering tool, it is factually inconsistent, a shortcoming that led the programming Q&A site Stack Overflow to ban answers originating from ChatGPT until further notice.

To grasp the technical underpinnings of OpenAI’s watermarking tool, it helps to know why systems like ChatGPT work as well as they do. These systems understand input and output text as strings of “tokens,” which can be words but also punctuation marks and parts of words. At their core, the systems constantly generate a mathematical function called a probability distribution to decide the next token (for example, a word) to output, taking into account all previously output tokens.

In the case of OpenAI-hosted systems like ChatGPT, once the distribution has been generated, OpenAI’s server does the job of sampling tokens according to that distribution. There is some randomness in this selection, which is why the same text prompt can yield a different response.
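To make the sampling step concrete, here is a minimal Python sketch. The three-token distribution and the helper name `sample_token` are invented for illustration; a real system computes a fresh distribution over a vocabulary of many thousands of tokens at every step.

```python
import random

# Hypothetical next-token distribution for some prompt. The tokens and
# probabilities are made up for illustration only.
next_token_probs = {" cat": 0.5, " dog": 0.3, " fish": 0.2}


def sample_token(probs, rng):
    """Draw one token at random, weighted by its probability."""
    tokens = list(probs)
    weights = [probs[t] for t in tokens]
    return rng.choices(tokens, weights=weights, k=1)[0]


rng = random.Random()  # unseeded, so repeated runs can differ
draws = [sample_token(next_token_probs, rng) for _ in range(10)]
print(draws)
```

Running the script twice will usually print two different lists, which is the same effect that makes a chatbot answer one prompt in several ways.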

OpenAI’s watermarking tool acts like a “wrapper” over existing text-generating systems, Aaronson said during the lecture, leveraging a cryptographic function running at the server level to “pseudorandomly” select the next token. In theory, text generated by the system would still look random to you or me, but anyone possessing the “key” to the cryptographic function would be able to uncover a watermark.

“Empirically, a few hundred tokens seem to be enough to get a reasonable signal that yes, this text came from (an AI system). In principle, you could even take a long text and isolate which parts probably came from (the system) and which parts probably didn’t,” Aaronson said. “(The tool) can do the watermarking using a secret key and it can check for the watermark using the same key.”
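The idea of keyed pseudorandom selection and same-key checking can be sketched in a few dozen lines of Python. This is an illustration under stated assumptions, not OpenAI’s implementation: the article gives no details of the selection rule, so the rule used here (pick the token that maximizes r^(1/p), where r is a keyed pseudorandom number and p is the token’s probability) is an assumption. So are the use of HMAC-SHA256, the toy vocabulary, the stand-in `toy_model`, and every function name.

```python
import hashlib
import hmac
import math
import random

VOCAB = [f"w{i}" for i in range(50)]  # toy vocabulary (hypothetical)
CONTEXT = 4                            # previous tokens fed to the keyed function
START = ["<s>"] * CONTEXT              # padding before the first real token


def keyed_score(key, context, token):
    """Keyed pseudorandom number strictly between 0 and 1."""
    msg = "\x1f".join(list(context) + [token]).encode()
    digest = hmac.new(key, msg, hashlib.sha256).digest()
    bits = int.from_bytes(digest[:8], "big") >> 12  # keep 52 bits
    return (bits + 0.5) / 2**52


def toy_model(context):
    """Stand-in for a language model: next-token probabilities."""
    rng = random.Random("|".join(context))
    weights = [rng.random() + 0.1 for _ in VOCAB]
    total = sum(weights)
    return {tok: w / total for tok, w in zip(VOCAB, weights)}


def generate(key, length, watermark=True, seed=0):
    """Generate tokens, with keyed selection or with ordinary sampling."""
    rng = random.Random(seed)
    tokens = list(START)
    for _ in range(length):
        context = tokens[-CONTEXT:]
        probs = toy_model(context)
        if watermark:
            # Assumed rule: maximize log(r) / p, equivalent to r ** (1 / p).
            token = max(
                VOCAB,
                key=lambda t: math.log(keyed_score(key, context, t)) / probs[t],
            )
        else:
            token = rng.choices(VOCAB, weights=[probs[t] for t in VOCAB])[0]
        tokens.append(token)
    return tokens[CONTEXT:]


def detect(key, tokens):
    """Mean of -ln(1 - r) over the text, computed with the secret key."""
    padded = list(START) + list(tokens)
    total = 0.0
    for i in range(CONTEXT, len(padded)):
        r = keyed_score(key, padded[i - CONTEXT:i], padded[i])
        total += -math.log(1.0 - r)
    return total / len(tokens)


KEY = b"demo-secret-key"  # hypothetical key
marked = generate(KEY, 300, watermark=True)
plain = generate(KEY, 300, watermark=False)
print("watermarked, right key:", round(detect(KEY, marked), 2))
print("unwatermarked text:    ", round(detect(KEY, plain), 2))
print("watermarked, wrong key:", round(detect(b"wrong-key", marked), 2))
```

In this sketch the score averages about 1.0 for text that was not produced with the key, and comes out clearly higher for watermarked text checked with the right key. Without the key the scores cannot be recomputed, so the watermark stays hidden. Note that the keyed selection is fully deterministic here, unlike the ordinary sampling branch.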

Key limitations

Watermarking AI-generated text is not a new idea. Previous attempts, most of them rules-based, have relied on techniques such as synonym substitutions and syntax-specific word changes. But apart from theoretical research published by the German institute CISPA last March, OpenAI’s appears to be one of the first cryptography-based approaches to the problem.

When contacted for comment, Aaronson declined to reveal more about the watermarking prototype, except to say that he plans a research paper in the coming months. OpenAI also declined, saying only that watermarking is one of several “provenance techniques” it is exploring to detect outputs generated by AI.

Academics and industry experts, however, shared mixed opinions. They note that the tool is server-side, meaning it would not necessarily work with all text-generating systems. And they argue that it would be trivial for adversaries to work around.

“I think it would be fairly easy to get around it by rewording, using synonyms, etc.,” Srini Devadas, a computer science professor at MIT, told TechCrunch by email. “This is a bit of a tug of war.”

Jack Hessel, a research scientist at the Allen Institute for AI, pointed out that it would be difficult to fingerprint AI-generated text imperceptibly because each token is a discrete choice. Too obvious a fingerprint might result in odd word choices that degrade fluency, while too subtle a fingerprint would leave room for doubt when it is sought out.

Yoav Shoham, the co-founder and co-CEO of AI21 Labs, an OpenAI rival, does not think that statistical watermarking will be enough to help identify the source of AI-generated text. He calls for a “more comprehensive” approach that includes differential watermarking, in which different parts of a text are watermarked differently, and AI systems that more accurately cite the sources of factual text.

This specific watermarking technique also requires placing a lot of trust, and power, in OpenAI, experts noted.

“An ideal fingerprint would not be discernible by a human reader and would enable highly confident detection,” Hessel said by email. “Depending on how it is set up, it could be that OpenAI itself is the only party able to provide that detection with confidence, because of how the ‘signing’ process works.”

In his lecture, Aaronson acknowledged that the scheme would only really work in a world where companies like OpenAI are ahead in building state-of-the-art systems, and where they all agree to be responsible players. Even if OpenAI were to share the watermarking tool with other providers of text-generating systems, such as Cohere and AI21 Labs, that would not prevent others from choosing not to use it.

“If (this) becomes a free-for-all, then a lot of the safety measures do become harder, and might even be impossible, at least without government regulation,” Aaronson said. “In a world where anyone could build their own text model that was just as good as (ChatGPT, for example) ... what would you do there?”

That is how it has played out in the text-to-image domain. Unlike OpenAI, whose DALL-E 2 image-generating system is only available through an API, Stability AI open-sourced its text-to-image technology (called Stable Diffusion). While DALL-E 2 has a number of filters at the API level to prevent problematic images from being generated (plus watermarks on the images it does generate), the open source Stable Diffusion does not. Bad actors have used it to create deepfaked porn, among other toxicity.

For his part, Aaronson is optimistic. In the lecture, he expressed the belief that if OpenAI can demonstrate that watermarking works and does not affect the quality of the generated text, it has the potential to become an industry standard.

Not everyone agrees. As Devadas points out, the tool needs a key, meaning it cannot be completely open source, which potentially limits its adoption to organizations that agree to partner with OpenAI. (If the key were to be made public, anyone could deduce the pattern behind the watermarks, defeating their purpose.)

But it might not be so far-fetched. A Quora representative said that the company would be interested in using such a system, and it likely would not be the only one.

“You could worry that all this stuff about trying to be safe and responsible when scaling AI ... as soon as it seriously hurts the bottom lines of Google and Meta and Alibaba and the other major players, a lot of it will go out the window,” Aaronson said. “On the other hand, we have seen over the past 30 years that the big internet companies can agree on certain minimal standards, whether because of fear of getting sued, the desire to be seen as a responsible player, or whatever else.”