Social media analysis of AI research controversies: what are the main topics of disagreement, who participates, and how?

Building on the consultation, the UK team of Shaping AI identified a set of AI controversies from the period 2012-2022 for further analysis. To delve deeper into the “research layer” of AI controversies, the team selected five controversies from the consultation that stood out for their knowledge intensity.[2]

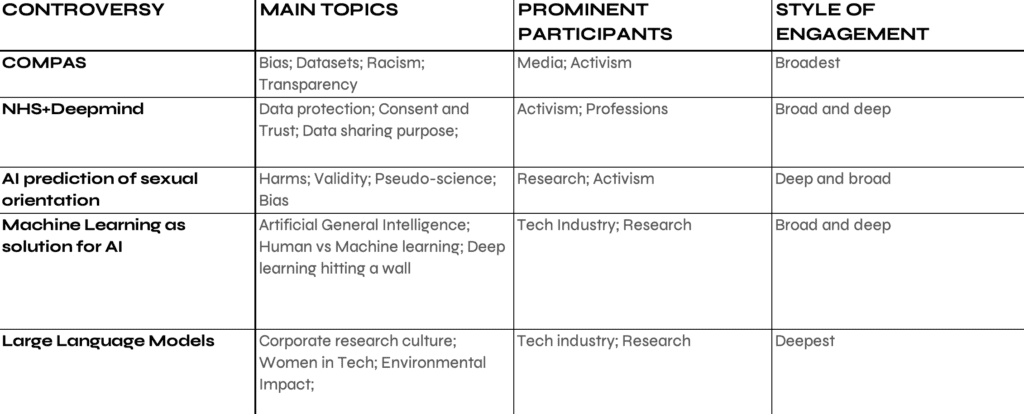

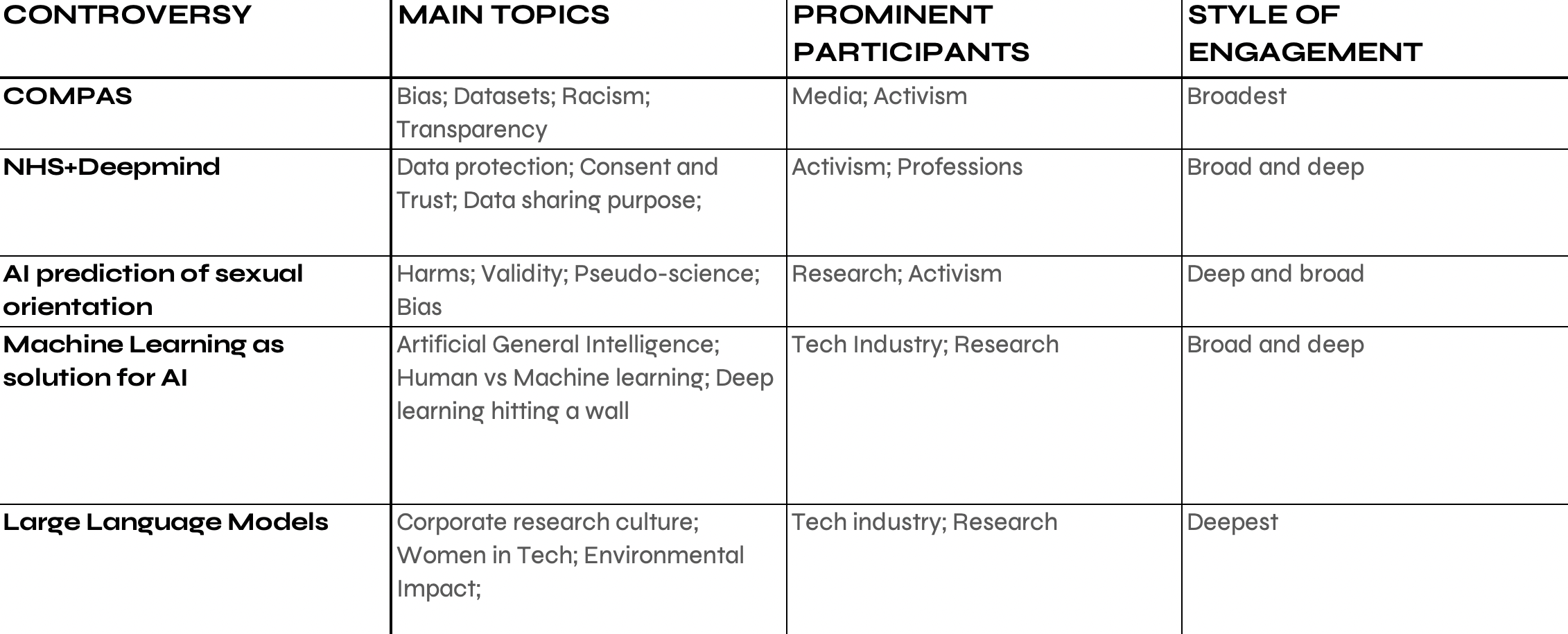

The selected AI controversies are:

- COMPAS: a controversy about algorithmic discrimination and the use of algorithmic recommender systems in US courts, sparked by the ProPublica report “Machine Bias” (2016)

- NHS+DeepMind: a controversy about data sharing between UK public sector organisations and big tech, sparked by the Powles and Hodson (2017) paper “Google DeepMind and healthcare in an age of algorithms”

- Predicting sexual orientation with AI (Gaydar): a controversy about the use of neural networks in predictive social research, sparked by the Wang and Kosinski (2017) paper “Deep neural networks are more accurate than humans at detecting sexual orientation from facial images.”

- Large Language Models (Stochastic Parrots): a controversy about large language models, sparked by the Bender et al. (2020) paper “On the Dangers of Stochastic Parrots”

- Machine Learning as a solution for AI: an extended controversy about the capacity of machine learning, and specifically of trained multilayer neural networks with large numbers of parameters, to solve the problem of AGI (Artificial General Intelligence).

To analyse these research controversies, we turned to Twitter, as our UK experts identified this platform as a prominent forum for debate (alongside Reddit and Discord), and it is well suited to controversy analysis (Housley et al., 2019; Marres, 2015; Madsen and Munk, 2019). For each of the five controversies we constructed an English-language Twitter data set[3] and conducted a controversy analysis of the Twitter conversations: for each controversy, we analysed the topics of disagreement, the actor composition, and the overall “style of engagement”[4]. The provisional results of this analysis are presented below.

Our analysis shows that AI research controversies on Twitter, like those identified in the expert consultation, are primarily concerned with the underlying architectures of AI research and with the structural transformation of science, economy, and society through contemporary AI. Engaging with the research literature, the selected controversies highlight significant societal risks, harms and problems of AI: disinformation, the discriminatory impacts of AI use in the public sector, the lack of transparency of data sets and methods, growing corporate control over research, the environmental impacts of large language models, and the transfer of personal and public data to private actors. All of the research controversies under scrutiny identified problems with the consolidation of power in, and the lack of oversight over, the technological economy (Slater and Barry, 2005) that underpins AI research and innovation.

Table 1: Main topics of disagreement, main actors and overall style of engagement in selected AI research controversies on Twitter.

Also of note is that participation in the social media discourse on the AI controversies under scrutiny was diverse but relatively narrow. Most controversies were dominated by actors with direct knowledge of AI research and of the societal impacts of technology, with participants from the tech industry and from activism especially dominant. Academic researchers were a notable presence too, but policymakers and professionals played a less prominent role. The controversy about the NHS-DeepMind data-sharing agreement was the only debate in which policymakers and legal and medical professionals played a prominent role on Twitter. Twitter, of course, provides a highly partial perspective on what makes AI controversial, and we therefore invited UK-based experts from the 2021 consultation to review the five AI controversies from a UK perspective during our “Shifting Controversies” expert workshop in March 2023.

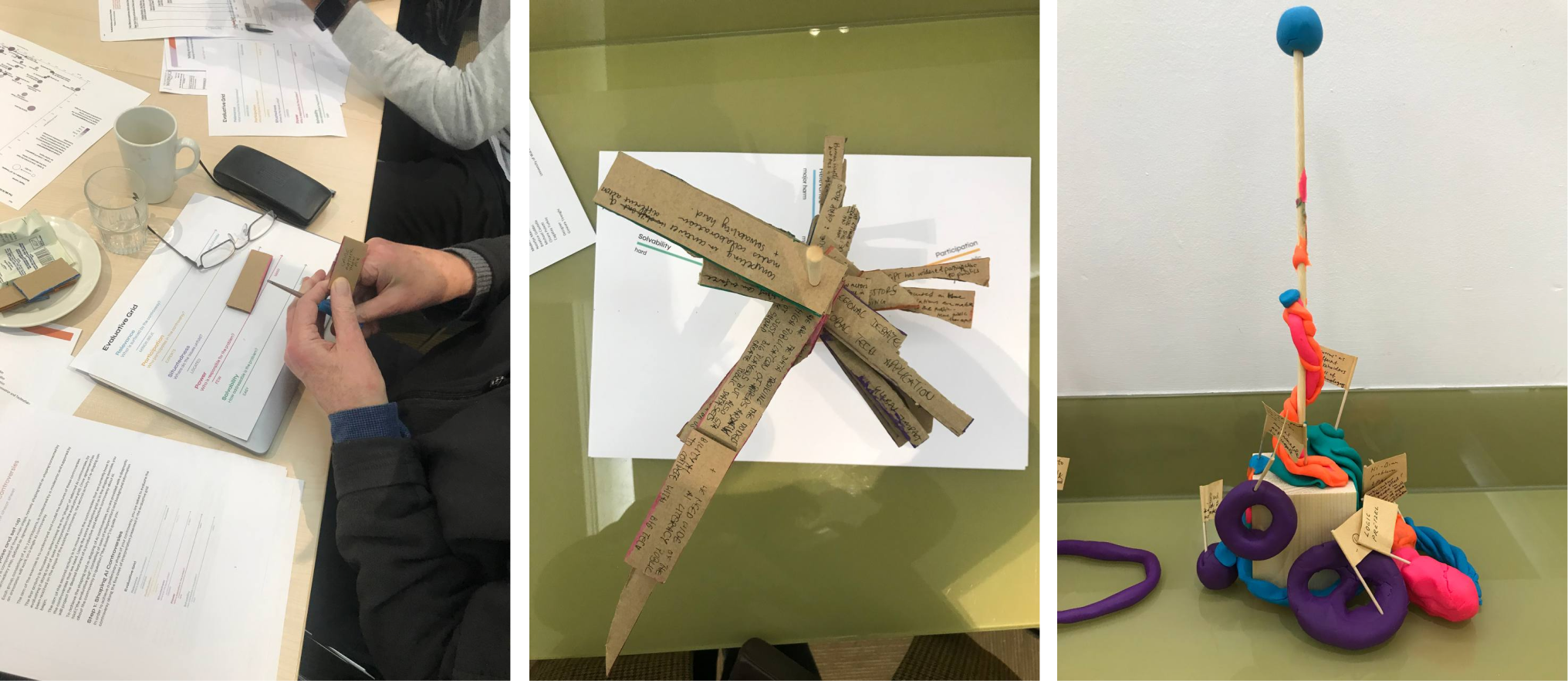

Figure 6: The “controversy shape shifter” in use: shaping and re-shaping AI controversies with design methods