The future of running LLMs may no longer rely on expensive infrastructure or GPUs. While India works on developing its own foundational model under the IndiaAI mission, a startup is taking a different approach by exploring how to efficiently run LLMs on CPUs.

Founded on the principle of making AI accessible to all, Ziroh Labs has developed a platform called Kompact AI that runs sophisticated LLMs on widely available CPUs. This removes the need for costly and often scarce GPUs for inference and, soon, for fine-tuning models with up to 50 billion parameters.

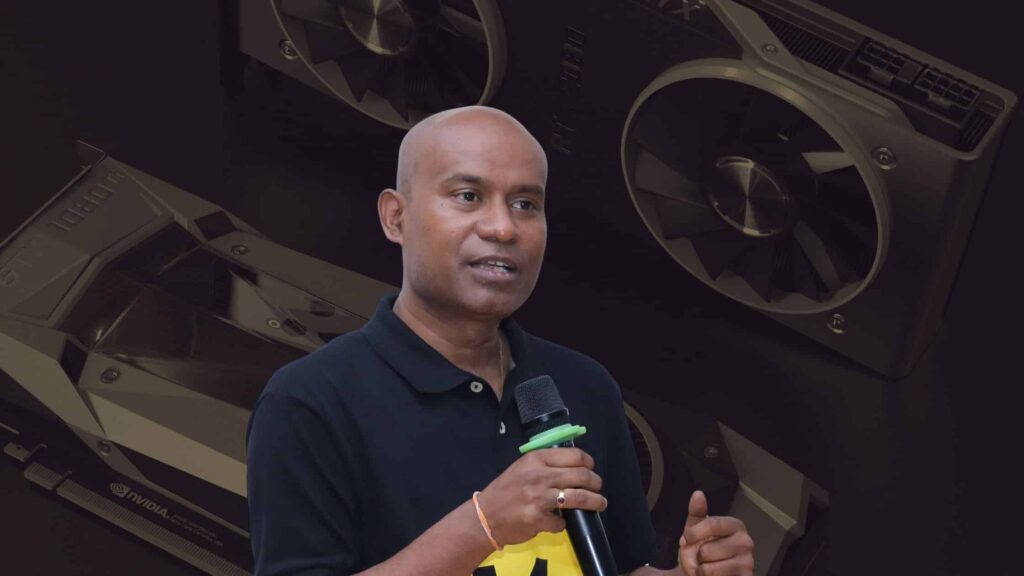

“With a 50 billion-parameter model, no GPU will be necessary during fine-tuning or inference,” said Hrishikesh Dewan, co-founder of Ziroh Labs, in an exclusive interview with AIM. He added that fine-tuning capabilities are already in development and will be released within the next three months, claiming that nobody will need GPUs to train their models anymore.

Ziroh Labs has also partnered with IIT Madras and the IITM Pravartak Technologies Foundation to launch the Centre for AI Research (CoAIR) to solve India’s compute accessibility challenges using AI models optimised for CPUs and edge devices.

Ziroh Labs is based in California, US, and Bengaluru, India. Dewan shared that Kompact AI has been entirely developed in the Bengaluru office — from the core science and engineering to every aspect of its design and execution.

The company has already optimised 17 AI models, including DeepSeek, Qwen and Llama, to run efficiently on CPUs. These models have been benchmarked with IIT Madras, evaluating both quantitative performance and qualitative accuracy.

The Tech of Kompact AI

Dewan explained that LLMs are nothing but mathematical equations that can be run on both GPUs and CPUs. He said the company does not use distillation or quantisation, two compression techniques that are common today. Instead, Ziroh Labs analyses the mathematical foundations of LLMs (linear algebra and probability equations) and optimises these at a theoretical level without altering the model’s structure or reducing its parameter count.

After theoretical optimisation, the model is tuned specifically for the processor it will run on, taking into account the CPU, motherboard, memory architecture (like L1/L2/L3 caches), and interconnects.
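Ziroh Labs has not published how this tuning works, so the following is only a generic illustration of what cache-aware optimisation can look like, not Kompact AI's method. It is a minimal sketch of loop tiling (blocking) for matrix multiplication, the operation that dominates LLM inference. The function names and the `tile` parameter are hypothetical; in practice the tile size would be chosen to fit a given CPU's cache, and the work would be done in optimised native code rather than pure Python.

```python
def matmul_naive(a, b):
    """Reference matrix product: one full dot product per output cell."""
    n, k, m = len(a), len(b), len(b[0])
    out = [[0.0] * m for _ in range(n)]
    for i in range(n):
        for j in range(m):
            s = 0.0
            for p in range(k):
                s += a[i][p] * b[p][j]
            out[i][j] = s
    return out


def matmul_tiled(a, b, tile=32):
    """Same product, computed block by block.

    Each (tile x tile) block of the inputs is reused many times before the
    loops move on, which is the access pattern that keeps data in cache on
    real hardware. `tile` is a hypothetical tuning knob, not a known
    Kompact AI setting.
    """
    n, k, m = len(a), len(b), len(b[0])
    out = [[0.0] * m for _ in range(n)]
    for i0 in range(0, n, tile):
        for p0 in range(0, k, tile):
            for j0 in range(0, m, tile):
                for i in range(i0, min(i0 + tile, n)):
                    row_a, row_out = a[i], out[i]
                    for p in range(p0, min(p0 + tile, k)):
                        a_ip, row_b = row_a[p], b[p]
                        for j in range(j0, min(j0 + tile, m)):
                            row_out[j] += a_ip * row_b[j]
    return out
```

The point of the sketch is that tiling changes only the order in which memory is visited: the model's weights and the arithmetic are untouched, so the result matches the naive version while the hardware is used more efficiently.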

Dewan argued that running an LLM on a CPU is not novel; the real challenge is maintaining quality and achieving usable speed (throughput). He explained that anything computable can run on any computer, but the practicality lies in how fast and how accurately it runs. He said Ziroh Labs has addressed both aspects without compressing the models.

“What is essential, then, that needs to be solved is twofold. One is to produce the desired level of outcome, that is, quality. And two is how fast it will generate the output. So these are the two problems that need to be solved. If you can solve these together, the system becomes usable,” Dewan said.
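The two criteria Dewan describes, output quality and generation speed, can each be reduced to a simple measurement. The sketch below is illustrative only and is not the benchmark used by Ziroh Labs or IIT Madras: `generate` stands in for any model's text-generation function, and exact-match scoring is just one crude proxy for quality. All names here are hypothetical.

```python
import time
from typing import Callable, List, Sequence, Tuple


def measure_throughput(
    generate: Callable[[str, int], List[str]],
    prompt: str,
    max_tokens: int,
) -> Tuple[List[str], float]:
    """Run one generation and return (tokens, tokens per second)."""
    start = time.perf_counter()
    tokens = generate(prompt, max_tokens)
    elapsed = time.perf_counter() - start
    # Guard against a zero reading from a very fast stub or coarse clock.
    rate = len(tokens) / elapsed if elapsed > 0 else float("inf")
    return tokens, rate


def exact_match_rate(outputs: Sequence[str], references: Sequence[str]) -> float:
    """Fraction of outputs identical to their reference answer."""
    if len(outputs) != len(references):
        raise ValueError("outputs and references must have the same length")
    if not references:
        return 0.0
    hits = sum(1 for out, ref in zip(outputs, references) if out == ref)
    return hits / len(references)
```

A system is usable in Dewan's sense only when both numbers are acceptable at once: a fast model with poor answers, or an accurate one that produces a few tokens per minute, fails the test.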

Partnership with IIT Madras

Dewan shared that the partnership with IIT Madras came about through Professor S Sadagopan, the former director of IIIT-Bangalore, who introduced him to Professor V Kamakoti, the current director of IIT Madras.

At the launch event, Sadagopan said, “India too is developing GPUs, but it will take time. Ziroh Labs demonstrates that AI solutions can be developed using CPUs that are available in plenty, without the forced need for a GPU, at a fraction of the cost.”

Dewan added that their collaboration with IIT Madras has a dual purpose—ongoing model validation and the development of real-world use cases. “The idea is to make these LLMs available to startups so that an ecosystem can be built,” he said.

Kamakoti said the initiative reflects a nature-inspired approach. “Nature has taught us that one can effectively acquire knowledge and subsequently infer in only a limited set of domains. Attempts to acquire everything under the universe are not sustainable and bound to fail over a period of time.”

“This effort is certainly a major step in arresting the possible AI divide between one who can afford the modern hyperscalar systems and one who cannot,” he added.

Dewan discussed the diverse range of use cases that have emerged since the launch of Kompact AI. “We’ve received over 200 requests across various segments, including healthcare, remote telemetry, and even solutions for kirana stores,” he said.

“People are also working on creating education software tools and automation systems. Numerous innovative use cases are coming from different industries,” Dewan added.

Take on Big Investments in AI

Microsoft has announced plans to spend $80 billion on building AI data centres, while Meta and Google have committed $65 billion and $75 billion, respectively. When asked whether such massive investments are justified, Dewan pointed to the scale of the models these companies are developing.

“They’re designing huge models… their thesis is that large models will do a lot of things,” he said. While 50 billion parameters may seem like a lot, Dewan noted that in the world of large language models it is relatively modest, citing Grok, which he said has over a trillion parameters, as an example. He added, “They have the money, so they’re doing it. And we have the tech, and we can solve our problems. So everybody will coexist.”

Ziroh Labs currently has a team of eleven people and is bootstrapped. The company was founded in 2016 to address the critical problem of data privacy and security, specifically focusing on developing privacy-preserving cryptographic systems that could be used at scale. Dewan said they are still working on this. “We will bring privacy to AI in 2026, because ultimately, AI must have privacy.”