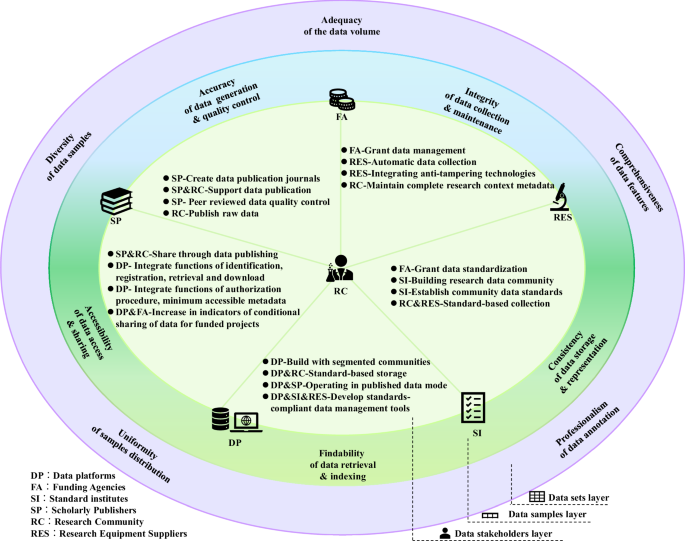

Accuracy of data generation and quality control

Data serves as the medium through which researchers explore and understand the physical world, and the accuracy of scientific data is crucial for the reliability of research. In the traditional research paradigm, scientists formulate hypotheses based on known or empirical physical models, generate scientific data with experimental or computational tools, analyze and interpret those data, and then refine existing knowledge models. AI-driven scientific research diverges from this approach. Instead of relying solely on established empirical models, AI can exploit its strength in high-dimensional analysis to construct new knowledge models from the intrinsic correlations within scientific data, potentially accelerating research beyond the slower pace at which traditional physical models develop. Nevertheless, the reliability of AI knowledge models built entirely on scientific data hinges on how accurately that data represents the physical world. Accurate data also provides a crucial entry point for research on interpretability in later stages of AI model development.

The accuracy of materials data is closely tied to how the data are produced, including the measurement tools, measurement conditions, operator practice, data processing, and evaluation methods. Because scientific data production is complex and influenced by many factors, it is difficult to develop general data output specifications that effectively guarantee accuracy. In the current landscape of data governance, researchers' comprehensive control over the relevant variables, combined with domain expertise, is therefore paramount in evaluating and verifying the reliability and repeatability of data.

Here we discuss, from a macro perspective, a mechanism for ensuring the accuracy of materials data. Each piece of materials data generated under rigorous scientific conditions is a phenotypic reflection of the material's intrinsic properties and serves as a valuable unit of identification that allows AI models to fit the real physical world. In this data-driven mode of research, each piece of accurate data has its own academic value as a coordinate point on a map of the material's intrinsic laws, whether or not it aligns with the researcher's personal research goals. We call for a data-centric academic publishing ecosystem that includes peer-reviewed assessment of scientific data accuracy and corresponding publishing mechanisms; the journal Scientific Data is an innovative example in this regard. In this ecosystem, each piece of accurate scientific data would be recognized for its academic value and counted as an important factor in evaluating scientific contributions. The evaluation would focus on the objectivity and novelty of the data, motivating researchers to produce and share more high-quality data.

Data publication has been an ongoing topic of discussion within research communities16,17,18. A survey16 of 250 researchers in the sciences and social sciences on data publication and peer review revealed several key findings. Researchers wanted data to be disseminated through databases or repositories to improve accessibility. Few respondents expected published data to be peer-reviewed, but peer-reviewed data enjoyed much greater trust and prestige. Adequate metadata was recognized as crucial, and nearly all respondents expected peer review to include an evaluation of the data files. Citation and download counts were considered important indicators of data impact. These results can help publishers design data publication formats that meet community expectations. However, the challenges of data peer review should not be overlooked. Although most researchers consider peer review critical for ensuring data quality, evaluating data quality at scale remains complex. The challenges of data peer review include:

- Resource intensiveness: Researchers must invest additional effort to meet standardized data publication requirements, for what may be limited tangible benefit.
- Specialized knowledge: Unlike the criteria for reviewing traditional papers, criteria for reviewing data are complex to define and require specialized domain knowledge. In addition, the volume of data may exceed reviewers' capacity.
- Heterogeneous materials data: Materials data, primarily collected and managed by individual researchers, vary in format and quality, which complicates understanding and comparison during review.
- Verification complexity: Validating data, for example by repeating measurements or calculations, is costly in time and resources.

As discussed earlier, assessing the quality of materials data for AI applications should prioritize both the objectivity and the novelty of the data. Furthermore, scalable and maintainable data peer review depends on many aspects of the data ecosystem. We suggest the following combination of measures to address these challenges:

- Establishing consensus-based data standards: Engage stakeholders, including materials research equipment and software providers, researchers, publishers, and data repositories, to adopt unified formats for data collection, management, transmission, review, and storage. Consensus on data formats will facilitate the understanding, evaluation, comparison, and integration of data.
- Integrating anti-tampering technologies: Incorporate tamper-evident technologies (e.g., cryptographic hash values, which can be recorded on a blockchain) into standardized data formats. Equipment or software that generates data should create tamper-evident markers and attach them to the original data in standardized formats, supporting assessment of data authenticity.
- Maintaining researcher practices while enhancing data publication: Researchers can continue to use raw data to support the scientific findings reported in traditional manuscripts. At the same time, they could publish complete, standardized raw data and use metrics such as publication volume, citation rates, and download rates as indicators of academic impact. This approach encourages researchers to publish data, fosters a data publication community, and provides a fairer evaluation of contributions, moving away from extreme winner-takes-all academic norms.
- Using automated programs for peer review: Publishing institutions should use data repositories as the conduit for receiving, reviewing, and publishing data. Automated screening should pre-assess data for compliance with format requirements and for valid authenticity markers, and should compare submissions with existing data in the repository to determine whether they are novel.
- Developing visual data review processes: Group data that pass automated screening into clusters of similar data, and assess whether each dataset reflects the characteristics of its object and agrees with population trends. Specialized data that require manual review can then be handled efficiently, with experienced reviewers deciding whether to approve publication.
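The tamper-evident marker and automated pre-screening steps above can be illustrated with a minimal Python sketch. It assumes a hypothetical set of required metadata fields (`REQUIRED_FIELDS`) and uses a SHA-256 digest, computed when the data are generated, as the tamper-evident marker; anchoring that digest on a blockchain or in a signed log is outside the sketch. Novelty is approximated only by rejecting exact duplicates, which is far weaker than the comparison against population trends described above.

```python
import hashlib
import json
from typing import List, Set

# Hypothetical minimum metadata; a community standard would define the real list.
REQUIRED_FIELDS = {"instrument", "method", "sample_id", "conditions"}


def canonical_bytes(raw: bytes, metadata: dict) -> bytes:
    """Serialize metadata deterministically and prepend it, length-prefixed, to the raw data."""
    meta = json.dumps(metadata, sort_keys=True, separators=(",", ":")).encode("utf-8")
    return len(meta).to_bytes(8, "big") + meta + raw


def make_marker(raw: bytes, metadata: dict) -> str:
    """Tamper-evident marker: SHA-256 digest created when the data are generated."""
    return hashlib.sha256(canonical_bytes(raw, metadata)).hexdigest()


def prescreen(submission: dict, known_markers: Set[str]) -> List[str]:
    """Return a list of problems; an empty list means the submission passes pre-screening."""
    problems: List[str] = []
    metadata = submission.get("metadata", {})
    # 1. Format compliance: are the required metadata fields present?
    missing = REQUIRED_FIELDS - set(metadata)
    if missing:
        problems.append("missing metadata: " + ", ".join(sorted(missing)))
    # 2. Authenticity: does the marker still match the submitted bytes and metadata?
    marker = make_marker(submission["raw"], metadata)
    if marker != submission.get("marker"):
        problems.append("marker mismatch: data or metadata changed after generation")
    # 3. Crude novelty check: reject records already present in the repository.
    if marker in known_markers:
        problems.append("duplicate: identical record already in repository")
    return problems
```

Any change to either the raw bytes or the metadata changes the digest, so a repository can detect modification after generation without trusting the submitter. It cannot, however, detect data that were already wrong when they were generated, which is why the manual, cluster-based review step remains necessary.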

Integrity of data collection and maintenance

AI's demand for large datasets, together with the scarcity of scientific data, makes it increasingly urgent for researchers to use multi-source data. Only when the background, conditions, and results of research data are captured in a self-explanatory unit of meaning can data flow freely and independently within a field and be repeatedly accessed, understood, and correctly used by different researchers. These rich details of data production will also provide fine-grained guidance when AI models are verified against, and applied to, the physical world.

At the data collection stage, however, frontline materials researchers often collect only the data fragments they are interested in; for example, they save only the result images of a particular characterization without recording the context and conditions under which the images were generated. Such data can be understood only by their producers, who interpret them in light of their own prior research; other users cannot properly understand or reuse them. Missing context also undermines the correct interpretation of data selected to build datasets and the accurate reproduction of model results in reality.

The evolving demands of data collection and description reflect the expanded scope of data utilization and value pathways in the context of data-driven research. As previously mentioned, each data sample represents a discrete reflection of the target group under study. These samples not only serve the short-term, specific research needs of data producers but can also be repeatedly accessed and integrated by different researchers into various scales of target data clusters. Leveraging AI to explore these data clusters allows for insights into the inherent properties of the research subjects.

However, implementing the latter approach conveniently and widely requires a shift in mindset among all data stakeholders, who must adjust data governance practices at their respective stages to facilitate data flow and use. For instance, revising how data collection is described ensures data completeness and long-term reusability, rather than merely burdening researchers with new data governance tasks within the traditional research paradigm.

To meet the data integrity requirements of AI-driven research, we suggest the following portfolio of reform measures:

- Funding agencies: When defining project metrics and allocating funds, funding agencies should help applicants understand the long-term, macroscopic value of data in a data-driven context. Establishing assessment criteria for data collection methods and metadata descriptions ensures sustained support for data management across projects.
- Research equipment and software providers: Equipment and software providers should adapt their data collection and export services to researchers' short-term and long-term data usage patterns. Presenting the detailed context of data production (including device, environmental, and technical conditions) to users, and opening data collection interfaces to automated programmatic access19,20,21,22,23, can reduce the burden of describing data integrity during data processing.
- Researchers: Embed relevant metadata (such as researcher information, study subjects, and research objectives) in collected data. When using the data, researchers can selectively analyze the areas of interest. During data storage, transmission, and publication, keeping the original data format together with comprehensive metadata descriptions preserves integrity.
- Data repositories and publishing institutions: Establish metadata description workflows that promote data integrity24, and encourage the submission of original data with complete metadata descriptions when accepting data for publication.
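As a minimal sketch of the researcher-side recommendation, the Python code below bundles an unmodified raw file with its metadata into a single JSON document, so that context travels with the data through storage, transmission, and publication. The `Provenance` class and its field names are hypothetical and chosen to mirror the examples in the text; a real workflow would take field names from a community standard and would likely use a container format rather than Base64-encoded JSON for large files.

```python
import base64
import json
from dataclasses import asdict, dataclass, field
from typing import Tuple


@dataclass
class Provenance:
    # Hypothetical field names mirroring the examples in the text.
    researcher: str
    subject: str                  # material or sample studied
    objective: str                # why the data were collected
    instrument: str
    environment: dict = field(default_factory=dict)  # e.g. temperature, atmosphere
    settings: dict = field(default_factory=dict)     # technical measurement conditions


def pack(raw: bytes, prov: Provenance, original_format: str) -> str:
    """Bundle the unmodified raw file and its metadata into one JSON document."""
    return json.dumps(
        {
            "metadata": asdict(prov),
            "original_format": original_format,  # e.g. the instrument's native file type
            "raw_base64": base64.b64encode(raw).decode("ascii"),
        },
        indent=2,
    )


def unpack(doc: str) -> Tuple[bytes, Provenance, str]:
    """Recover the raw bytes exactly as stored, plus the metadata and format label."""
    obj = json.loads(doc)
    raw = base64.b64decode(obj["raw_base64"])
    return raw, Provenance(**obj["metadata"]), obj["original_format"]
```

For example, `pack(raw_bytes, Provenance("A. Researcher", "sample S1", "phase identification", "diffractometer X"), "vendor binary")` yields one self-describing document; `unpack` returns the original bytes unchanged, so other users can still apply their own analysis to the untouched raw data.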

Consistency of data storage and representation

AI can process structured, semi-structured, and unstructured data simultaneously. In specific model construction, however, data must conform to particular formats and organizational schemes so that AI can accurately and efficiently compare data and identify the relationships and patterns hidden among them. When datasets are constructed from multiple sources, the content and organizational structure of the data are often presented inconsistently, which directly reduces the efficiency of data integration and the accuracy of AI models. Recent advances in large language models (LLMs) have opened new avenues for handling heterogeneous data. The most mature applications currently use LLMs to assist in literature reading, summarization, and analysis25, and researchers are also exploring LLMs for extracting relevant scientific information from vast amounts of unstructured scientific literature26,27. However, because LLMs generate text, the effectiveness of information extraction depends closely on the composition and distribution of their training data. Extracted information must additionally be verified against the original text, which remains a significant workload. Moreover, data extracted by LLMs from unstructured text are predictive outputs shaped by the models' training data. Unlike traditional experimental or computational data, LLM-derived data are inherently indirect and predictive, and when used to train AI applications for scientific research they may undermine the reliability and interpretability of the resulting models. To enable seamless integration of diverse data sources in AI-driven research, it is essential to establish community-consensus data standards and to normalize data representations. This aligns with the FAIR principles, which promote interoperability for both humans and machines. Such standardized data formats will serve as reference benchmarks for data collection, storage, transmission, and integration within the scientific community, supporting the training, validation, and optimization of scientific AI models.
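To make normalization of data representations concrete, the Python sketch below maps records from two hypothetical sources, which use different field names and units, onto one shared representation. The source names, field names, and conversion table are invented for illustration, as are the numbers in the usage note; in practice the mapping would be derived from a community-consensus standard. Fields without a mapping are reported rather than silently dropped, so a curator can extend the table.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical mapping tables: source-specific field -> (standard field, unit converter).
Converter = Callable[[float], float]
MAPPINGS: Dict[str, Dict[str, Tuple[str, Converter]]] = {
    "lab_a": {
        "Tm_C": ("melting_point_K", lambda v: v + 273.15),       # degrees Celsius -> kelvin
        "hardness_MPa": ("hardness_GPa", lambda v: v / 1000.0),  # MPa -> GPa
    },
    "lab_b": {
        "melting_point": ("melting_point_K", lambda v: v),       # already in kelvin
        "hardness": ("hardness_GPa", lambda v: v),               # already in GPa
    },
}
# Fields copied unchanged because every source already uses the standard name.
PASSTHROUGH = {"sample_id", "formula"}


def normalize(source: str, record: dict) -> Tuple[dict, List[str]]:
    """Return (standardized record, list of fields that could not be mapped)."""
    mapping = MAPPINGS[source]
    out: dict = {}
    unmapped: List[str] = []
    for key, value in record.items():
        if key in PASSTHROUGH:
            out[key] = value
        elif key in mapping:
            std_key, convert = mapping[key]
            out[std_key] = convert(value)
        else:
            unmapped.append(key)
    return out, unmapped
```

With made-up inputs, `normalize("lab_a", {"sample_id": "A-1", "Tm_C": 1000.0})` and `normalize("lab_b", {"sample_id": "B-7", "melting_point": 1273.15})` both produce a `melting_point_K` field in kelvin, so the two records can be compared directly. Keeping conversions in a table rather than in ad hoc scripts makes the normalization itself reviewable.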

However, data standardization remains in its infancy in the materials domain, and several prominent challenges hinder its implementation:

- Materials research involves diverse experimental and computational processes that produce many types of materials data in varying formats, so a unified standard covering all materials data remains elusive.
- Insufficient funding and research attention to materials data standardization hinder progress.
- Materials data standardization spans multiple lifecycle stages, and responsibility for driving it has yet to be clearly assigned.
- Adopting and promoting data standards imposes a heavy data processing burden given the sheer volume of materials data.

To address these challenges, recommended reform measures include:

- Modular approach: Given the long-tail nature of materials data, incremental standardization through modular approaches is advisable. Establishing standard modules for specific aspects of materials research (e.g., synthesis, testing, or data processing) allows gradual progress. Representative examples include the Crystallographic Information Framework (CIF, https://www.iucr.org/resources/cif/) for crystallography and NeXus (https://www.nexusformat.org/) for experimental data in neutron, X-ray, and muon science. Another noteworthy effort is the universal framework developed by the Materials Genomics Committee of the China Society of Testing Materials (CSTM/FC97), which uses modular construction methods and adheres to the FAIR principles. This framework provides practical guidance and case studies for materials data standardization (http://www.cstm.com.cn/article/details/390ce11f-41a2-4d01-8544-04012bb13782/).
- Community collaboration: Engage all stakeholders in materials data to form domain-specific data standardization communities and leading organizations. Notably, the FAIRmat initiative8 (https://www.fairmat-nfdi.eu/fairmat/) could serve as a reference model for community building.
- Tool development: Develop automated data collection tools, storage platforms, and publication systems aligned with data standards to ease adoption and promote effective dissemination.
- Funding support: Funding agencies should recognize the immense value of data standardization for future research and proactively support materials data standardization projects.
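The modular idea can be sketched in a few lines of Python. The module names and required fields below are hypothetical placeholders, not taken from CIF, NeXus, or the CSTM framework; the sketch only shows that each module validates its own section of a record, and that a new module can be registered later without changing existing ones.

```python
from typing import Dict, Iterable, List

# Hypothetical standard modules and the fields each one requires.
MODULES: Dict[str, List[str]] = {
    "core": ["identifier", "material", "creator"],
    "synthesis": ["route", "precursors", "temperature_K"],
    "test": ["technique", "instrument", "conditions"],
    "processing": ["software", "steps"],
}


def register_module(name: str, required_fields: Iterable[str]) -> None:
    """Add a new standard module without touching the existing ones."""
    if name in MODULES:
        raise ValueError(f"module {name!r} is already defined")
    MODULES[name] = list(required_fields)


def validate(record: Dict[str, dict]) -> List[str]:
    """Check every module section in a record; the 'core' module is always required."""
    errors: List[str] = []
    sections = dict(record)
    sections.setdefault("core", {})  # a missing core section is reported field by field
    for name, section in sections.items():
        if name not in MODULES:
            errors.append(f"unknown module: {name}")
            continue
        for required in MODULES[name]:
            if required not in section:
                errors.append(f"{name}.{required} missing")
    return errors
```

A record that uses only the `core` and `test` modules validates without needing any synthesis fields, which is what allows standardization to proceed one research aspect at a time.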

Findability of data retrieval and indexing

Building large datasets requires rapid querying and retrieval of the necessary data samples. The FAIR Principles clearly define the minimum requirements for data findability: assigning persistent unique identifiers to (meta)data, describing (meta)data with a variety of accurate and relevant attributes, and registering (meta)data in a searchable resource. Meeting these requirements depends on data-sharing platforms and on specialized research communities.
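The three findability requirements can be illustrated with a toy in-memory registry in Python. This is a sketch under stated assumptions: the `example-pid` prefix is hypothetical, and a UUID only guarantees uniqueness, whereas real persistent identifiers (such as DOIs) also depend on a resolution service that keeps them resolvable over time. The attribute index stands in for the searchable resource.

```python
import uuid
from typing import Dict, List, Set, Tuple


class Registry:
    """Toy searchable resource: assigns identifiers and indexes metadata attributes."""

    def __init__(self, prefix: str = "example-pid"):  # hypothetical identifier prefix
        self.prefix = prefix
        self.records: Dict[str, dict] = {}
        self.index: Dict[Tuple[str, str], Set[str]] = {}

    def register(self, metadata: dict) -> str:
        """Assign a unique identifier, store the metadata, and index every attribute."""
        pid = f"{self.prefix}/{uuid.uuid4()}"
        # The identifier is stored inside the metadata so the two never travel apart.
        self.records[pid] = dict(metadata, identifier=pid)
        for key, value in metadata.items():
            self.index.setdefault((key, str(value).lower()), set()).add(pid)
        return pid

    def search(self, **criteria) -> List[str]:
        """Return identifiers whose metadata match all criteria (case-insensitive)."""
        if not criteria:
            return []
        matches = [self.index.get((k, str(v).lower()), set()) for k, v in criteria.items()]
        return sorted(set.intersection(*matches))
```

For instance, after registering two records about the same material measured by different techniques, `search(material="TiO2", technique="XRD")` returns only the matching identifier; the richer the indexed attributes, the more precisely other researchers can locate data.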

Data platforms can integrate identification, registration, and publication to provide open access to data, but it is difficult for individual researchers to afford to build and operate a data platform themselves. Although the materials research community has established many data-sharing platforms, such as the Novel Materials Discovery Laboratory (NOMAD, https://nomad-lab.eu/) and the Materials Data Facility (MDF, https://www.materialsdatafacility.org/), researchers still often do not know where or how to search for data of interest, especially experimental data. This suggests that these data platforms have not yet been seamlessly integrated into materials science research communities in a way that effectively promotes discovery within the field.

The integration of data platforms with research communities can draw inspiration from the operational models of academic conferences and journals. In the materials science research community, a range of specialized materials topics (such as steel materials, nanomaterials, and catalytic materials) naturally gives rise to dedicated academic conferences and journals. These platforms reflect certain research trends within the community and indicate a substantial number of researchers supporting these themes. Researchers rely on these conferences and journals to stay updated on the latest advancements, report their findings, and adjust their future research directions. Consequently, these platforms have become organically integrated into the academic field, ensuring sustainable development.

Similarly, data platforms can be designed and maintained to emulate the functions of academic conferences and journals within research communities. By recognizing the academic value of scientific data, defining the data themes they accept, and providing a venue for researchers to report novel scientific data and access existing datasets, data platforms can incentivize and acknowledge researchers. This approach encourages the integration of data platforms into research communities and fosters the discovery and exchange of data of interest. As such a platform operates under agreed data publication standards or norms, the datasets for specific material systems will gradually grow in diversity and quantity, meeting researchers' data discovery needs and supporting the widespread adoption of AI-driven research in the field.

Combined recommendations to promote the integration of data platforms into research communities and establish mechanisms for data discovery and sharing include:

- Forming scientific data communities: Building on current thematic forums or research associations (e.g., semiconductor materials, nanomaterials, biomaterials), establish scientific data communities within each field to discuss data classification and develop data standards.
- Creating data publication journals: Establish new data publication journals backed by the field's thematic journals, together with corresponding data publication platforms. Their scope would include previously unpublished data on specific materials, stored according to field consensus standards.
- Integrating basic functions: Ensure that these thematic data platforms include essential functions such as data identification, registration, uploading, querying, and downloading, providing a public digital community for data management and communication among researchers.
- Organizing data publication forums: Organize sub-forums on data publication alongside the field's periodic academic forums. These sub-forums would focus on reporting novel scientific datasets and deepening the understanding of field-specific data, while also covering AI applications built on published datasets, to attract broad participation from researchers.
- Establishing sustainable business models: Develop sustainable business models that build on the high-standard data exploration and AI application support the data platform provides, ensuring long-term maintenance and operation of the platform.

Accessibility of data access and sharing

Building and processing large datasets requires that researchers can access data easily, and this is a major challenge for large-scale data sharing. Under the accessibility requirement of the FAIR principles, data should be retrievable by their identifier using a standardized communication protocol that is open, free, and universally implementable, with authentication and authorization where necessary. At a minimum, metadata should remain accessible even when the data are unavailable.
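These retrieval rules can be sketched as a small Python access service. It is illustrative only: the class and field names are hypothetical, the integer status codes merely imitate HTTP, and a real platform would serve this over an open protocol such as HTTPS with a proper authentication system. The behaviour it demonstrates is that metadata are always returned for a known identifier, while data are returned only if they still exist and the caller is permitted to see them.

```python
from dataclasses import dataclass, field
from typing import Dict, Optional, Set


@dataclass
class Entry:
    metadata: dict
    data: Optional[bytes]              # None once data are withdrawn or lost
    open_access: bool = True
    authorized_users: Set[str] = field(default_factory=set)


class AccessService:
    """Hypothetical resolver: metadata are always public, data may be restricted."""

    def __init__(self) -> None:
        self.entries: Dict[str, Entry] = {}

    def add(self, pid: str, entry: Entry) -> None:
        self.entries[pid] = entry

    def resolve(self, pid: str, user: Optional[str] = None) -> dict:
        entry = self.entries.get(pid)
        if entry is None:
            return {"status": 404}                       # unknown identifier
        response = {"status": 200, "metadata": entry.metadata}
        if entry.data is None:
            response["data_status"] = "unavailable"      # metadata still returned
        elif entry.open_access or (user is not None and user in entry.authorized_users):
            response["data"] = entry.data
        else:
            response["data_status"] = "authorization required"
        return response
```

Separating the always-public metadata from the optionally restricted data lets owners control access without making their datasets undiscoverable.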

The technical requirements of the accessibility principle are already well established in the construction of data platforms, and integrating these functions into platforms for specific materials subfields can meet the minimum requirements for data management. However, the key to data accessibility is the willingness of data owners to share, and this willingness is the main obstacle to building domain datasets at scale. Data is a new and distinctive factor of production, and most fields have not yet developed well-defined mechanisms for reconciling ownership, usage rights, and revenue rights, which makes it difficult to run domain data sharing systematically. Blockchain methods are one technical option for ensuring data traceability and supporting data transactions28. However, researchers still find it inconvenient to obtain and integrate domain data through such methods, owing to technical complexity and privacy considerations.

From the researcher’s perspective, the factors that influence their willingness to share scientific data include the following:

- Intellectual property protection: Researchers worry that sharing data may allow others to publish related findings first and may diminish the commercial value and patent potential of the data, weakening their own competitive standing.
- Lack of incentives: The scientific community has weak reward mechanisms for data sharing, and dedicated incentives are largely absent.
- Insufficient infrastructure support: Researchers often lack the resources to establish platforms for open data access and face a shortage of user-friendly infrastructure for data sharing.
- Misuse of data: Researchers are concerned that their data might be misused in ways that harm their academic reputation.
- Cultural barriers: A culture of data sharing has not yet been established, and researchers may be reluctant to share data out of habit.

To address these challenges and encourage researchers to share scientific data, the following measures are suggested:

- Academic recognition: Publishing institutions should recognize the academic value of data on a par with traditional papers, establishing data ownership through identifiers and citations and providing academic impact incentives, such as impact factors and citation metrics, for shared data. Researchers could additionally be rewarded with data usability credits proportional to the amount of data they share, incentivizing further data publication and sharing.
- Infrastructure development: Academic institutions should build data-sharing platforms within specific thematic materials research communities. These platforms should integrate data storage, identification, and controlled access, providing researchers with user-friendly infrastructure for data sharing.
- Quality control: Researchers could share tamper-evident original data generated by objective equipment, in line with the recommendations on the accuracy of data generation and quality control. This approach minimizes the influence of human factors on the data, preserves its objectivity, and reduces the risk of misinterpretation by other users, thereby protecting the reputation of data providers.
- Establishment of data sharing communities: One viable approach is to model data-sharing communities on the Human Genome Project, dividing tasks related to specific material systems among members and then sharing and using the data within the community. An example of accelerating materials research through division of labor and data sharing can be found in the literature29.
- Funding agency requirements: Funding agencies could require data sharing within a defined timeframe and scope for the research projects they support, fostering a culture of data openness.