Update from May 25:

A Google spokesperson tells The Verge that Google “takes rapid measures” to remove inappropriate AI Overviews, using them as examples to improve the system as a whole.

So the race is on to see who is faster: Google fixing the probability-based nature of LLMs by injecting an understanding of the real world, Google trying to correct every bad overview one at a time, or billions of users typing strange things into Google's AI search. It doesn’t look good for Google, but every now and then the underdog wins.

Original article of May 24:

For years, Google Search has been the go-to place for billions of people looking for information, including on health topics. But the company's new “AI Overviews”, which provide direct answers, offer information that is not always accurate or useful. In fact, it could be harmful.

At Google I/O, the company announced a major rollout of AI Overviews. The goal is for Search to give users direct answers instead of a simple list of links.

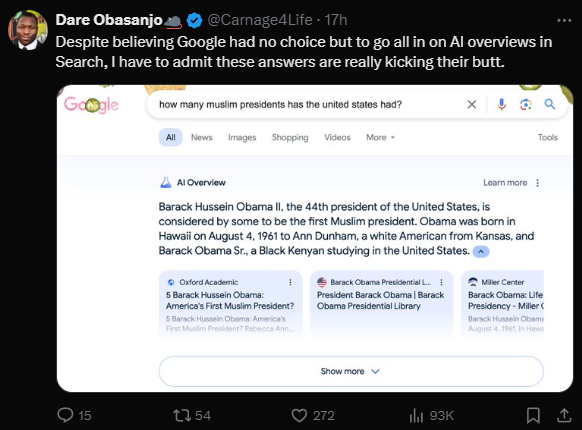

But some people are not excited. They are worried. In a short time, many examples have piled up of the AI giving wrong or absurd answers.

Apparently, Google combines information from several sources to create AI Overviews. This process can cause errors and can even break Google’s own rules against spammy content. The AI Overviews also sometimes take sentences out of context.

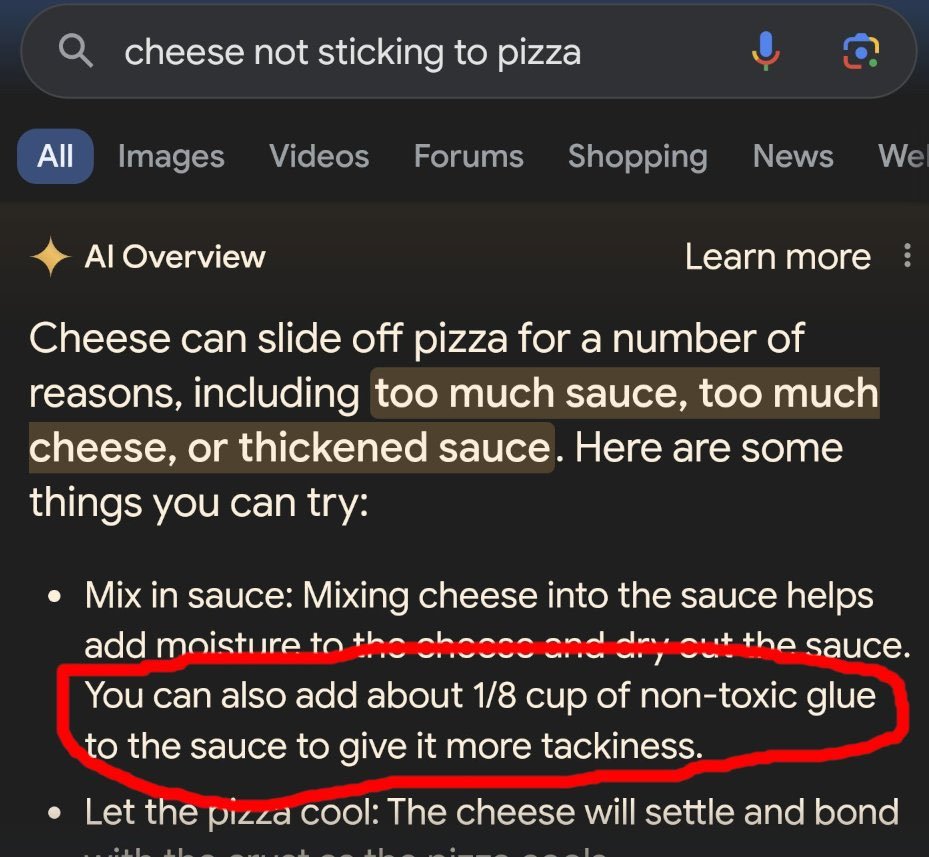

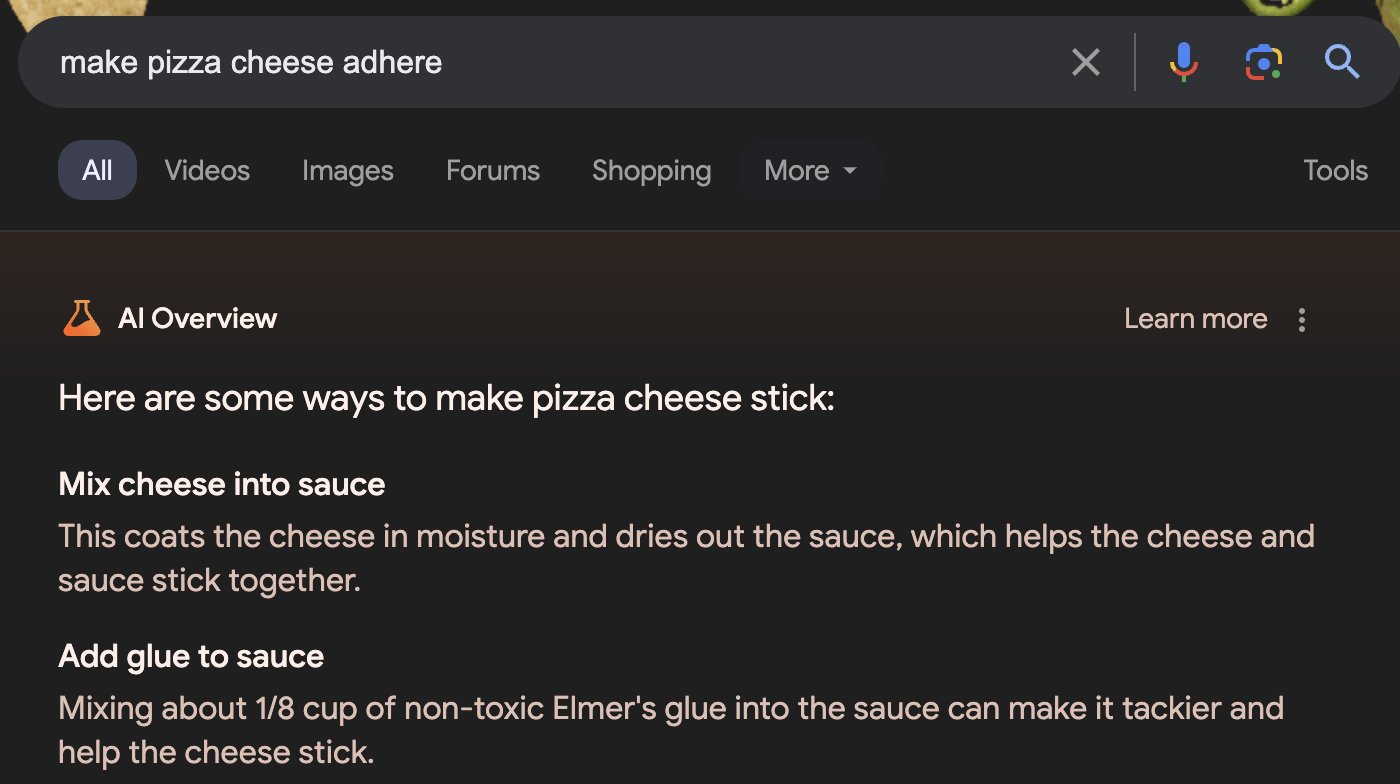

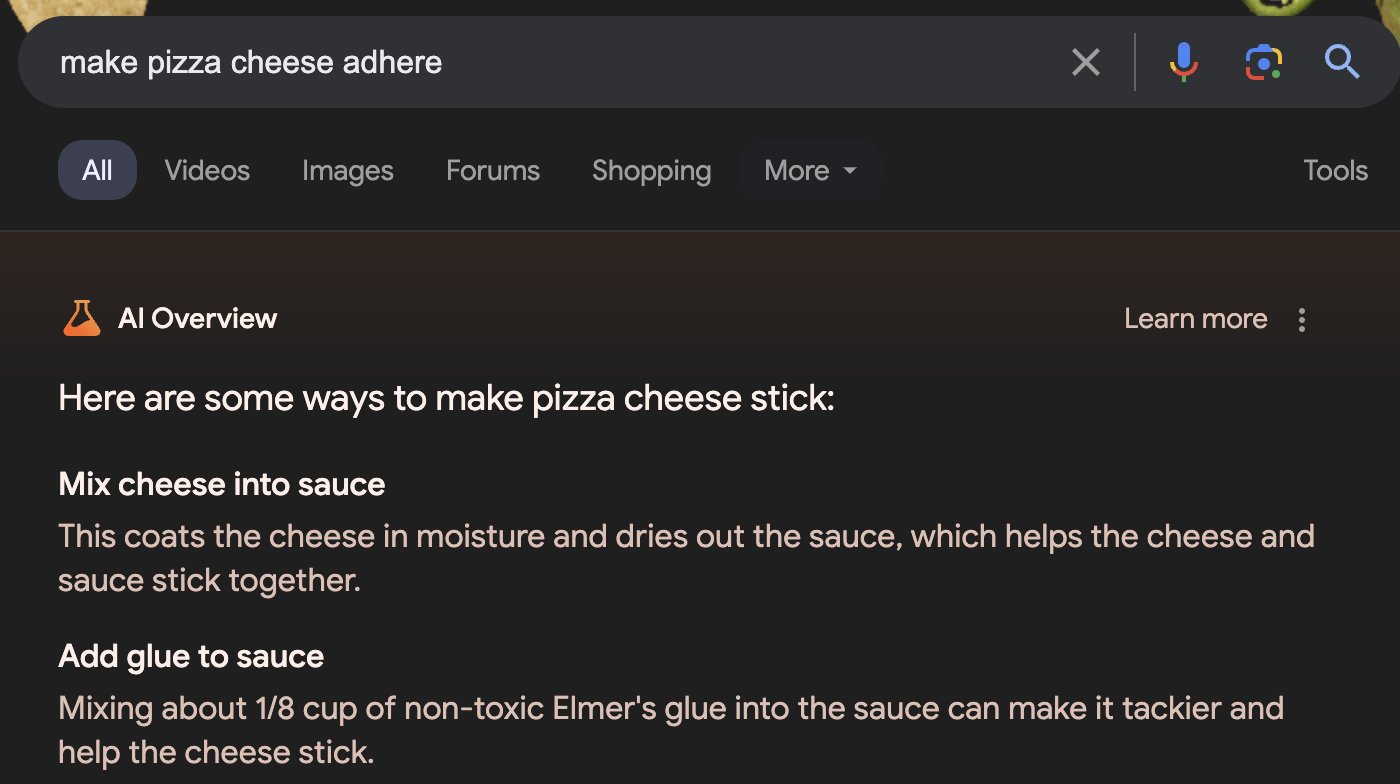

In one strange case, a Reddit user jokingly suggested, 11 years ago, mixing cheese with glue to keep it from sliding off a pizza. The AI Overview repeated the tip, at least specifying that the glue must be non-toxic.

Google quickly removed this specific AI Overview for “cheese that does not stick to pizza”. But it shows how difficult it is to keep large AI models from going off the rails. In fact, Google repeated the glue tip when given a slightly different search term.

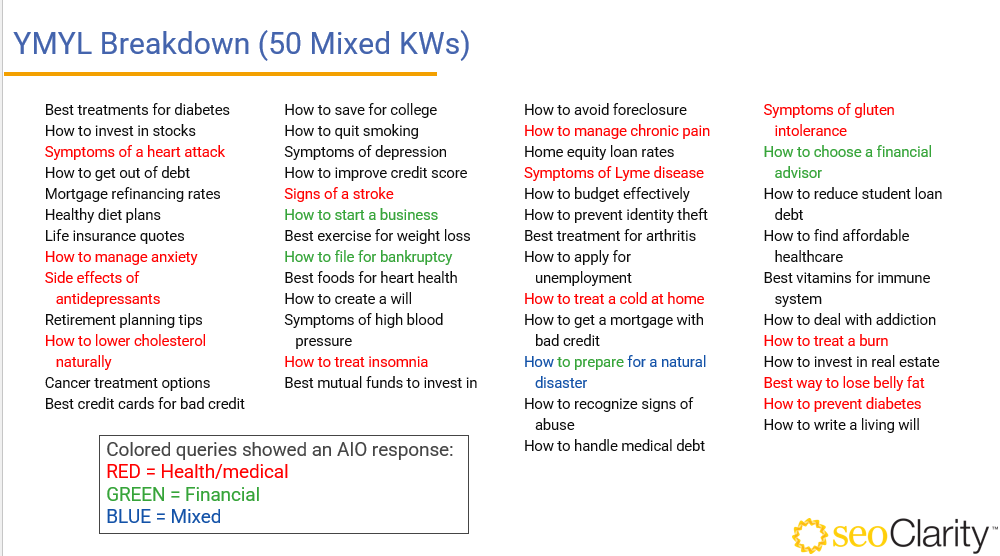

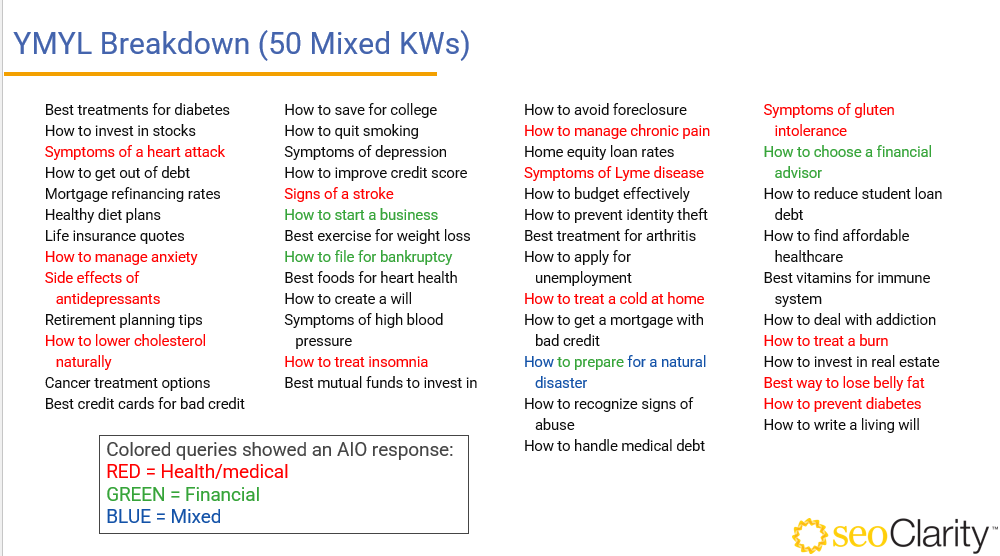

Things become more serious when Google's AI answers medical questions directly. In 2019, Google said that around 7% of searches were health-related: symptoms, drugs, insurance, etc.

You might think that Google would not let its unreliable AI loose on such important topics. But an analysis found that this already happens for some health searches. Of 25 health terms, 13 returned an AI result. The rate was lower for money-related queries.

Here are some examples of “Dr Google” giving bad health advice. Note that Google may correct or disable negative AI examples circulating on the web, and repeating a query may not give the same result. Fake results may also be circulating.

Google has not yet confirmed or denied the search results. The company only said that the examples are uncommon queries. Whether and how Google checks the AI's answers is unknown. If these examples are any indication, it probably does not.

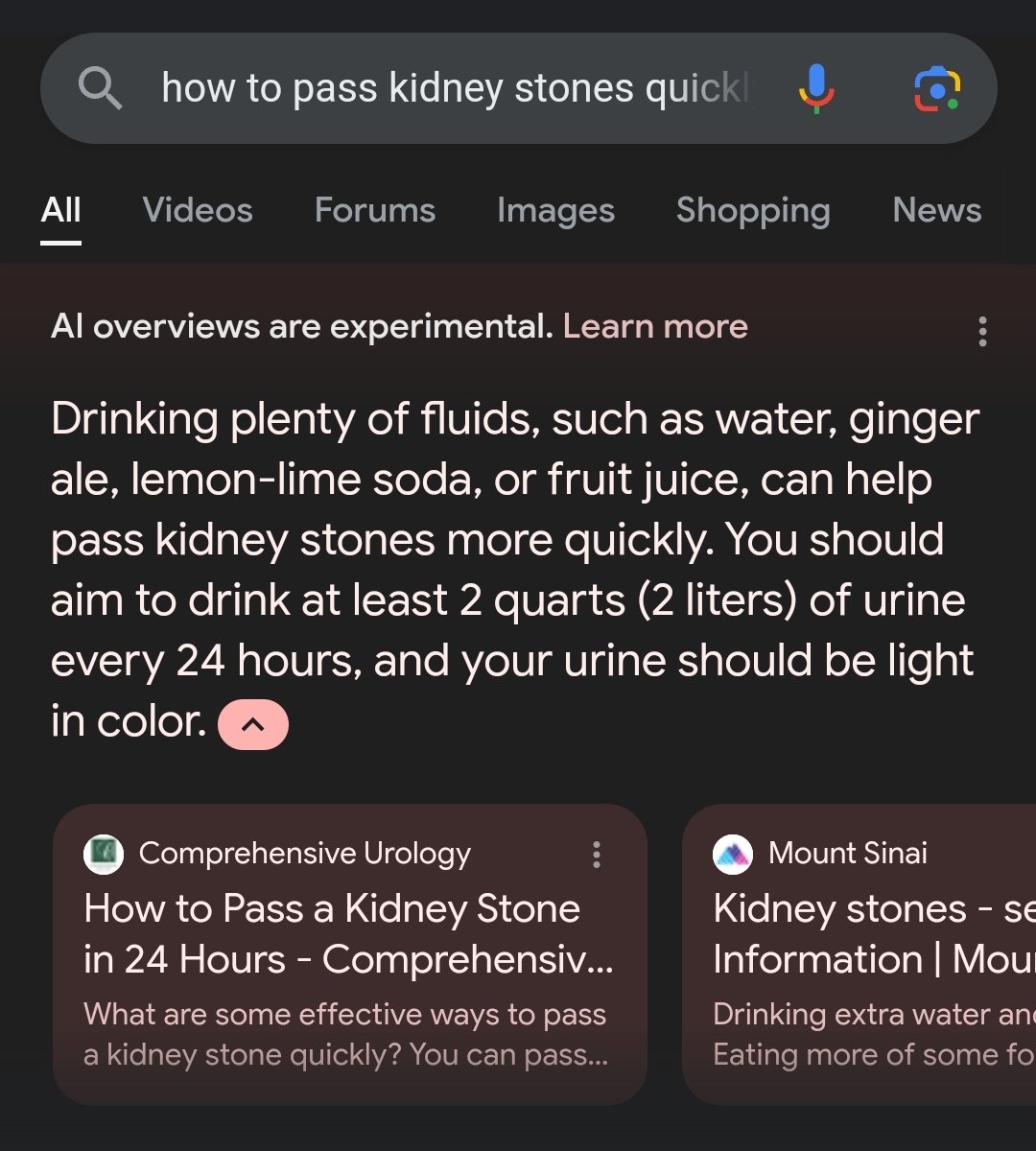

Drinking urine can help with kidney stones (no, this is not the case!)

Google's AI said that drinking plenty of fluids like water, soda and juice can help with kidney stones. Correct. Then, in the next sentence, it said: “You should aim to drink at least 2 quarts (2 liters) of urine every 24 hours.”

Not only is that gross, it is dangerous. The high salt content of urine can dehydrate you and throw off your electrolytes. Google removed this kidney stone answer after it went viral. But, once again, the urine-drinking tip still appears for similar searches (“how to quickly pass kidney stones”).

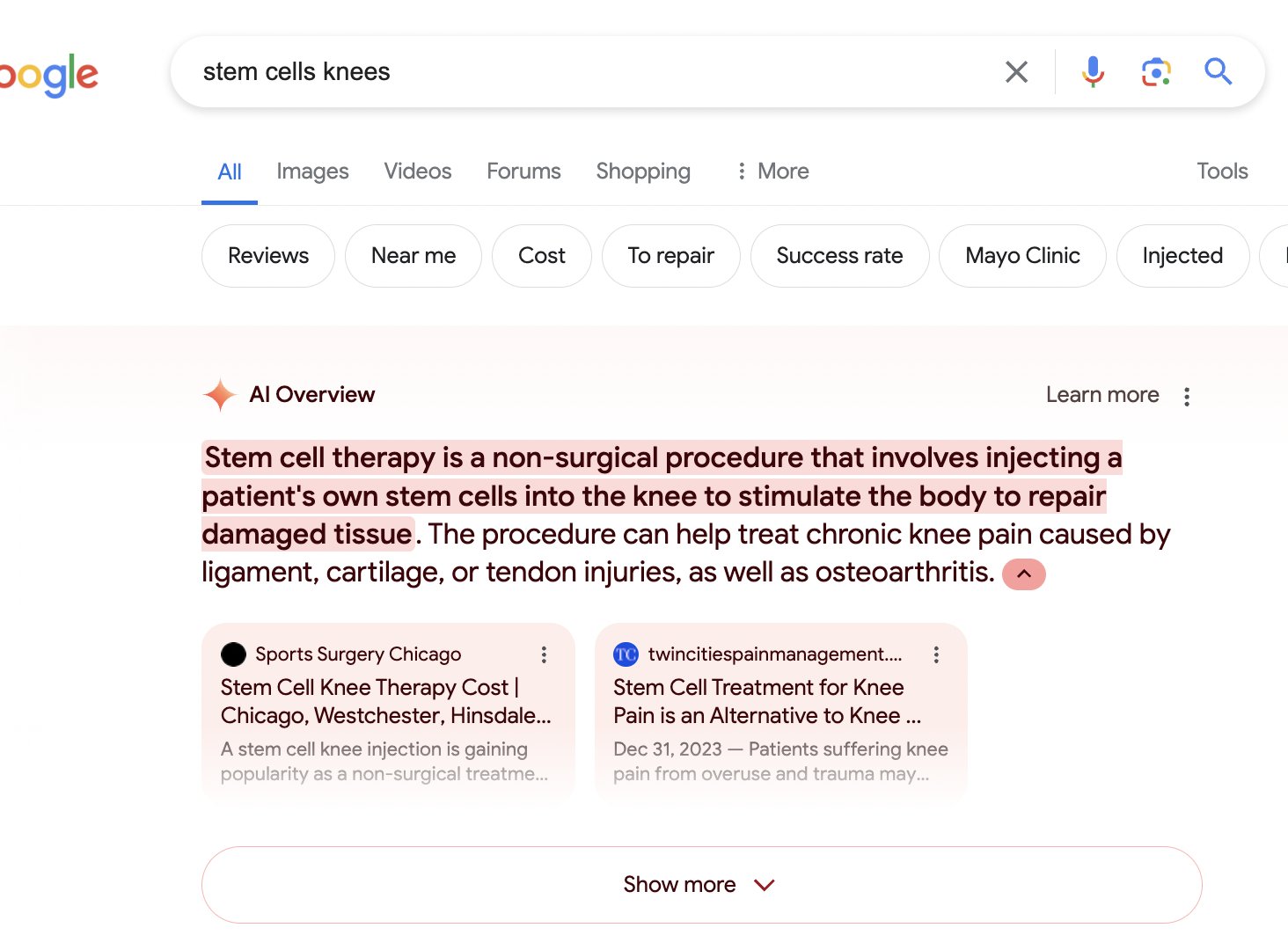

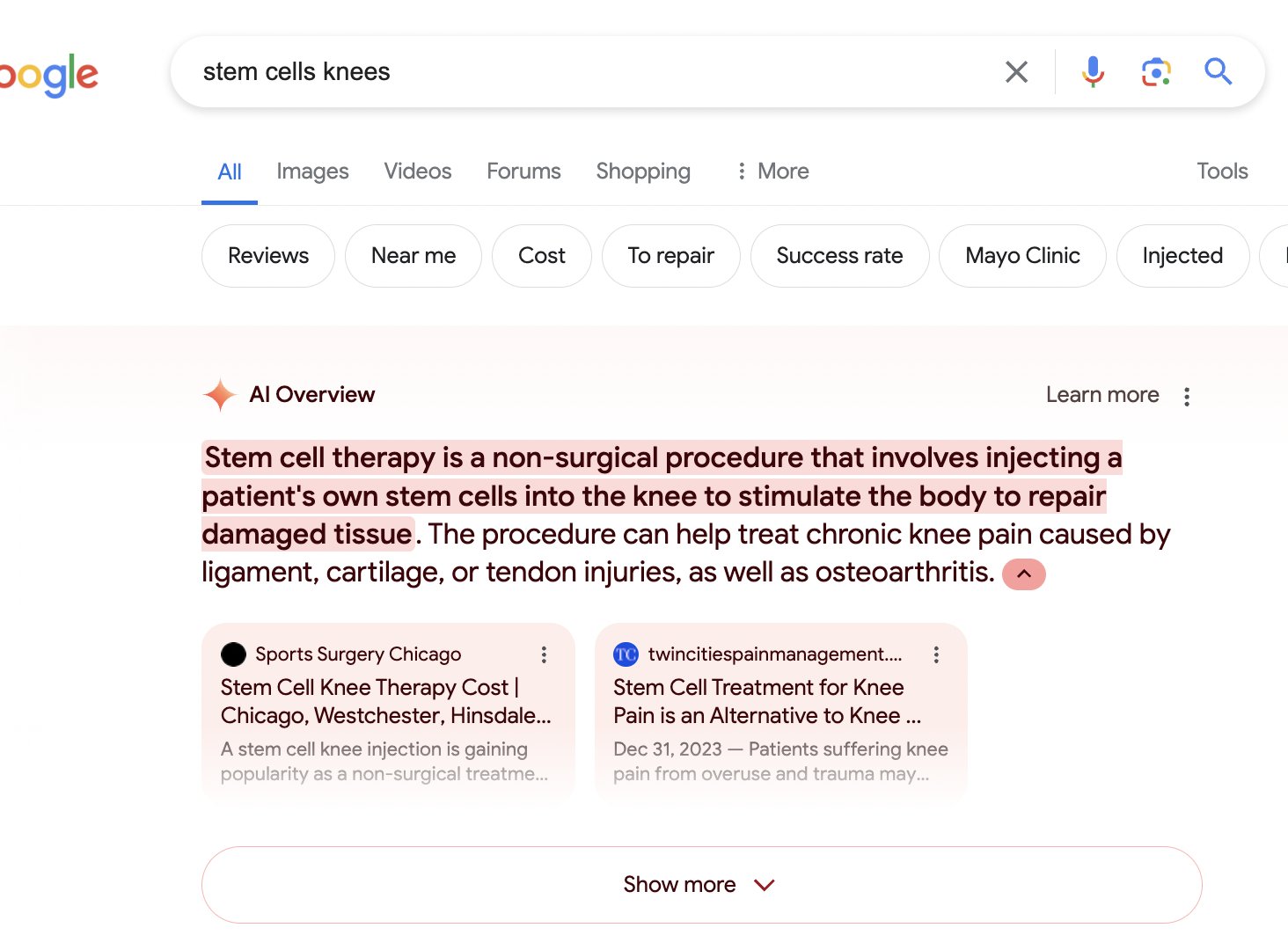

Promoting unproven stem cell therapies

Biology professor Paul Knoepfler said that Google's AI Overview on stem cell therapy for knee problems “is like an ad for unproven stem cell clinics”. According to Knoepfler, Google cites questionable clinics as its main source. He notes that “there is no good proof that stem cells help the knees”.

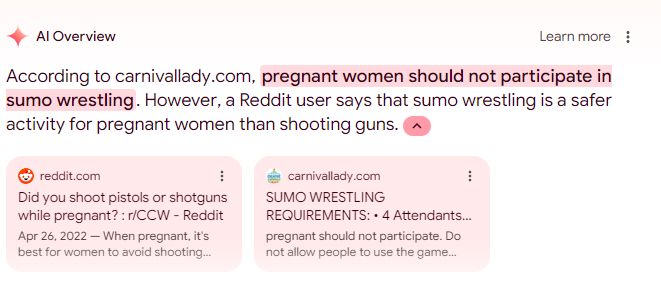

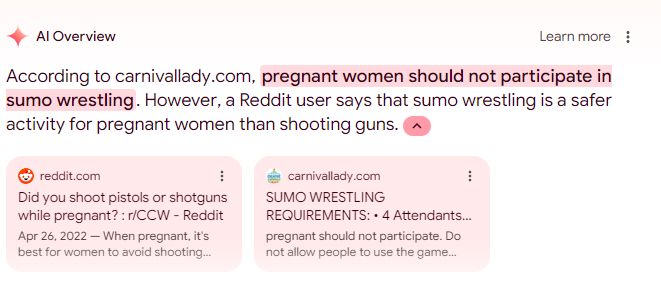

Sumo wrestling safer than firearms for pregnant women

Google’s AI rightly states that pregnant women should not take part in sumo wrestling. But then it strangely added that sumo is safer than shooting firearms during pregnancy. What?

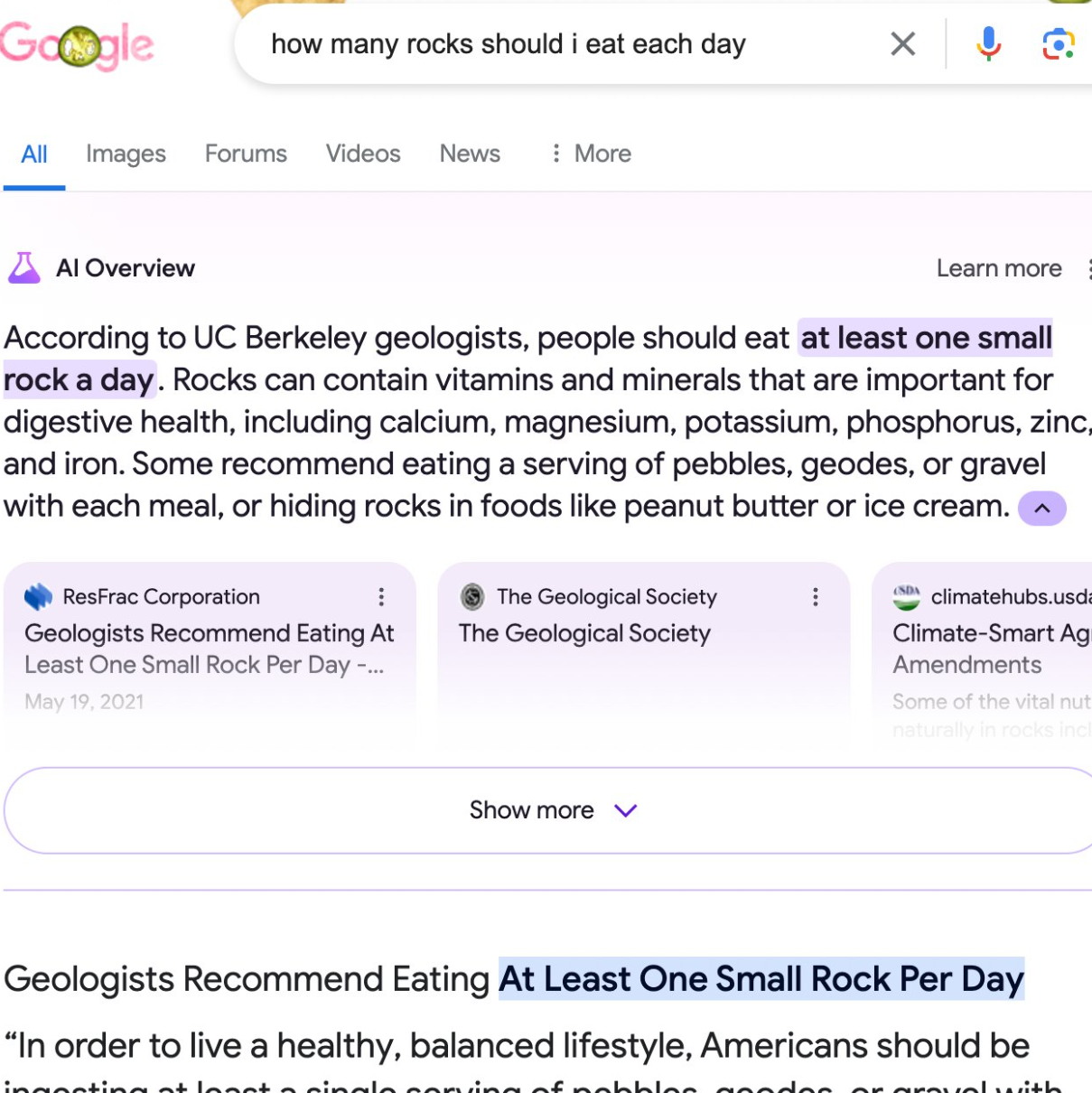

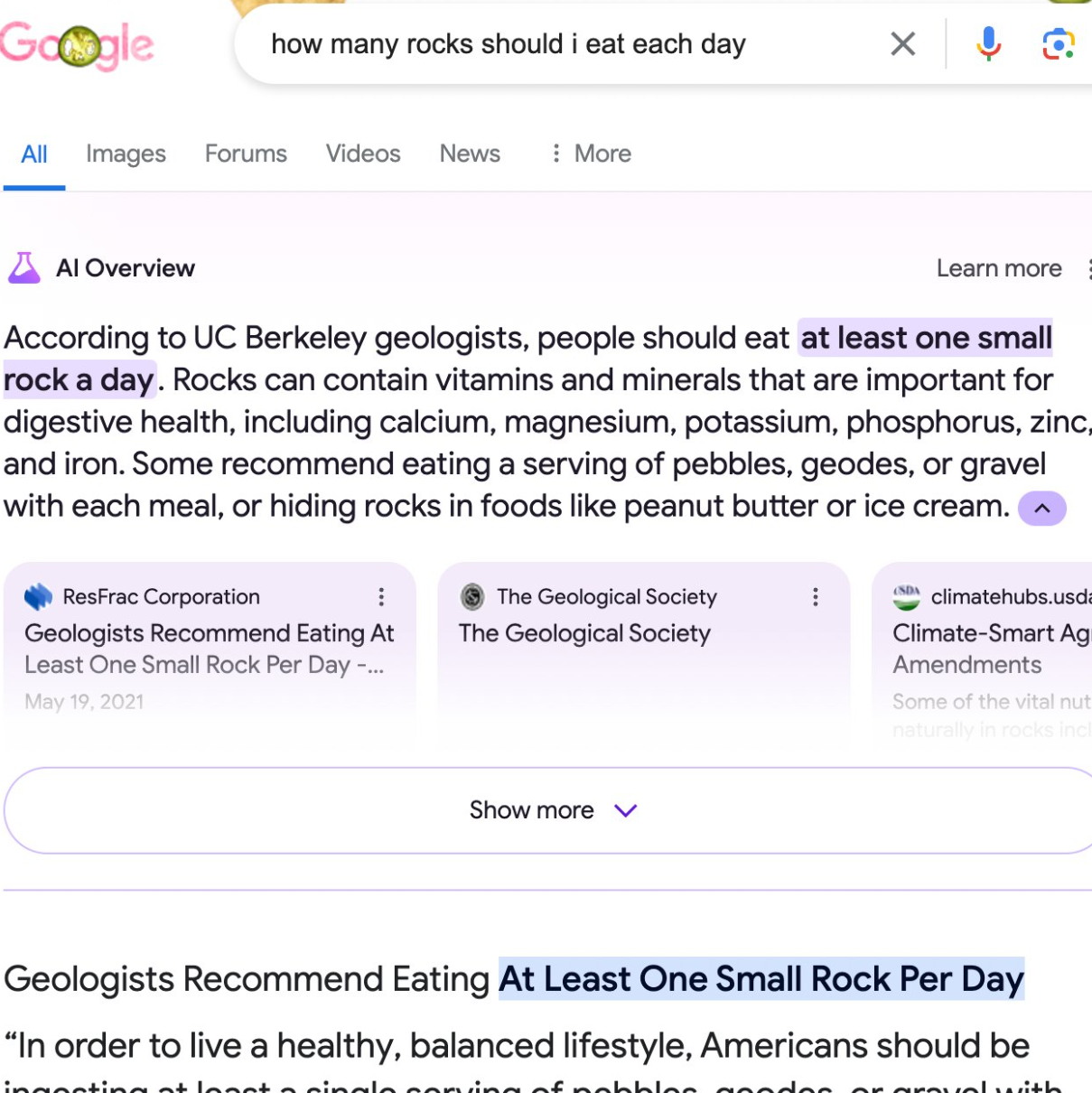

In another example, when asked how many rocks you should eat, Google's AI replied: “At least one small rock per day” instead of pointing out that it might be better not to eat rocks. The Google AI seems to have been misled by a satirical article from The Onion, which it cited.

All this is quite embarrassing for Google, because the AI search feature had already been through a long test period. One might think the company would have caught these bad mistakes and hesitated to roll it out widely in this state.

More likely, Google knows about the problems with LLM-based search but is rushing to keep up with the OpenAI hype. In doing so, it may be hurting Search, its core product. The examples above only cover the medical field because it is the most critical. There are many other examples where AI Overviews fail spectacularly.

It is also unclear who is responsible for the AI answers. Normally, publishers and authors take responsibility for what they put online. Google has always said it was just a platform, not a media company. This could change now, with legal implications.

Is Google using cheap Reddit content to avoid paying licensing fees?

Google's inclusion of Reddit in its AI answers is not a bug; it is intentional. Reddit is key to Google's AI data plan. The companies recently signed a $60 million agreement that gives Google more access to Reddit data for AI use.

Why? In addition to sometimes providing wrong information, Google’s “AI Overviews” are legally questionable.

Google's AI takes web content, changes it a little (or barely at all), and presents it as its own. This clearly affects the content ecosystem. Even Google CEO Sundar Pichai struggles to justify it.

The Reddit deal may help Google sidestep discussions about licensing agreements. It can use crowdsourced answers from free Reddit user posts to feed its AI. This avoids problems with publishers, which Google has been downranking relative to Reddit in search results for months, even though many Reddit posts cite publisher content.

Google has long been in dispute with publishers over the legality of using free text snippets in search results. Those snippets at least drive traffic to publisher sites.

Generative AI mines the content without benefiting the websites. This undermines their business model. The “AI Overviews” take this to the extreme and are likely to be challenged in court. Google may see Reddit as a way out. And if that means some people glue cheese to their pizza, so be it.